If you had to nominate one modern technology as a mind-reading device, fMRI looks like a good bet. By measuring blood flow, fMRI can track activity in your brain, and that opens a window onto your mind; it may even let us figure out what your eyes are seeing at any given moment. The ATR Computational Neuroscience Laboratories in Kyoto, Japan, can show a geometric pattern to a test subject and then have a computer program recreate that image by analyzing brain activity recorded with fMRI (NIPS 2009). Scientists at UC Berkeley have used fMRI of the visual cortex both to encode images as brain activity and to decode brain activity back into images. In other words, for a given image they can predict how your brain will react, and for a given brain reaction they can work out the image that would cause it. Researchers at UCB have even managed to do the same with video: their decoding system can create a rough facsimile of what a subject was watching at the time. This is incredible! I had a chance to talk with Jack Gallant of UC Berkeley about these attempts to see what the brain sees. While this technology is still in its very early stages, the work already finished is truly astounding. Check out a video discussing ATR, and pics of research from UCB, after the break.

fMRI has been used in several different attempts to “read minds”. Carnegie Mellon is working on a system that can track how your brain responds when you see different words. When you read “apple”, the CMU system can measure your brain activity and know you weren’t looking at “badger” or “football”. Similarly, Microsoft Research has used EEG to categorize which kinds of images you are watching (an animal versus a face, for instance). They may use the technology to help tag images automatically at high speed. Work at ATR and UCB, however, takes a step in a different direction. These groups are asking: “if I can monitor your brain, can I reconstruct the image that was on your retina?” They are actively pursuing a way to take brain activity and translate it into something other humans could look at and understand.

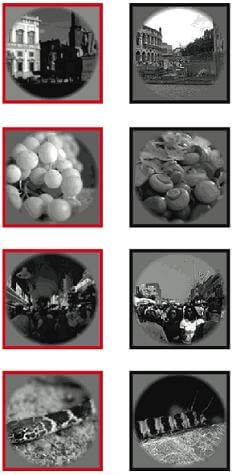

The work at UCB, headed by Jack Gallant, has been underway for several years. He, Thomas Naselaris, and colleagues showed that when a subject viewed an image drawn at random from a database of thousands, a computer program monitoring their brain activity through fMRI could identify which image it was (as published in Nature, 2008). In September of 2009 they took that work one step further and developed a way to combine semantic, structural, and prior information to reconstruct an image accurately (as published in Neuron). Were you staring at a house on a river? UCB’s computer program can use structural and semantic clues to figure this out and choose, from a large set of stored images, the ones that best agree with the one in front of you. It may not be the same house on a river, but it will look close.
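To make “combining structural, semantic, and prior information” a little more concrete, here is a toy sketch in Python. It is not the UCB code. It assumes each candidate image already comes with a hypothetical predicted response for “structural” voxels, a predicted response for “semantic” voxels, and a log prior, then scores each candidate by adding Gaussian log-likelihoods to that prior and picks the top scorer. The names `gaussian_log_likelihood`, `best_candidate`, and the candidate fields are invented for illustration.

```python
from typing import Dict, List, TypedDict

Vector = List[float]


class Candidate(TypedDict):
    structural: Vector  # hypothetical predicted response of "structural" voxels
    semantic: Vector    # hypothetical predicted response of "semantic" voxels
    log_prior: float    # hypothetical log prior probability of this image


def gaussian_log_likelihood(measured: Vector, predicted: Vector,
                            noise_var: float = 1.0) -> float:
    """Log-likelihood under independent Gaussian noise, dropping constant terms."""
    return -sum((m - p) ** 2 for m, p in zip(measured, predicted)) / (2.0 * noise_var)


def best_candidate(measured_structural: Vector, measured_semantic: Vector,
                   candidates: Dict[str, Candidate]) -> str:
    """Return the candidate with the highest structural + semantic + prior score."""
    def score(name: str) -> float:
        c = candidates[name]
        return (gaussian_log_likelihood(measured_structural, c["structural"])
                + gaussian_log_likelihood(measured_semantic, c["semantic"])
                + c["log_prior"])
    return max(candidates, key=score)


# Hypothetical library of two candidate images with equal priors.
candidates: Dict[str, Candidate] = {
    "house_on_river": {"structural": [1.0, 0.0], "semantic": [1.0, 0.0], "log_prior": -0.7},
    "city_street": {"structural": [0.0, 1.0], "semantic": [0.0, 1.0], "log_prior": -0.7},
}
print(best_candidate([0.9, 0.1], [0.8, 0.2], candidates))  # house_on_river
```

When the brain data are ambiguous, the two likelihood terms tie and the prior decides, which is the intuition behind letting a set of natural images constrain the guess.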

The semantic input is an interesting twist. As with the work done at CMU and Microsoft, UCB’s work sheds some light on the function of voxels (volumetric pixels, basically small 3D sections of the brain) in different locations. One group of voxels might process a particular kind of visual structure, while another might categorize a particular set of objects. While Gallant is adamant that fMRI is actually a clumsy tool (“we only work with it because it’s the best we have”), it can help scientists label patterns of voxels as coding for different kinds of images. Look at a face and one part of your brain lights up; look at a truck and another part is activated. Get enough of these semantic voxels labeled, and you’ve got a good tool to help identify any image you might show a subject. It seems likely that the first mind-reading machines will need to use such voxel-based semantic clues to help them guess what you’re seeing.
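The idea of labeling voxels by what drives them, then using those labels to guess what someone is looking at, fits in a few lines of Python. This is a deliberately crude toy, not the method used at UCB, CMU, or Microsoft: each hypothetical voxel is labeled with the category that produces its highest average response, and a new scan is assigned to the category whose labeled voxels are most active in total. The functions and the training data are made up for illustration.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

Trial = Tuple[str, List[float]]  # (category shown, response of each voxel)


def label_voxels(trials: List[Trial]) -> List[str]:
    """Label each voxel with the category that gives it the highest mean response."""
    by_category: Dict[str, List[List[float]]] = defaultdict(list)
    for category, responses in trials:
        by_category[category].append(responses)
    n_voxels = len(trials[0][1])
    labels = []
    for v in range(n_voxels):
        # Average voxel v's response within each category and keep the winner.
        labels.append(max(by_category, key=lambda c: mean(r[v] for r in by_category[c])))
    return labels


def guess_category(activity: List[float], labels: List[str]) -> str:
    """Sum activity over voxels sharing a label and return the most active label."""
    totals: Dict[str, float] = defaultdict(float)
    for a, label in zip(activity, labels):
        totals[label] += a
    return max(totals, key=totals.get)


# Hypothetical scans: three voxels, two categories, two trials each.
training = [
    ("face", [2.0, 0.1, 0.2]),
    ("face", [1.8, 0.3, 0.1]),
    ("truck", [0.2, 1.9, 0.1]),
    ("truck", [0.1, 2.1, 0.3]),
]
labels = label_voxels(training)
print(labels)                                   # ['face', 'truck', 'truck']
print(guess_category([1.5, 0.2, 0.1], labels))  # face
```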

What I find really exciting about the research at UCB is that they’ve already started to decode moving images. As reported at the Society for Neuroscience, Shinji Nishimoto (part of Gallant’s team) showed test subjects a long series of videos (hours of DVD trailers) while recording their brain activity with fMRI to understand how the brain encodes video data. A computer program then used this encoding model to predict how the subject’s brain should respond to other videos (taken from YouTube). According to Gallant, the total length of this YouTube footage was equal to about 6 months of continuous video. That’s a lot of “Charlie Bit My Finger”!
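The encoding step is essentially a regression problem: learn, for each voxel, how features of the video predict that voxel’s response, then use the fitted weights to predict responses to clips the subject never saw. Regularized (ridge) linear regression is a common choice for this kind of voxel-wise model; the sketch below fits one in pure Python by plain gradient descent. It is an illustration under stated assumptions, not Nishimoto’s code: the two-number feature vectors are hypothetical stand-ins for whatever a real model extracts from each second of video, and the function names are invented.

```python
from typing import List

Vector = List[float]


def fit_encoding_model(features: List[Vector], responses: List[Vector],
                       ridge: float = 0.0, lr: float = 0.05,
                       steps: int = 2000) -> List[Vector]:
    """Fit one linear weight vector per voxel by gradient descent.

    Minimizes (mean squared error) / 2 + ridge / 2 * ||w||^2.
    features[t] describes the stimulus at time t; responses[t][v] is voxel v's response.
    """
    n_samples = len(features)
    n_features = len(features[0])
    n_voxels = len(responses[0])
    weights: List[Vector] = []
    for v in range(n_voxels):
        w = [0.0] * n_features
        for _ in range(steps):
            # Gradient of the ridge penalty, then add the data-fit gradient.
            grad = [ridge * wj for wj in w]
            for x, y in zip(features, responses):
                err = sum(wj * xj for wj, xj in zip(w, x)) - y[v]
                for j in range(n_features):
                    grad[j] += err * x[j] / n_samples
            w = [wj - lr * gj for wj, gj in zip(w, grad)]
        weights.append(w)
    return weights


def predict_response(weights: List[Vector], x: Vector) -> Vector:
    """Predict every voxel's response to a new stimulus feature vector x."""
    return [sum(wj * xj for wj, xj in zip(w, x)) for w in weights]


# Hypothetical training data: 2 stimulus features, 2 voxels.
train_x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
train_y = [[2.0, 0.5], [-1.0, 0.5], [1.0, 1.0], [3.0, 1.5]]
weights = fit_encoding_model(train_x, train_y)
print([round(r, 2) for r in predict_response(weights, [0.5, 2.0])])  # [-1.0, 1.25]
```

Raising `ridge` shrinks the weights toward zero, which in real data with thousands of features helps keep the model from simply memorizing the training trailers.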

So now you have a computer program that knows pretty well what each video in its collection should do to someone’s brain if they watched it. What do you do next? You show a test subject a completely new video (“the target”). The computer reads their brain activity and asks: which of my video clips best fits this new activity? It finds the best-matching one-second clip and adds it to a compilation. As the subject keeps watching the target video, the computer assembles this Frankenstein’s monster of clips. The end result is a movie montage that jumps back and forth among thousands of videos. That montage, surprisingly, gives a fairly accurate portrayal of what the test subject saw in the target.
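As described here, decoding boils down to a nearest-match search repeated once per second. The hedged sketch below assumes a predicted brain response is already available for every one-second clip in the library (from some previously fitted encoding model), compares each second of measured activity with every prediction using Pearson correlation, and appends the best-matching clip to the montage. The clip names and response vectors are hypothetical, and correlation is our choice of similarity measure for the sketch, not necessarily UCB’s.

```python
import math
from typing import Dict, List

Vector = List[float]


def pearson(a: Vector, b: Vector) -> float:
    """Pearson correlation of two equal-length vectors (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den else 0.0


def assemble_montage(measured: List[Vector], library: Dict[str, Vector]) -> List[str]:
    """For each second of measured activity, pick the library clip whose
    predicted response correlates best with it."""
    montage = []
    for activity in measured:
        best = max(library, key=lambda clip: pearson(activity, library[clip]))
        montage.append(best)
    return montage


# Hypothetical predicted responses (4 voxels) for three one-second clips.
library = {
    "cat_video": [1.0, 0.0, 0.0, 1.0],
    "car_chase": [0.0, 1.0, 1.0, 0.0],
    "talking_head": [1.0, 1.0, 0.0, 0.0],
}
# Two seconds of hypothetical measured activity from the target video.
measured = [[0.9, 0.1, 0.0, 1.1], [0.1, 0.8, 0.9, 0.2]]
print(assemble_montage(measured, library))  # ['cat_video', 'car_chase']
```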

According to Gallant, fMRI is really limited in its spatial and temporal resolution. You can’t get much better than one second, and voxels are far larger than individual neurons. Still, the fact that we can scan a brain and reconstruct what its owner is looking at in any form is astounding. Whatever technology replaces fMRI with better resolution is only going to increase the accuracy of these reconstructed images.

As always when computers are reaching toward your brain, the fear of invasion of privacy becomes paramount. We’ve already seen how governments are planning to use brain scans in security checks. Could they start judging us for what we see? Could they even know what we are dreaming?

Possibly yes, but not yet. Brain scanning science is still in its (rather lengthy) infancy. And fMRI, the best technology at this point for reading brain activity, requires you to hold perfectly still inside a huge machine with powerful magnets. Not the sort of thing you could secretly install in someone’s home. One day, though, it’s possible we’ll engineer a device that can really capture exactly what you are seeing (or imagining) and record it. Memories could be recorded directly. Maybe even more abstract concepts could be observed: ATR is working to categorize visual art by the way it stimulates brain activity. Once we get machines to look inside our brains, it’s only a short hop to getting our brains linked together. Borg, here we come!

[image credit: UC Berkeley]

[screen capture and video credit: NTD World News]

[sources: Nature, Neuron, Jack Gallant, ATR Website (Google Translated), NIPS]