MIT Uses Xbox Kinect to Create Minority Report Interface

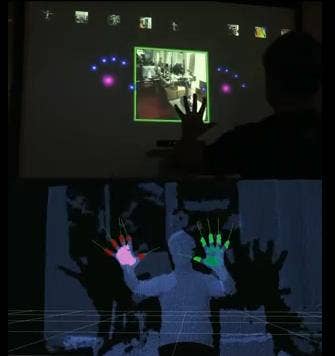

In the movie Minority Report, Tom Cruise uses an advanced user interface system that lets him control media files with nothing more than a gesture from his gloved hands. He could do the same now with little more than an Xbox Kinect. A member of the research staff at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) has created a Minority Report interface using the Kinect 3D sensor and a bunch of open source software. Not only does the sensor cost just $150, but you can use it without any dorky gloves. Garratt Gallagher, the interface's creator, shows off some basic gesture controls in the video below. It really does look like it came straight out of the movie. Awesome.

Did Microsoft understand what they were doing when they put a $150 3D motion tracker on the market? This thing is a hacker's dream come true! We've seen some amazing projects recently that take advantage of the Kinect sensor. A student at MIT (not Gallagher) hooked a Kinect up to a robot and had it detecting people and following gesture commands in no time. Everywhere you go on YouTube you'll see new projects that use the Kinect as everything from a range finder to a puppet control system. Gallagher's Minority Report interface, however, stands out as one of the more impressive displays of the sensor's accuracy. It can detect hands from among 60,000 points of data collected by the Kinect (see the dots in the bottom half of the video), and it can track the motion of hands and fingers at 30 frames per second. That's more than fast enough for practical applications, as you can see:

Compare Gallagher's setup to the original system shown in the movie. Tom Cruise's interface may be flashier, but I'd say the two are pretty damn similar. And again, no gloves needed for Gallagher.

Regular Singularity Hub readers will remember that the scientist who was asked to design the original interface shown in the movie, John Underkoffler, is also from MIT. He's spent the last several years turning his concept into a real-world system called G-speak, and his company, Oblong, is actively perfecting that platform for market. Gallagher's creation has to be a kick in the pants to Underkoffler. It may not look as nice as G-speak, but it's certainly much cheaper.

And Gallagher did it all using open source software! He relied on libfreenect as the driver to get the Kinect talking to Linux. The Point Cloud Library (PCL) handles the complex geometry created by the enormous number of points detected by the Kinect. PCL is being developed for the Robot Operating System (ROS) by Gallagher and others at MIT. Gallagher also created his own hand detection tools for ROS, which are pretty cool.
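The article doesn't say how Gallagher's hand detector works, so what follows is only a rough illustration, not his method. One simple heuristic for pulling a hand out of a depth sensor's point cloud is to assume the hand is the object closest to the camera: find the nearest point, then keep every point within a small radius of it. The coordinate convention (meters, z as distance from the sensor), the 0.10 m radius, and the function names `segment_hand` and `centroid` are all hypothetical choices made for this sketch.

```python
import math
from typing import List, Tuple

# (x, y, z) in meters, with z = distance from the sensor (an assumed convention)
Point = Tuple[float, float, float]


def segment_hand(cloud: List[Point], radius: float = 0.10) -> List[Point]:
    """Keep the points within `radius` meters of the point nearest the sensor.

    Heuristic assumption: the user's hand is the object closest to the camera.
    """
    # Drop points with no usable depth (treated here as NaN or non-positive z).
    valid = [p for p in cloud if all(math.isfinite(c) for c in p) and p[2] > 0]
    if not valid:
        return []
    nearest = min(valid, key=lambda p: p[2])
    # Everything close enough to the nearest point is taken as part of the hand.
    return [p for p in valid if math.dist(p, nearest) <= radius]


def centroid(points: List[Point]) -> Point:
    """Average position of the segmented points, i.e. a rough hand location."""
    if not points:
        raise ValueError("centroid of an empty point set is undefined")
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


if __name__ == "__main__":
    # Toy cloud: three "hand" points about 0.6 m away, two "body" points
    # about 1.5 m away, and one point with no depth reading.
    cloud = [
        (0.00, 0.00, 0.60),
        (0.02, 0.01, 0.62),
        (-0.03, 0.02, 0.65),
        (0.10, 0.20, 1.50),
        (0.00, -0.30, 1.60),
        (float("nan"), float("nan"), float("nan")),
    ]
    hand = segment_hand(cloud)
    print(len(hand), centroid(hand))
```

Tracking would mean running this once per frame. Each call makes a few linear passes over the cloud, and at the article's figures of 60,000 points per frame and 30 frames per second that is 1.8 million points per second, which is why a real system would likely lean on an optimized library such as PCL rather than pure Python.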

The great thing about all these open source packages is that they can be used, modified, and shared for free. In fact, a few weeks ago Gallagher demonstrated a remarkably similar setup he used to create a virtual piano you can play with the Kinect. Watch it in the video below. Open source software is the path to wonderfully accelerated research...and fun musical instruments!

According to CSAIL, Gallagher wants the Minority Report interface to inspire new research with robots. He plans to make the Kinect part of a system that guides robotic manipulation. Other major robot developers, like Willow Garage, are working on similar projects. Having played with the Kinect the way it was intended, as a video game controller for the Xbox, I have to say that I like these applications much better. Hitting a virtual ping pong ball with your hand is mildly enjoyable. Controlling a real-world robot with the flick of a wrist is frikkin' awesome. Kudos, Gallagher, and keep up the good work!

[screen capture and video credit: MIT CSAIL]

Source: MIT CSAIL
