51 Percent Of Total Online Traffic is Non-Human


It is probably no surprise to most people that much of online traffic isn't human. Hacker software, spam, and innocuous data collection by search engines all get their slice of the bandwidth pie. What might surprise you is exactly how much bandwidth is consumed by humans versus non-humans: it's pretty much an even split.

Actually, a slight lead goes to the non-human, web-surfing robots.

According to a report by Internet security company Incapsula, 51 percent of total online traffic is non-human. There's more bad news. Search engines and other benevolent bots account for only 20 of those 51 percentage points; the other 31 belong to the bad bots.

Here’s the breakdown:

- 49 percent human traffic, 51 percent non-human traffic

Non-human traffic:

- 5 percent hacking tools

- 5 percent “scrapers,” software that posts the contents of your website to other websites, steals email addresses for spamming, or reverse engineers your website’s pricing and business models

- 2 percent comment spammers

- 19 percent other sorts of spies: competitive analyzers that sift your website for keyword and SEO (search engine optimization) data to gain a competitive edge in climbing the search engine ladder

- 20 percent search engines and other benevolent bot traffic
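
For readers who want to check the arithmetic, here is a minimal Python sketch that adds up the shares listed above. The dictionary keys are shorthand labels chosen for this example, not Incapsula's own category names, and every figure is a percentage of total traffic.

```python
# Shares of total traffic, in percent, copied from the breakdown above.
# The key names are shorthand for this sketch, not Incapsula's terminology.
non_human = {
    "hacking tools": 5,
    "scrapers": 5,
    "comment spammers": 2,
    "other spies": 19,
    "search engines and benevolent bots": 20,
}
human = 49

# Everything except the benevolent bots counts as a "bad bot" here.
benign = non_human["search engines and benevolent bots"]
total_non_human = sum(non_human.values())
malicious = total_non_human - benign

print(f"bad bots: {malicious} percent")
print(f"all non-human traffic: {total_non_human} percent")
print(f"human plus non-human: {human + total_non_human} percent")
```

The four malicious categories sum to 31 percent, and adding the 20 percent of benevolent bots gives the 51 percent headline figure.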

The report was based on data compiled from 1,000 of Incapsula's customer websites.


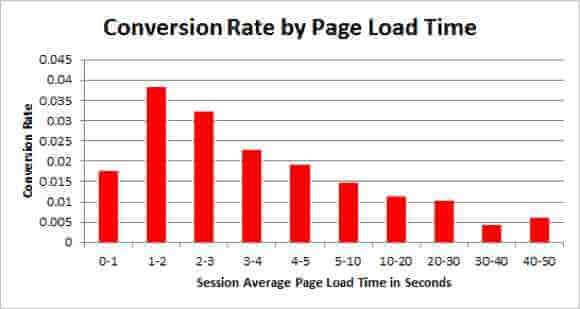

We always knew it was us against the machines. But until now the arms race had generally referred to virus versus anti-virus, malware versus anti-malware. Symantec wasn’t warning us about the perils of non-human traffic. For web-based business owners, though, that extra traffic can turn into lost business. Tagman recently reported that a delay of just one second in webpage load time decreases page views by 11 percent, customer satisfaction by 16 percent, and conversions (the number of people who buy something divided by the number of visits) by 6.7 percent. Incapsula says it’s easy enough to block the hacker, scraper, and spam software, but that most website owners aren’t equipped to spot the infiltrators.
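
To make the conversion figure concrete, here is a small Python sketch of the definition used above: buyers divided by visits. The store numbers are hypothetical, invented purely for illustration, and the sketch assumes the 6.7 percent drop is relative to the baseline rate, which the Tagman figure as quoted does not spell out.

```python
def conversion_rate(buyers: int, visits: int) -> float:
    """Number of people who buy something divided by the number of visits."""
    if visits <= 0:
        raise ValueError("visits must be positive")
    return buyers / visits


# Hypothetical store figures, made up for this example only.
visits = 10_000
buyers = 200
baseline = conversion_rate(buyers, visits)

# Assumption: the reported 6.7 percent decrease is relative to the baseline.
RELATIVE_DROP = 0.067
slowed = baseline * (1 - RELATIVE_DROP)

print(f"baseline conversion rate: {baseline:.2%}")
print(f"after a one-second delay: {slowed:.2%}")
```

Under these assumptions, a store converting 2 percent of its visits would slip to just under 1.9 percent.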

We already knew that robots did some amazing things. Now we learn that they're doing things we weren't even aware of, or at least not to this extent. They're not alive, yet they're surfing the web more than we are. So who will win in the end, human or machine? Better monitoring tools, offered for free, would help. You can't get rid of the critters if you don't know they're there in the first place.

[image credits: Incapsula, Tagman, and Average Bro]


Peter Murray was born in Boston in 1973. He earned a PhD in neuroscience at the University of Maryland, Baltimore, studying gene expression in the neocortex. Following his dissertation work, he spent three years as a post-doctoral fellow at the same university studying brain mechanisms of pain and motor control. He completed a collection of short stories in 2010 and has been writing for Singularity Hub since March 2011.
