Automated Grading Software In Development To Score Essays As Accurately As Humans

April 30 marks the deadline for a contest challenging software developers to create an automated scorer of student essays, otherwise known as a roboreader, that performs as well as a human expert grader. In January, the Hewlett Foundation of Hewlett-Packard fame introduced the Automated Student Assessment Prize (ASAP...get it?), offering up $100,000 in awards to "data scientists and machine learning specialists" to develop the application. In sponsoring this contest, the Foundation has two goals in mind: improve the standardized testing industry and advance technology in public education.

The contest is only the first of three, with the others aimed at developing automated graders for short answers and charts and graphs. But the first challenge for the nearly 150 teams participating is to prove their software has the spell checking capabilities of Google, the insights of Grammar Girl, and the English language chops of Strunk's Elements of Style. Yet the stakes are much higher for developing automated essay scoring software than the relatively paltry $60,000 first-place prize reflects.

Developers of reliable roboreaders will not just rake in massive loads of cash thrown at them by standardized testing companies, educational publishers, and school districts, but they'll potentially change the way writing is taught forever.

It's hard to comprehend exactly how transformative the Bush Administration's No Child Left Behind reform effort has been to U.S. education, but one consequence of the law is that it fueled reliance on standardized testing to gauge student performance. In 2001, the size of the industry was somewhere in the $400 million to $700 million range, but today, K-12 testing is estimated to be a $2.7 billion industry. Even though the stakes are incredibly high for school districts, which must perform well on tests or lose funding, the technology used to grade the exams is fundamentally the same as what has been around for over 50 years. That's right, it's optical mark recognition, better known as Scantron machines, which work incredibly well for true/false and multiple choice questions.

But many tests include some assessment of student writing, and for those sections, testing companies need human graders. A recent exposé of this industry reveals that tens of thousands of scorers are employed temporarily in the spring to grade these test sections under high stress in "essay-scoring sweatshops." It's sobering to think that the measure of a school's performance rests on the judgment of a solitary temp worker earning between $11 and $13 an hour while plowing through stacks of exams to assess responses to the prompt "What's your goal in life?"

That's not to say that these temps aren't qualified or don't work hard, or even that their jobs are expendable. However, when the lives of hundreds or even thousands of children, as well as those of parents, teachers, and administrators, can be disrupted by poor marks for essay writing, the development of a roboreader that can grade like a human being is clearly long overdue.

Although the demand for automated scoring software is about bringing greater reliability to standardized testing, the same technology could quickly become a teacher's best friend. That's because if you ask educators what the worst part of their job is, they'll likely say "grading," especially if they use short- and long-answer questions or they teach English and must read student essays. Because of this, it's common for teachers to rely on assessments that lend themselves to easier scoring, such as true/false, multiple choice, fill-in-the-blank, and matching questions. Educators can't really be faulted for this, especially as class sizes have grown along with the demand for more assessments.

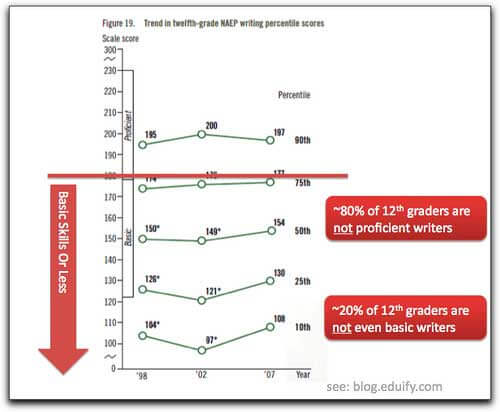

Yet students need to improve their writing skills now more than ever. Studies from the National Center for Education Statistics (NCES) conducted since 1998 consistently show that 4 out of 5 12th grade students in the U.S. are not proficient writers and approximately 1 in 5 are not even basic writers.

Unfortunately, digital technology has made everyone's writing skills open for public evaluation and critique. Whether it's emails, tweets, status updates, comments, threads, eBay descriptions, blog posts, resumes, product reviews, or articles, we are increasingly writing and being read. In the information age, writing is our primary means of expression and communication in personal, professional, and public forums. As mobile use increases and our lives become more digital by the day, the need for strong writing grows. And for nearly everyone, the foundation for this vital skill set is built from years of writing assignments during the first two decades of life.

Still, there has been resistance to roboreaders, which is primarily rooted in the fear of two outcomes: they'll make teachers either lazy or obsolete. One only needs to recall an easily overlooked technology to see how these fears are unfounded: spell checkers. Around since before PCs even existed (the first was developed in 1966 at MIT), spell checkers quickly became a staple of word processors in the 1980s. They're so standard in programs now that the only critique anyone really has is that they aren't 100 percent accurate (as has been captured for your viewing pleasure by Damn You, Autocorrect). Spell checkers have been criticized for making students poorer spellers, but perhaps that's because the technology hasn't been used directly to help people improve their spelling. Or maybe, like so many other things, correct spelling is something humans can relegate to computers and not really lose out on anything.

Spelling, grammar, sentence and paragraph structure, and even style are the machinery of language, and they follow hierarchies of rules that translate well into logic-based computer code. Back in 2007, Peter Norvig of Google fame demonstrated how a spell checker could be written in about 20 lines of Python code using probability theory. Consider, too, how Wolfram Alpha has automated the understanding of language, fueling voice-responsive apps like Siri into common use, with similar apps like Evi on the horizon. So through the combination of various approaches, assessing the quality of someone's writing is ripe for automation with the proper algorithms. Inherently, roboreaders are no different from any other form of automation. They are effectively taking over the manual labor of language analysis, which will give English teachers the freedom to focus on what they are passionate about anyway: creativity, ideas, and patterns of thinking, all of which may be what differentiates humans from the machines of the future.
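To make the spell checker idea concrete, here is a minimal sketch in the spirit of a probability-based corrector. It is not Norvig's original code. The tiny `CORPUS` string and the names `probability`, `edits1`, `known`, and `correct` are assumptions chosen for illustration, and a real corrector would count word frequencies from a far larger body of text. The idea is to generate every string one edit away from the input and pick the candidate that appears most often in the corpus.

```python
import re
from collections import Counter

# Hypothetical toy corpus (an assumption for this sketch); a real
# corrector would learn word counts from millions of words of text.
CORPUS = """
the students write essays and the teachers grade the essays
writing is a skill and spelling is part of writing
the grader reads the essay and scores the essay
"""

WORDS = Counter(re.findall(r"[a-z]+", CORPUS.lower()))
TOTAL = sum(WORDS.values())
LETTERS = "abcdefghijklmnopqrstuvwxyz"


def probability(word):
    """Estimate how likely a word is from its relative corpus frequency."""
    return WORDS[word] / TOTAL


def edits1(word):
    """Return every string that is one simple edit away from `word`."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [left + right[1:] for left, right in splits if right]
    transposes = [left + right[1] + right[0] + right[2:]
                  for left, right in splits if len(right) > 1]
    replaces = [left + c + right[1:]
                for left, right in splits if right for c in LETTERS]
    inserts = [left + c + right for left, right in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def known(words):
    """Keep only the candidates that actually occur in the corpus."""
    return {w for w in words if w in WORDS}


def correct(word):
    """Pick the most frequent known candidate, or return the word unchanged."""
    word = word.lower()
    candidates = known([word]) or known(edits1(word)) or {word}
    return max(candidates, key=probability)


if __name__ == "__main__":
    for typo in ["teh", "esay", "writting"]:
        print(typo, "->", correct(typo))
```

With this toy corpus, the sketch only recognizes the handful of words it has seen, so unfamiliar words pass through unchanged. Scoring an essay is a much harder problem, but the same pattern of counting evidence and choosing the most probable answer is the kind of approach the passage above has in mind.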

Education desperately needs tools to help improve student writing. Given the present economy and school budgets across the U.S., it is clear that the solution must be efficient, cost-effective, widely available, versatile, and easy to use. Roboreaders look like the best bet. If the Hewlett Foundation's contest leads to roboreaders that perform better than temp workers, they can be adapted for use by educators to help with grading, which will ultimately help students become better writers.

And just maybe, the same technology will be accessible to anyone who writes extensively in the digital space, helping everyone to improve their writing. For the sake of the English language on the web, here's hoping the contest achieves its goals.

[Media: Eduify]

David started writing for Singularity Hub in 2011 and served as editor-in-chief of the site from 2014 to 2017 and SU vice president of faculty, content, and curriculum from 2017 to 2019. His interests cover digital education, publishing, and media, but he'll always be a chemist at heart.
