America’s Titan Surpasses Sequoia as World’s Fastest Supercomputer

Last week, a throng of computer geeks descended on snowy Utah to show off, admire, and debate the future of the fastest computers on the planet. And of course, to find out which Boolean monster rules the roost. For the second time in 2012, a different supercomputer took top honors: Titan.

Titan is a Cray XK7 residing in Oak Ridge National Laboratory (ORNL). According to the November Top500 list of most powerful supercomputers, the system notched 17.59 petaFLOP/s (quadrillion floating point operations per second) as measured by the Linpack Benchmark. The previous mark of 16.32 petaFLOP/s was held by Lawrence Livermore National Laboratory’s BlueGene/Q system, Sequoia.
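To put those two Linpack scores side by side, here is a minimal sketch of the arithmetic. The function name `percent_faster` is ours, chosen purely for illustration; the two scores are the ones quoted above.

```python
# Linpack scores from the November 2012 Top500 list, as quoted in the article (petaFLOP/s).
TITAN_PFLOPS = 17.59
SEQUOIA_PFLOPS = 16.32


def percent_faster(new: float, old: float) -> float:
    """Return how much faster `new` is than `old`, as a percentage (illustrative helper)."""
    return (new - old) / old * 100.0


lead = percent_faster(TITAN_PFLOPS, SEQUOIA_PFLOPS)
print(f"Titan leads Sequoia by {lead:.1f}%")
```

By this measure Titan's margin over Sequoia is under ten percent, which is part of why the efficiency story matters as much as the raw ranking.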

While Titan is a new name, it is not an entirely new computer.

The system is a souped-up version of ORNL’s previous Top500 list champ, Jaguar (November 2009 to June 2010). Although Titan occupies the same footprint and consumes about the same power as Jaguar, it is almost ten times faster, a combination that earned Titan third place on the Green500 list of most power-efficient machines.

And that’s really a key point. Measuring and comparing speed is exciting, but the future of supercomputing depends on efficiency gains too.

In the last decade or so, engineers have increased computing power by building massively parallel systems. That is, engineers have been linking more and more processors and stuffing them into tighter and tighter spaces.

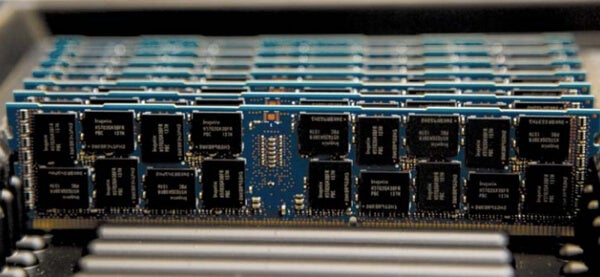

Indeed, the previous record holder, Sequoia, packs over 1.6 million processing cores. And to get from Jaguar to Titan, engineers increased the number of processing cores per node from 12 to 16—for a total of over 560,000.

But simply increasing the number of cores isn’t scalable long term—practically or economically. According to ORNL computational scientist Jim Hack, “We have ridden the current architecture about as far as we can.”

Jaguar was powered by 300,000 processors in 200 cabinets. Upping performance tenfold (as Titan has) by simply adding more of the same would have required “2,000 cabinets on the floor, consume 10 times the power, et cetera.”
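The back-of-the-envelope comparison can be sketched in a few lines. This is an illustration under stated assumptions, not ORNL's own model: the `System` class and `scale_by_adding_cabinets` helper are hypothetical, power and speed are expressed relative to Jaguar, and Titan's figures are rounded from the article (same footprint, about the same power, almost ten times the speed).

```python
from dataclasses import dataclass


@dataclass
class System:
    name: str
    cabinets: int
    power: float  # power draw relative to Jaguar (Jaguar = 1.0)
    speed: float  # speed relative to Jaguar (Jaguar = 1.0)

    def speed_per_power(self) -> float:
        """Relative speed delivered per unit of relative power."""
        return self.speed / self.power


def scale_by_adding_cabinets(base: System, factor: int) -> System:
    """Hypothetical brute-force upgrade: cabinets, power, and speed all grow by `factor`."""
    return System(f"{base.name} x{factor}", base.cabinets * factor,
                  base.power * factor, base.speed * factor)


jaguar = System("Jaguar", cabinets=200, power=1.0, speed=1.0)
brute_force = scale_by_adding_cabinets(jaguar, 10)
# Rounded from the article: same footprint, about the same power, almost 10x the speed.
titan = System("Titan", cabinets=200, power=1.0, speed=10.0)

for s in (jaguar, brute_force, titan):
    print(f"{s.name}: {s.cabinets} cabinets, {s.power:g}x power, "
          f"{s.speed:g}x speed, {s.speed_per_power():g}x speed per unit of power")
```

The brute-force route reaches the same speed but leaves speed per unit of power unchanged, while the rounded Titan figures imply roughly a tenfold gain on that measure.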

Titan is almost ten times speedier, yet it is about the same size and requires about the same amount of power as Jaguar. The system's superior efficiency is thanks in part to an improved Cray system interconnect, which increases communication volume and efficiency between processors. But Titan also makes use of another increasingly popular strategy.

Instead of relying on traditional CPUs to do all the heavy lifting and higher-level decision-making and communication, engineers are taking a page from high-performance gaming. Installed in each of Titan’s 16-core nodes is an NVIDIA Tesla K20X Graphics Processing Unit (GPU).

The GPU co-processor serves as the system workhorse—not terribly bright, but immensely powerful.

GPUs handle all the really heavy computational work superfast, leaving the CPUs to direct traffic. This specialization realizes some pretty awesome efficiency gains without sacrificing speed to get there.

Beyond Titan, China’s Tianhe-1A—Top500 champ in 2010, now #8 on the list—uses GPU co-processors. In fact, 62 of the top 500 supercomputers use co-processors, up from 58 systems in June.

Chipmakers are taking note too.

Three big players revealed ultra-high-performance accelerators in November: NVIDIA’s Tesla K20X GPU (utilized in Titan), Intel’s Xeon Phi co-processor (Knights Corner), and AMD’s FirePro S10000 GPU.

Supercomputers aren’t just getting speedier. They’re getting more speed per unit of power and space too. Maybe in the not too terribly distant future, we’ll remember today’s giant, power-hungry machines with an incredulous shake of the head, much as we now look back on those old room-sized Crays, whose computing power fits into a laptop.

Jason is editorial director at SingularityHub. He researched and wrote about finance and economics before moving on to science and technology. He's curious about pretty much everything, but especially loves learning about and sharing big ideas and advances in artificial intelligence, computing, robotics, biotech, neuroscience, and space.
