One of the hottest areas for tech development in the last few years has been gesture control, which allows users to interact with computers without having to touch any inputs like a keyboard or a mouse. Undoubtedly inspired both by Microsoft Kinect and the film Minority Report, a host of devices and approaches have steadily been announced that promise to break people free from being physically ‘wired’ to their computers.

Now, a company started by three University of Waterloo engineering graduates brings yet another device to the table for your consideration: an armband called MYO.

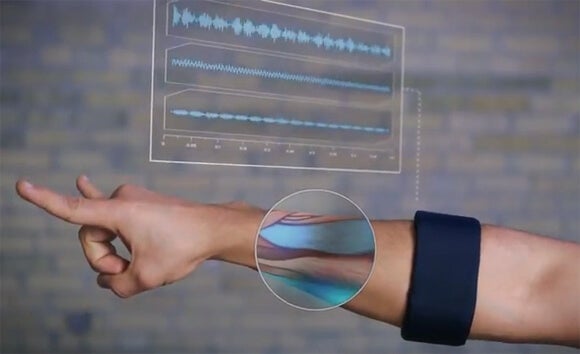

Unlike other gesture control devices that rely on positioning through camera tracking, MYO senses electrical activity in the muscles that control hand and finger motions. The armband is worn on the forearm, and flexing various muscles produces the gesture inputs. These inputs are transmitted via Bluetooth and then translated into motions on the screen by machine-learning algorithms.
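To make the idea of turning muscle signals into gestures a little more concrete, here is a minimal Python sketch. It is not Thalmic Labs' method, which is not detailed here: the two-channel readings, the gesture names, and the choice of a nearest-centroid classifier over per-channel signal strength are all assumptions made purely for illustration.

```python
import math

def rms_features(window):
    """Return one root-mean-square amplitude per sensor channel.

    `window` is a list of samples; each sample is a list of per-channel readings.
    """
    n_channels = len(window[0])
    return [
        math.sqrt(sum(sample[ch] ** 2 for sample in window) / len(window))
        for ch in range(n_channels)
    ]

def train_centroids(labelled_windows):
    """Average the RMS feature vectors recorded for each gesture label."""
    sums, counts = {}, {}
    for label, window in labelled_windows:
        feats = rms_features(window)
        if label not in sums:
            sums[label] = [0.0] * len(feats)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], feats)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}

def classify(window, centroids):
    """Pick the gesture whose average feature vector is closest to this window."""
    feats = rms_features(window)
    return min(centroids, key=lambda label: math.dist(feats, centroids[label]))

# Hypothetical training data: two made-up muscle-sensor channels per sample.
TRAINING = [
    ("fist", [[0.9, 0.1], [-0.8, 0.1], [0.9, -0.1], [-0.9, 0.1]]),
    ("wave", [[0.1, 0.8], [-0.1, -0.9], [0.1, 0.85], [-0.1, -0.8]]),
    ("rest", [[0.02, -0.03], [-0.01, 0.02], [0.03, 0.01], [-0.02, -0.02]]),
]

centroids = train_centroids(TRAINING)
print(classify([[0.7, 0.05], [-0.75, -0.1]], centroids))  # fist
```

A real armband would need many more channels, noise filtering, and per-user calibration, but the shape of the problem is the same: summarize a short burst of muscle activity, then match it to the closest known gesture.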

Thalmic Labs, the Y Combinator-backed startup bringing MYO to the world, has set a preorder price of $149 for the device and has produced a cool video demonstrating what gesture control with the device is like.

Thalmic Labs has stated that MYO will be available in late 2013 and is courting developers to use its open API.

The armband is entering a market that has seen a slew of activity lately, but it has already made a big splash, capturing a lot of media attention. In fact, the last gesture control device to stir this kind of excitement was the Leap Motion controller, which uses infrared cameras to allow for incredibly precise gesture control within a short distance of a computer. The Leap controller is scheduled to ship its $70 preorders in May and will reportedly be available at retail stores like Best Buy.

Compared to the Leap Motion, the MYO armband allows users to control a computer at distances greater than a few feet. Furthermore, because it detects motion within the arm, control can be wielded during a variety of tasks, whether the user is facing the computer or not, as demonstrated in the video. At the same time, MYO has to be worn directly on the body, which might cause discomfort for some people. Additionally, fidgety users will have to learn that little motions like finger tapping or tics can be interpreted as inputs. The Leap controller’s proximity requirement makes it more akin to keyboards and mice in that all are opt-in devices, whereas an always-on controller like MYO means the user has to be more cognizant of being engaged with the computer.

MYO looks incredibly cool and puts to shame recent efforts by Microsoft researchers to develop a wrist-worn finger-tracking device that relies on cameras. Like a wristwatch, the armband would likely go unnoticed once a user acclimates to it. It is also less of an impediment than other gesture control devices like the Acceleglove from a few years ago.

Gesture control promises a revolution in the way we interact with computers, much in the same way that touch computing has. But that doesn’t necessarily mean that gesture control will be a cure-all for computer interaction. Even though touch computing has been incredibly popular, it has its limitations for repeated use. Touch computing has taken off because of handheld mobile devices like smartphones and tablets, but touch on desktops is known to cause users discomfort over long periods of time (it’s called ‘gorilla arm’).

New devices like Google Glass are seeking a means to overcome some of the limitations that touch imposes, like having to disengage from what you are doing in order to interact with the computer. Additionally, Google has been busy developing Voice Search as the ultimate in touchless computer control.

Just as carpal tunnel syndrome is often linked to poor ergonomics when using a keyboard and mouse for extended periods of time, new input methods like touch computing and gesture control will have their issues. The resolution to these issues is already known: users must find uses that are natural and comfortable.

A few years from now, it isn’t hard to imagine people wearing Google Glass or a related headset, using a MYO armband to control the headset and other computers in the area, and using a Leap Motion with the computer at their workstation. Then again, a whole new set of input devices currently being imagined by ambitious entrepreneurs could be right around the corner.

What all this means is that computer interaction is evolving, and each device is its own experiment in shaping the user’s experience. The success of each device will be measured only by its longevity. After all, the keyboard and mouse aren’t just inputs we got stuck with, but devices that have worked successfully for people around the world and have been a huge part of the digital revolution.

MYO goes to show that 2013 really is turning out to be the dawn of wearable computing.