This Robot’s Been Programmed to Look You in the Eye at Just the Right Times


In design, a robot or animated character tends to evoke an increasingly positive emotional response as it more closely approximates human characteristics—but too close, and things go terribly wrong. It’s known as the uncanny valley, where near but imperfect approximations of human qualities evoke revulsion.

Why, for example, do we love Wall-E the trash compacting robot but get a little creeped out by the more closely human Repliee Q2 android pictured below?

Explanatory theories abound—fear of death, mate selection, avoidance of pathogens. Whatever the cause, the uncanny valley is a major hurdle designers of human robots and avatars have sought to overcome for years. And it isn’t just physical traits. Inappropriate behavioral cues can make for creepy creations too.

Eye contact, for example, is crucial, but only in just the right dose. Maintaining gaze builds trust, but it’s almost as important to avert your gaze at just the right times.

Last year, in a University of Wisconsin-Madison paper, Sean Andrist, Michael Gleicher, and Bilge Mutlu studied people in conversation and used the data to build gaze regulation software for computer personalities and robots.

It isn’t the only such study, but while previous studies focused on eye contact, Andrist's team studied gaze aversion (looking away). And they hoped to more rigorously quantify their findings specifically for use in behavioral software.

Although gaze aversion can be associated with negative traits, such as untrustworthiness, it also buys time to think, signals that thought and effort are going into a response, adjusts intimacy, and regulates turn-taking.

In the first instance, people look away before speaking to indicate they are considering a question. They use a similar tactic mid-conversation to indicate a pause ought not be interrupted—that is, that they intend to continue speaking.

People also look away during a conversation to make the other person feel at ease. Too much eye contact feels invasive or provocative; too little feels evasive. Just the right amount, depending on the relationship, results in an easy flow.

Finally, eye contact can request the floor to speak at the beginning of speech and yield the floor at the end; gaze aversion while speaking, however, holds the floor.

Andrist and his team used these general guidelines to group, label, and time gaze aversion in 24 video conversations.

They then built a program based on their findings to regulate the eye contact of four virtual agents (avatars representing the computer), and studied how 24 new participants interacted with the agents in one of three states—good timing, bad timing, and static gaze—to see how gaze aversion affected the interaction.

After asking a question, people tended to yield the floor to an unresponsive agent far longer if it appropriately averted its gaze. That is, instead of believing, perhaps, that the agent was malfunctioning and interrupting, they treated it more like a human—giving it time to “think” about its response.

Similarly, agents that averted their gaze appropriately during a pause in speech were yielded the floor more often than agents that averted their gaze at the wrong times or maintained a static gaze. (The researchers note this finding was only partially supported: appropriate gaze aversion resulted in only slightly less interruption.)

Perhaps most interesting of all, however, the agents successfully regulated the intimacy of the encounter. That is, an agent employing appropriate gaze aversion more effectively put participants at ease, and they tended to open up more.

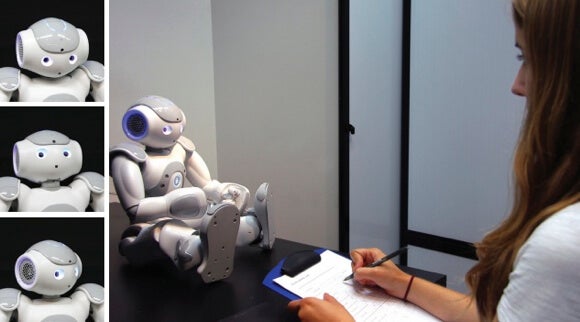

In a follow-up study released this year, Andrist and his team loaded their gaze-regulating software onto a humanoid NAO robot and ran tests similar to those with the virtual agents. The robot was likewise better able to claim the floor before answering and to hold the floor when talking.

Unlike the virtual agents, the robot did not elicit greater disclosure—that is, it didn't appear to noticeably regulate intimacy. Participants did, however, believe the robot appeared more thoughtful. There was no such effect in the test with virtual agents.

In general, however, the researchers believe more research is needed. The current version of their gaze regulation software is static, but eye contact is dynamic: what is appropriate can change throughout a conversation. And their data show that getting the timing wrong can harm the interaction.

As it's refined, such behavioral software may well find good use in the real world.

People don’t currently interact with computer avatars (with no human behind them) on a screen all that often. But we can imagine such interactions increasing in the future as algorithms take on customer service roles.

Further, CGI is beginning to climb out of the uncanny valley physically too. Compare, for example, the detailed but corpse-like characters in the animated film The Polar Express with the impressive CGI Audrey Hepburn who starred in a Galaxy chocolate commercial last year.

Now, simply add a behaviorally correct, autonomous program and you might find yourself having a very intriguing, lifelike interaction with a computer personality.

And while, physically, robots are far from equaling Lieutenant Commander Data in looks, perhaps as behavioral designs improve, people will become increasingly comfortable with bots too.

Image Credit: University of Wisconsin-Madison (Sean Andrist, Xiang Zhi Tan, Michael Gleicher, and Bilge Mutlu); Galaxy Chocolate/YouTube

Jason is editorial director at SingularityHub. He researched and wrote about finance and economics before moving on to science and technology. He's curious about pretty much everything, but especially loves learning about and sharing big ideas and advances in artificial intelligence, computing, robotics, biotech, neuroscience, and space.
