Have you ever been in a situation where knowing another language would have come in handy?

I remember standing on the platform at Tokyo Station watching my train to Nagano — the last train of the day — pulling away without me on it. What ensued was a frustrating hour of gestures, confused smiles, and head-shaking as I wandered the station looking for someone who spoke English (my Japanese is unfortunately nonexistent). It would have been really helpful to have a bilingual pal along with me to translate.

Bilingual pals can be hard to find, but Google’s new translation software may be an equally useful alternative. In a paper released last week, the authors noted that Google’s Neural Machine Translation system (GNMT) reduced translation errors by an average of 60% compared to Google’s phrase-based system. GNMT uses deep learning, a technology loosely modeled on the way the human brain processes information.

From biological brain to artificial brain

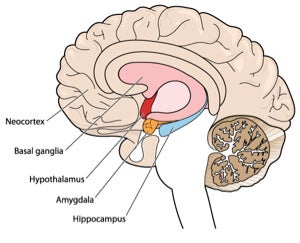

Deep learning software is inspired by the structure of the brain’s neocortex, the grooved upper layer of the brain that’s in charge of complex mental functions like sensory perception, spatial reasoning, and language.

The neocortex makes up about three-fourths of the brain and is itself made up of six layers. The layers contain ten to fourteen billion neurons which vary in shape, size, and density from layer to layer.

Deep learning software simulates this layered structure with an artificial neural network that enables computers to learn to solve problems through examples. To do this, a program maps out a set of virtual neurons, then assigns random numerical values, called weights, to the connections between them. These weights determine how each simulated neuron responds to the digitized features of its input.
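To make the idea of weights concrete, here is a minimal sketch of one layer of simulated neurons, assuming plain Python lists rather than a real deep learning library. The function names and layer sizes are hypothetical; each neuron gets one random weight per incoming connection, and its response is a weighted sum of the input features squashed into the 0 to 1 range.

```python
import math
import random

def sigmoid(z):
    """Squash a weighted sum into a 0..1 'firing strength'."""
    return 1.0 / (1.0 + math.exp(-z))

def make_layer(n_inputs, n_neurons, seed=0):
    """Create one layer: each neuron gets one random weight per input connection."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_neurons)]

def layer_response(weights, features):
    """Each neuron responds to the weighted sum of the digitized input features."""
    return [sigmoid(sum(w * x for w, x in zip(neuron, features)))
            for neuron in weights]

# Hypothetical example: 3 digitized features (e.g. pixel brightness) feeding 2 neurons
weights = make_layer(n_inputs=3, n_neurons=2)
print(layer_response(weights, [0.5, 0.1, 0.9]))
```

Because the weights start out random, these responses are meaningless until the network is trained.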

Programmers can then train this network by feeding it digitized versions of images or sound waves. The goal is for the system to learn to recognize patterns in the data. When the network doesn’t recognize a particular pattern, an algorithm adjusts its weights so that its next guess comes closer to the right answer.
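One common way to "adjust the weights" is gradient descent. The sketch below trains a single simulated neuron on a toy pattern (logical OR: output 1 if either input is on). The example data, learning rate, and function names are hypothetical, and real networks apply the same idea across millions of weights at once.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, features):
    """The neuron's output for one example, between 0 and 1."""
    return sigmoid(sum(w * x for w, x in zip(weights, features)) + bias)

def train_neuron(examples, epochs=2000, lr=0.5, seed=0):
    """Start from random weights and nudge them after every example."""
    rng = random.Random(seed)
    weights = [rng.uniform(-1.0, 1.0) for _ in range(len(examples[0][0]))]
    bias = 0.0
    for _ in range(epochs):
        for features, target in examples:
            error = predict(weights, bias, features) - target  # how far off the guess was
            # Move each weight against the error (cross-entropy gradient for a sigmoid neuron)
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias

# Toy pattern to recognize: logical OR
or_examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
w, b = train_neuron(or_examples)
for features, target in or_examples:
    print(features, target, round(predict(w, b, features), 2))
```

The bigger the mistake on an example, the larger the adjustment, so the weights gradually settle on values that reproduce the pattern.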

Once the first layer of artificial neurons accurately recognizes basic features, those features serve as the input to the next layer of neurons, which trains itself to recognize more complex features. The process is repeated in successive layers until the system can reliably recognize sounds or objects.

For example, after the first neuron layer recognizes individual units of sound, those units of sound can flow to the second layer of neurons and be combined to recognize words. Words then flow to the third layer to be put together as phrases, which flow to the fourth to become sentences, and so on.
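The flow from layer to layer can be sketched as below, assuming small, untrained layers with random weights; the sizes and the "sound features" labels are hypothetical. The sketch shows only how each layer's output becomes the next layer's input, not the layer-by-layer training itself.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def make_layer(n_inputs, n_neurons, rng):
    """One layer of random (untrained) connection weights."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
            for _ in range(n_neurons)]

def forward(layers, features):
    """Pass features through every layer; each layer's output feeds the next."""
    trace = []
    activations = features
    for weights in layers:
        activations = [sigmoid(sum(w * x for w, x in zip(neuron, activations)))
                       for neuron in weights]
        trace.append(activations)
    return trace

rng = random.Random(42)
# Hypothetical sizes: 8 low-level sound features -> 6 -> 4 -> 2 higher-level features
sizes = [8, 6, 4, 2]
layers = [make_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
for depth, acts in enumerate(forward(layers, [0.1] * 8), start=1):
    print(f"layer {depth}: {len(acts)} features")
```

Each successive layer works with fewer, more abstract features, mirroring the sounds-to-words-to-phrases progression described above.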

Artificial neural networks rely on big sets of training data and powerful computers — both of which are becoming increasingly available. In recent years, deep learning has been focused on improving image and speech recognition software, and using the technique for language translation is a logical progression.

Why it’s better

Google’s deep learning translation method uses 16 processors to transform words into a list of numbers called a vector. The vector captures how closely a word is related to every other word in the vocabulary drawn from the training material. That material is enormous: 2.5 billion sentence pairs for English and French, and 500 million for English and Chinese.

For example, “shoe” is more closely related to “sock” than “pizza,” and the name “Barack Obama” is more closely related to “George Bush” than a country name like “Argentina.”
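A common way to measure how related two word vectors are is cosine similarity, which compares the directions the vectors point in. The sketch below uses three hand-made, hypothetical 3-number vectors; real systems learn vectors with hundreds of numbers from data, and this is not necessarily the exact measure GNMT uses internally.

```python
import math

# Hypothetical hand-made vectors; real ones are learned from training data
toy_vectors = {
    "shoe":  [0.9, 0.1, 0.0],
    "sock":  [0.8, 0.2, 0.1],
    "pizza": [0.1, 0.9, 0.3],
}

def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_related(word, vectors):
    """Return the other word whose vector is most similar to this one."""
    others = [w for w in vectors if w != word]
    return max(others, key=lambda w: cosine_similarity(vectors[word], vectors[w]))

print(most_related("shoe", toy_vectors))  # sock
```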

Using vectors from the input language, the system comes up with a list of possible translations that are ranked based on their probability of occurrence. Cross-checks help increase accuracy.
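Ranking candidates by probability can be sketched as follows, assuming the model has already assigned a probability to each word of each candidate. The candidate sentences and probabilities here are invented for illustration. Multiplying many small probabilities quickly underflows, so the sketch adds their logarithms instead, which ranks candidates the same way.

```python
import math

# Hypothetical per-word probabilities a model might assign to three candidate outputs
candidates = {
    "the cat sits on the mat": [0.9, 0.8, 0.7, 0.9, 0.95, 0.85],
    "the cat is sitting on the mat": [0.9, 0.8, 0.5, 0.6, 0.9, 0.95, 0.85],
    "a cat sits on mat": [0.4, 0.8, 0.7, 0.9, 0.3],
}

def log_prob(word_probs):
    """Sum of log probabilities = log of the whole sentence's probability."""
    return sum(math.log(p) for p in word_probs)

def rank(candidates):
    """Most probable candidate first."""
    return sorted(candidates, key=lambda c: log_prob(candidates[c]), reverse=True)

for sentence in rank(candidates):
    print(f"{log_prob(candidates[sentence]):.2f}  {sentence}")
```

Real translation systems typically build candidates word by word with a beam search and adjust scores so that longer sentences are not unfairly penalized; this sketch shows only the final ranking step.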

The craziest part about the translator’s improved accuracy is this: it happened because Google’s researchers made their neural-network-powered system more independent of its human designers.

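Programmers feed in the initial inputs, example sentences paired with their translations, but the computer takes over from there and uses the hierarchical neural network to train itself. Strictly speaking this is still supervised learning, since every training sentence comes with a reference translation. The difference is that the network works out its own internal representation of each language instead of following rules and components hand-built by engineers, and this end-to-end approach has proven more successful than the older phrase-based system.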

In other words, computers are catching up with people. In an evaluation that tested the new software against human translators, it came close to matching human fluency for some languages. People fluent in two languages rated the new system between 64 and 87 percent better than the previous one.

Google is already using the new system for Chinese to English translation, and plans to completely replace its existing translation technique with GNMT.

We’re still good for something

It’s worth noting that while deep learning software can accurately translate sentences from one language to another, there are still many nuances of language that only a human brain can grasp.

Every language has its slang and colloquialisms, and most of these reflect deeper truths about a culture. Trying to translate idioms can yield some pretty hilarious results. There are terms that exist in one language but not another, necessitating an explanation rather than a translation.

One of my favorite examples of this is the Japanese term arigata-meiwaku.

The short translation is “unwelcome kindness,” but to really understand the expression you might want the full explanation. Arigata-meiwaku refers to an act someone does for you that you didn’t want them to do and tried to avoid having them do, but they went ahead and did it anyway because they were determined to do you a favor, then the favor went wrong and caused you a lot of trouble, but in the end, social conventions nonetheless required you to express gratitude.

Even if Google’s translator manages to spit out an accurate version of that mouthful, it won’t be able to replace a human’s understanding of why this term exists in Japanese and not English.

The best part of a language is its quirks

The vocabulary and structure of languages contain fascinating reflections of societies’ cultures and values. Japanese culture, for example, places a stronger emphasis on propriety and saving face than Western cultures do.

An American, finding himself in the situation described above, would probably just say, “Hey, I really wish you hadn’t done me that favor.” There’s certainly no need in American English for a single-word equivalent of arigata-meiwaku.

All languages have their own set of hard-to-translate peculiarities. Thai has a separate vocabulary for speaking to or about the king, for example, and Spanish has two different ways to say “I love you” (te quiero and te amo): using the wrong one might get you in trouble with your significant other.

Quirks like these mean that even if a computer can translate for us, studying foreign languages will continue to be a fascinating and important pursuit. It’ll give you a good laugh now and then, too.

All things considered, though, the next time you miss a train in a foreign country you probably wouldn’t say no to having Google’s translator in your pocket to help bridge the gap.

Image credit: Shutterstock