This Smart Vest Lets the Deaf ‘Hear’ With Their Skin

What are the limits of human perception?

Take a second and concentrate on your surroundings: the subtle flickering of your laptop screen, the faint whiff of lingering coffee, the muffled sounds of traffic, the warm touch of sunlight peeking through your window.

We owe our understanding of the world to our various senses. Yet what we naturally perceive is only a sliver of the physical world. The eerie beauty of infrared is beyond our grasp, as are the air compression waves that bats use for navigation, or the electromagnetic fields that constantly course through our bodies.

“Your senses limit your reality,” said Stanford neuroscientist Dr. David Eagleman at the TED conference last year in Vancouver, British Columbia.

We are slaves to our senses, and when we lose one, we also lose our ability to perceive that fraction of the world. Take hearing, for example. Although cochlear implants somewhat restore sound perception as an inner ear replacement, they’re pricey, surgically invasive and very clunky. They also don’t work very well for congenitally deaf people when implanted later in life.

According to Eagleman, replacing faulty biological sensory hardware is too limited in scope.

What if, instead of trying to replace a lost sense, we could redirect it to another sense? What if, instead of listening, we could understand the auditory world by feeling it on our skin? And what if, using the same principles, we could add another channel to our sensory perception and broaden our reality?

Our “Mr. Potato Head” Brain

Eagleman’s ideas aren’t as crazy as they sound.

Our brain is locked in a sensory vacuum. Rather than vision, smell, touch or sound, it only understands the language of electrochemical signals that come in through different “cables.” In essence, our valued peripheral organs are nothing but specialized sensors, translating various kinds of external input (photons and sound waves, for example) into electricity that feeds into the brain.

“Your brain doesn’t know and it doesn’t care where it gets the data from,” says Eagleman. Your ear could be a microphone, your eye a digital camera, and the brain can still learn to interpret those signals. That’s why cochlear and retinal implants work.

In other words, when it comes to human perception, the brain is basically a plug-and-play general-purpose computer. Our current biological configuration works, but we’re not necessarily stuck with it.

The best evidence for this “Mr. Potato Head” theory of sensory perception, as Eagleman dubs it, is a phenomenon called sensory substitution.

Back in the late 1960s, pioneering neuroscientist Dr. Paul Bach-y-Rita developed a dental chair with an array of pushpins in its back and invited blind participants to sit in it while “looking” at objects placed in front of them. The chair was connected to a video camera feed that captured the objects and translated the images into a series of vibrating patterns on the participants’ lower back.

After several sessions, the blind participants started developing a “visual” intuition of what was in front of the camera based on the vibrations that they felt. In a way, they began “seeing” the world using the skin on their back.

A modern incarnation of the pushpin dental chair is the sleek BrainPort, which sits on a blind person’s tongue and translates information from a wearable digital camera (similar in looks to Cyclops' visor) into patterns of electrical stimulation on the tongue.

With time, users are able to interpret the “bubble-like” patterns as the shape, size, location and motion of objects around them. They get so good that they can navigate through an obstacle course.

“I do not see images as if I were sighted, but if I look at a soccer ball I feel a round solid disk on my tongue,” says one user, “the stimulation…works very much like pixels on a visual screen.”

Then there’s the case of Daniel Kish, a blind man who had his eyes removed at 13 months old due to retinal cancer. Kish subsequently taught himself echolocation: basically, using his ears to “see.” He got so good that he could take hikes and ride bikes, and appreciate the visual beauty of the world using nothing but a series of clicking sounds.

Kish is hardly the only one who has rewired sight to sound. And “rewiring” here is quite literal. When scientists looked into the brains of blind people who learned to echolocate, they found that parts of their visual cortex were activated. Surprisingly, brain areas normally used to process sound stayed silent. In other words, the brain had learned to expect sound waves, rather than photons, as the source of data input to neural cables that lead into regions normally reserved for vision.

A VEST for the deaf

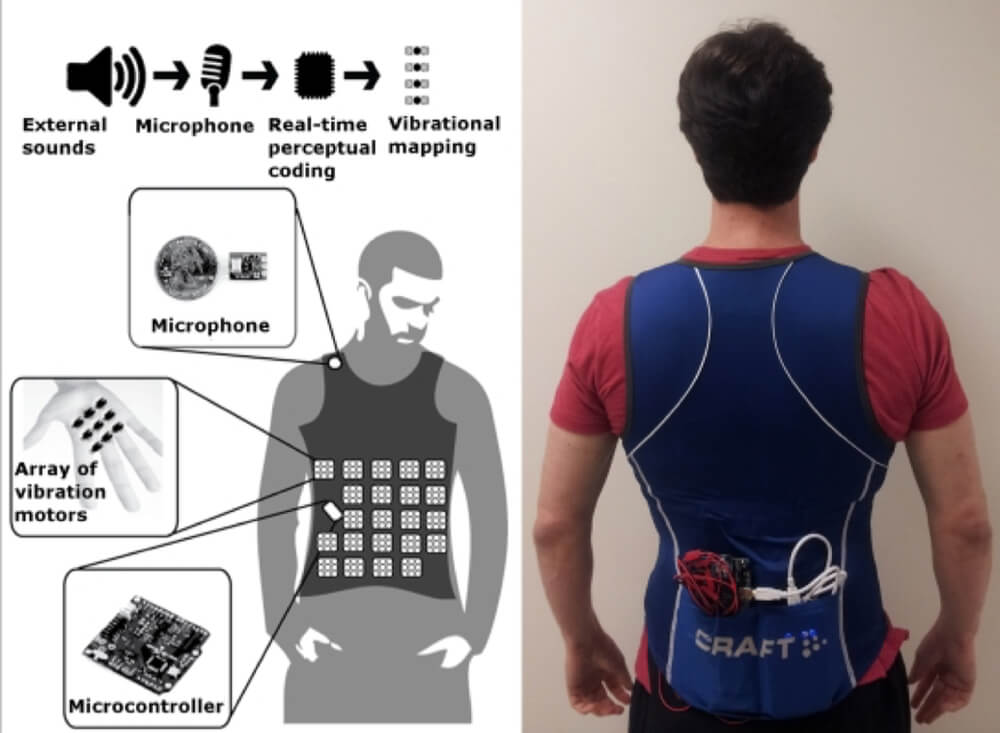

Eagleman wants to push sensory substitution into the field of hearing. With graduate student Scott Novich and students at Rice University, his team developed a VEST — Versatile Extra-Sensory Transducer — that, true to its name, a deaf person wears to “feel” speech.

It works like this.

Sounds are picked up by microphones embedded in portable computing devices like smartphones or tablets, and an app compresses the data in real time into a computationally manageable bitrate (without losing too much quality) and sends it to the vest via Bluetooth. The vest then translates that data into a series of intricate vibrating patterns that differ based on the frequencies of the sound source.
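For readers who think in code, the frequency-to-vibration step might be sketched roughly as follows. This is only an illustration, not the team's actual software: the sample rate, frame size, motor count and all function names are assumptions, and the compression and Bluetooth stages are left out. Each short audio frame is split into frequency bands, and each band sets the strength of one hypothetical motor.

```python
import cmath
import math

SAMPLE_RATE = 8000   # Hz; assumed for illustration
FRAME_SIZE = 64      # samples per frame; assumed
NUM_MOTORS = 8       # hypothetical number of vibration motors


def make_tone(freq_hz, n=FRAME_SIZE, rate=SAMPLE_RATE):
    """Generate one frame of a pure sine tone as stand-in microphone input."""
    return [math.sin(2 * math.pi * freq_hz * i / rate) for i in range(n)]


def band_energies(frame, num_bands=NUM_MOTORS):
    """Sum DFT magnitudes of one frame into equal-width frequency bands."""
    n = len(frame)
    # For real input, bins above n/2 mirror the lower ones; the Nyquist
    # bin itself is ignored here to keep the band split even.
    half = n // 2
    energies = [0.0] * num_bands
    for k in range(half):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(frame))
        energies[k * num_bands // half] += abs(coeff)
    return energies


def motor_levels(frame, num_bands=NUM_MOTORS):
    """Scale band energies to 0-255 drive levels, one per motor."""
    energies = band_energies(frame, num_bands)
    peak = max(energies)
    if peak < 1e-9:  # silence: leave every motor off
        return [0] * num_bands
    return [round(255 * e / peak) for e in energies]
```

With these assumed settings, `motor_levels(make_tone(500))` drives the second motor hardest, while a 3,000 Hz tone drives the seventh. Different pitches land on different patches of skin, which is the basic idea the vest relies on.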

Image credit: Scott Novich and David Eagleman

With training, the deaf can begin to understand our sonic world through tactile sensation from the vest. The project is still ongoing, but so far, the results are promising. After a mere four days of training with the vest, one 37-year-old congenitally deaf man was able to translate the buzzing on his back into words that were said by an experimenter sitting next to him.

Incredibly, the translations happened without any conscious effort on the subject’s part. The vibrating patterns were far too complex for him to process consciously; they represent sound frequencies, not a code for letters or words. In other words, he was feeling representations of the sounds, and extracting meaning from them.

It’s a hard concept to wrap your head around. But our natural hearing works very much the same way. Different sound frequencies (pitches) stimulate specific hair cells that reside in the inner ear, which then convert sound waves into electrical firings that go to the brain. Think piano keys wired to their respective strings. With VEST, the skin takes the role of those hair cells.

We expect users to gain a direct perceptual experience of hearing after about three months of using VEST, says Eagleman. Like normal hearing, the meaning of speech should just pop out without passing through the conscious mind.

A “sense” that expands our reality?

The team doesn’t yet understand how VEST is changing the neurobiology of the brain, but they hope to find out using functional imaging studies that track the brains of participants as they get increasingly proficient with the device. Whether VEST can help with higher-level appreciation of our sonic world, such as music enjoyment, also remains to be seen.

In the meantime, the team has begun pondering a more philosophical question: if a device like VEST could replace a foregone sense, could we use it to add to our perception of the world?

Eagleman’s ideas get pretty wild.

We could stream stock market data in real time through VEST, and train ourselves to “feel” the economic pulse of the world, says Eagleman. Astronauts could “sense” the status of the International Space Station by converting different gauges and measures into distinct vibrating patterns. Pilots of drones could intuit the pitch, yaw, orientation and heading of their quadcopter, and potentially improve their flying ability.

Without doubt, this all sounds like complete science fiction. But so did cochlear and retinal implants back in the day. The hypothesis that underlies these ideas — that the brain could come up with ways to understand new sources of data — seems very possible. The question is how far we can biologically push the “general computing device” buzzing away inside our heads.

Eagleman eloquently sums it up: “As we move into the future, we're going to increasingly be able to choose our own peripheral devices…So the question now is, how do you want to go out and experience your universe?”

Image credit: TED/YouTube

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
