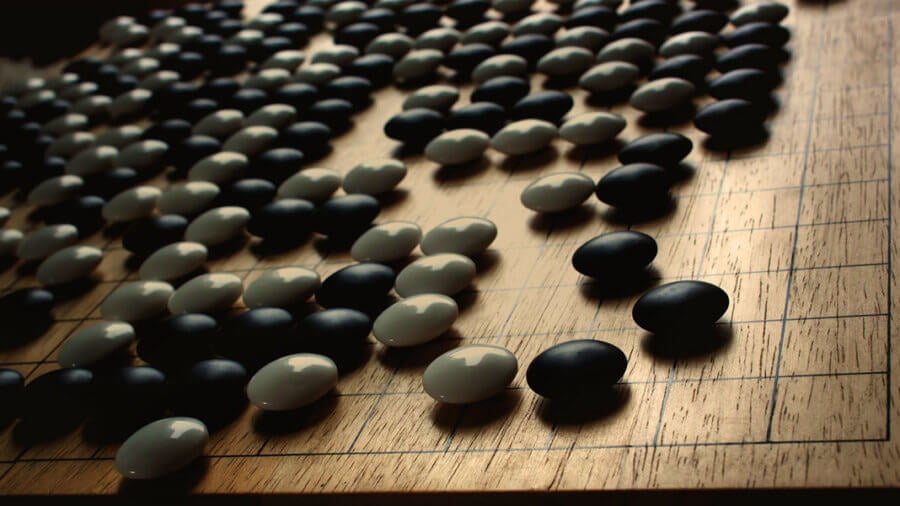

It’s the end of an era in AI research. For decades complex board games like Go, chess, and shogi have been seen as the leading yardstick for machine intelligence. DeepMind’s latest program can master all three by simply playing itself, suggesting we need to set bigger challenges for AI.

Machines first started chipping away at humanity’s intellectual dominance back in 1997, when IBM’s Deep Blue beat chess grandmaster Garry Kasparov. In the first half of this decade, masters of Japan’s harder chess variant shogi started falling to computers. And in 2016 DeepMind shocked the world when its AlphaGo program defeated one of the world’s top-ranked masters of Go, widely considered the most complicated board game in the world.

As impressive as all these feats were, game-playing AIs typically exploit the properties of a single game and often rely on hand-crafted knowledge coded into them by developers. But DeepMind’s latest creation, AlphaZero, detailed in a new paper in Science, was built from the bottom up to be game-agnostic.

All it was given was the rules of each game, and it then played itself thousands of times, effectively using trial and error to work out the best tactics for each game. It was then pitted against the most powerful specialized AI for each game, including its predecessor AlphaGo, beating them comprehensively.

“This work has, in effect, closed a multi-decade chapter in AI research. AI researchers need to look to a new generation of games to provide the next set of challenges,” IBM computer scientist Murray Campbell, who has worked on chess-playing computers, wrote in an opinion piece for Science.

The generality of AlphaZero is important. While learning to master the most complex board games in the world is impressive, their tightly controlled environments are a far cry from the real world. The reinforcement learning approach that DeepMind has championed has achieved impressive results in Go, video games, and simulators, but practical applications have been harder to come by.

By making these programs more general, the company hopes they can break out of the confines of these rigid environments and tackle real-world challenges. Last week brought evidence that the transition may have begun: one of its algorithms won a competition for predicting how proteins fold, a complex problem at the heart of many biological processes and, perhaps more importantly, drug design.

One of the most impressive features of the new program is the efficiency with which it searches for the ideal move. The researchers used Monte Carlo tree search, which has long been the standard for Go-playing machines but is generally thought to be inappropriate for chess or shogi.

But the switch allowed the program to focus on the most promising potential positions rather than employing the brute-force approach of its opponents. It assessed just 60,000 potential positions per second in both chess and shogi, compared with 60 million for its chess-playing opponent and 25 million for its shogi-playing opponent.
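To give a flavor of how Monte Carlo tree search concentrates effort on promising positions, here is a minimal sketch in Python. It is not AlphaZero's code: it uses the plain textbook variant (UCT with random playouts), whereas AlphaZero's search is guided by a learned neural network. The toy game (a pile of stones, each player takes 1 to 3, whoever takes the last stone wins) and all names such as `Node`, `rollout`, and `mcts` are assumptions made purely for illustration.

```python
import math
import random


class Node:
    """One position in the search tree of the toy stone-taking game."""

    def __init__(self, stones, parent=None, move=None):
        self.stones = stones      # stones left for the player about to move
        self.parent = parent
        self.move = move          # move that led from the parent to this node
        self.children = []
        self.untried = [m for m in (1, 2, 3) if m <= stones]
        self.visits = 0
        self.wins = 0.0           # wins for the player who moved INTO this node


def uct_child(node, c=1.4):
    """Pick the child balancing a high win rate against few visits (UCT)."""
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def rollout(stones, rng):
    """Play random moves; return True if the player to move at the start wins."""
    player = 0
    while stones > 0:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return player == 0
        player ^= 1
    return False  # no stones left at the start: the player to move already lost


def mcts(root_stones, iterations=2000, seed=0):
    """Return the move at the root that the search visited most often."""
    rng = random.Random(seed)
    root = Node(root_stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down through fully expanded nodes.
        while not node.untried and node.children:
            node = uct_child(node)
        # 2. Expansion: add one new child if any move is still untried.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: a random playout from the new position.
        mover_wins = rollout(node.stones, rng)
        # 4. Backpropagation: flip the result at each level, since the
        #    players alternate.
        result = 0.0 if mover_wins else 1.0
        while node is not None:
            node.visits += 1
            node.wins += result
            result = 1.0 - result
            node = node.parent
    return max(root.children, key=lambda ch: ch.visits).move
```

Calling `mcts(5)` asks the search for a move from a five-stone pile. Because each iteration is steered toward branches that have done well so far, most of the simulations end up spent on a handful of lines of play rather than spread evenly over every possibility, which is the contrast with brute-force search described above.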

Nonetheless, all three are “perfect information games,” in which each player always has complete visibility of the game state, unlike card games such as poker, in which players don’t know their opponents’ hands. Very few real-world situations have perfect information, so building the capacity to deal with uncertainty into these systems will be a crucial next step.

That’s driving growing interest among AI researchers in multiplayer video games like StarCraft II and Dota 2. These games are incredibly open-ended, give players very limited visibility of their opponents’ actions, and require long-term strategic planning. And so far, AI programs have yet to beat their human counterparts.

One final aspect worth noting about this research is the enormous amount of computing power that went into it. AlphaZero took just 9 hours to master chess, 12 hours for shogi, and 13 days for Go. But this was achieved using 5,000 TPUs—Google’s specialized deep learning processors.

That’s a colossal amount of computing power, well out of the reach of pretty much anyone other than Silicon Valley’s behemoths. And as New York University computer scientist Julian Togelius noted to New Scientist, the system is only general in its capacity to learn. The program trained on shogi can’t play chess, so you need access to such enormous computing resources every time you repurpose it.

This is part of a trend noted by OpenAI researchers earlier this year: the computing resources used to train the programs behind some of the field’s most exciting advances are increasing exponentially. And that’s prompting both excitement and concern.

On the one hand, it raises the question of whether a substantial factor in the tractability of many problems is simply how many chips you can dedicate to them. With companies seemingly willing to dedicate extensive resources to AI, that would suggest a promising future for the field.

But at the same time, it also points to a future of AI haves and have-nots. If computing resources become increasingly integral to making progress in the field, many bright minds outside the largest tech companies could be left behind.

Image Credit: Chill Chillz / Shutterstock.com