Remember when predicting protein shapes using AI was the breakthrough of the year?

That’s old news. Having predicted the structures of nearly every protein known to science, AI is now turning to a new challenge: designing proteins from scratch.

Far from an academic pursuit, the endeavor is a potential game-changer for drug discovery. The ability to draw up protein drugs for any given target in the body, such as the molecules that drive cancer growth and spread, could launch a new universe of medicines to tackle our worst medical foes.

It’s no wonder multiple AI powerhouses are answering the challenge. What’s surprising is that they converged on a similar approach. This year DeepMind, Meta, and Dr. David Baker’s team at the University of Washington all took inspiration from an unlikely source: DALL-E and GPT-3.

These generative algorithms have taken the world by storm. Given just a few simple prompts in everyday English, the programs can produce mind-bending images, paragraphs of creative writing, or film scenes, and even remix the latest fashion designs. The same underlying technology recently took a stab at writing computer code, besting nearly half of human competitors in challenging programming contests.

What does any of that have to do with proteins?

Here’s the thing: proteins are essentially strings of “letters” molded into secondary structures—think sentences—and then 3D “paragraphs.” If AI can generate gorgeous images and clean writing, why not co-opt the technology to rewrite the code of life?
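To make the analogy concrete, here is a minimal Python sketch of how a protein sequence can be turned into tokens, much as a language model splits text into word pieces. The 20 one-letter amino acid codes are standard; the `tokenize` helper and the sample peptide are illustrative assumptions, not any particular model’s vocabulary (real models typically add extra tokens for masking, padding, and unusual residues).

```python
# The 20 standard amino acids, each written as a one-letter code.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

# Map each letter to an integer ID, the way a language model maps words to tokens.
TOKEN_ID = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def tokenize(sequence: str) -> list[int]:
    """Turn a protein sequence (a string of letters) into a list of token IDs."""
    unknown = set(sequence) - set(AMINO_ACIDS)
    if unknown:
        raise ValueError(f"non-standard residues: {sorted(unknown)}")
    return [TOKEN_ID[aa] for aa in sequence]


# A short made-up peptide, used purely for illustration.
print(tokenize("MKTAYIAK"))  # [10, 8, 16, 0, 19, 7, 0, 8]
```

Once a protein is a list of integers, the same machinery that models sequences of words can model sequences of amino acids.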

Here Come the Champions

Proteins are the key to life. They build our bodies. They run our metabolism. They underlie intricate brain functions. They’re also the basis for a wealth of new drugs that could treat some of our most stubborn health problems, and for new sources of biofuels, lab-grown meat, or even entirely novel lifeforms through synthetic biology.

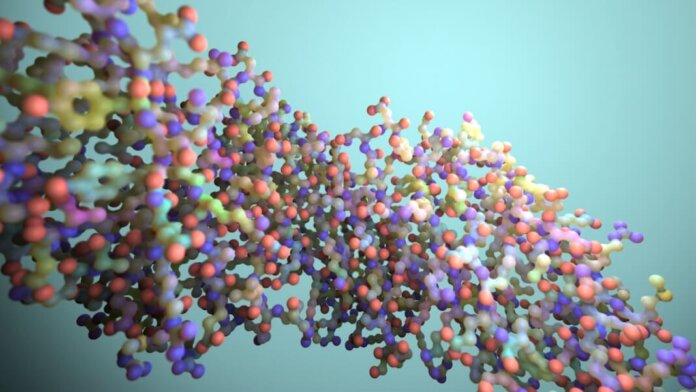

While “protein” often evokes pictures of chicken breasts, these molecules are more like an intricate Lego puzzle. Building a protein starts with a string of amino acids (think of a long strand of Christmas lights), which then folds into a 3D structure, much like rumpling the strand up for storage.

DeepMind and Baker both made waves when they each developed algorithms to predict the structure of any protein from its amino acid sequence. It was no simple endeavor: the predictions mapped structures down to the level of individual atoms.

Designing new proteins raises the complexity to another level. This year Baker’s lab took a stab at it, with one effort using good old screening techniques and another relying on deep learning “hallucinations.” Both approaches are powerful for demystifying natural proteins and generating new ones, but they are hard to scale up.

But wait. Designing a protein is a bit like writing an essay. If GPT-3 and ChatGPT can write sophisticated dialogue using natural language, the same technology could in theory also rejigger the language of proteins—amino acids—to form functional proteins entirely unknown to nature.

AI Creativity Meets Biology

One of the first signs that the trick could work came from Meta.

In a recent preprint, Meta’s team tapped into the transformer architecture underlying DALL-E and ChatGPT to build a large language model (LLM) that helps predict protein structure. Instead of feeding the model vast amounts of text or images, the team trained it on the amino acid sequences of known proteins. Using the model, Meta’s AI predicted over 600 million protein structures by reading their amino acid “letters” alone, including esoteric ones from microorganisms in the soil, ocean water, and our bodies that we know little about.

The trick behind the AI, called ESMFold, is that its underlying language model learned to “autocomplete” protein sequences in which some amino acid letters were hidden. Although not as accurate as DeepMind’s AlphaFold, ESMFold ran up to 60 times faster, making it easier to scale up to larger databases.
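The “autocomplete” idea can be illustrated with a toy masked-residue predictor. This is a deliberately simplified sketch under stated assumptions: the training set is made up, and instead of a neural network, the hypothetical `train` and `autocomplete` functions just count which residue most often sits between a given pair of neighbors. Protein language models learn the same fill-in-the-blank task, but with a transformer that can look at the whole sequence at once.

```python
from collections import Counter, defaultdict

MASK = "_"  # hypothetical placeholder for a hidden amino acid


def train(sequences: list[str]) -> tuple[dict, Counter]:
    """Count which residue appears between each (left, right) pair of neighbors."""
    context_counts = defaultdict(Counter)
    overall = Counter()
    for seq in sequences:
        overall.update(seq)
        for i in range(1, len(seq) - 1):
            context_counts[(seq[i - 1], seq[i + 1])][seq[i]] += 1
    return context_counts, overall


def autocomplete(masked: str, context_counts: dict, overall: Counter) -> str:
    """Fill each masked position with the most likely residue given its neighbors."""
    filled = list(masked)
    for i, ch in enumerate(filled):
        if ch != MASK:
            continue
        left = filled[i - 1] if i > 0 else None
        right = filled[i + 1] if i + 1 < len(filled) else None
        counts = context_counts.get((left, right))
        # Fall back to the most common residue overall if this context was never seen.
        best = counts.most_common(1)[0][0] if counts else overall.most_common(1)[0][0]
        filled[i] = best
    return "".join(filled)


# Tiny made-up training set, for illustration only.
training = ["MKTAYIAK", "MKTAYLAK", "GKTAYIAR"]
context_counts, overall = train(training)
print(autocomplete("MKT_YIAK", context_counts, overall))  # MKTAYIAK
```

A counting model like this only sees immediate neighbors; the appeal of a large language model is that it can pick up long-range patterns across a sequence, which turn out to carry information about how the protein folds.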

Baker’s lab took the protein “autocomplete” idea to a new level in a preprint published earlier this month. If AI can already fill in the blanks when predicting protein structures, a similar principle could also generate new proteins from a prompt: in this case, the desired biological function.

The key came down to diffusion models, a type of machine learning algorithm that powers DALL-E 2. Put simply, these neural networks are especially good at removing noise from data, be it images, text, or protein structures. During training, the data is deliberately destroyed by gradually adding noise, and the model learns to recover the original by reversing the process, a step called denoising. It’s a bit like dismantling a laptop or other electronic device and putting it back together to see how the different components work.
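The noising and denoising steps can be written down in a few lines. Below is a minimal sketch of the standard DDPM-style forward process on a plain list of numbers, assuming a linear noise schedule; it is a generic illustration, not RF Diffusion’s code, which works on 3D protein backbones, and all helper names are hypothetical. In a real system a neural network would supply the predicted noise; here the true noise is passed in to show that denoising exactly inverts the corruption, and `training_loss` is the error a network would be trained to minimize.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule; alpha_bar[t] is the share of signal variance left at step t."""
    alpha_bar, running = [], 1.0
    for t in range(num_steps):
        running *= 1.0 - (beta_start + (beta_end - beta_start) * t / (num_steps - 1))
        alpha_bar.append(running)
    return alpha_bar


def add_noise(x0, t, alpha_bar, rng):
    """Forward process: corrupt clean data x0 straight to noise level t (closed form)."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bar[t]
    xt = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return xt, noise


def recover_x0(xt, predicted_noise, t, alpha_bar):
    """Denoising: undo the corruption, given a prediction of the noise that was added."""
    a = alpha_bar[t]
    return [(x - math.sqrt(1.0 - a) * n) / math.sqrt(a) for x, n in zip(xt, predicted_noise)]


def training_loss(predicted_noise, true_noise):
    """Mean squared error between predicted and true noise (the simple DDPM objective)."""
    return sum((p - n) ** 2 for p, n in zip(predicted_noise, true_noise)) / len(true_noise)


alpha_bar = make_schedule(1000)
rng = random.Random(0)
x0 = [1.0, -0.5, 2.0]  # toy "clean" data
xt, noise = add_noise(x0, 500, alpha_bar, rng)
print(xt)  # x0 partly drowned in noise
# With the true noise, recovery matches x0 up to floating-point rounding.
print(recover_x0(xt, noise, 500, alpha_bar))
```

By the last step of this schedule almost no signal survives, which is why a trained model can later start from pure noise.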

Because diffusion models learn to rebuild data from pure noise (say, an image whose pixels have been replaced by random static), they’re especially effective at generating new images, or proteins, from seemingly random starting points.
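Generation runs the process in reverse: start from pure noise and repeatedly ask the denoiser for its best guess of the clean data. The sketch below uses a deterministic DDIM-style update, one common sampler among several, and assumes a linear noise schedule. Since there is no trained network here, `gaussian_denoiser` is a hypothetical stand-in: the exact best guess if the training data were Gaussian with mean `MU` and spread `S`. Different random seeds yield different new samples, all landing near the “training” distribution.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule; alpha_bar[t] is the share of signal variance left at step t."""
    alpha_bar, running = [], 1.0
    for t in range(num_steps):
        running *= 1.0 - (beta_start + (beta_end - beta_start) * t / (num_steps - 1))
        alpha_bar.append(running)
    return alpha_bar


def gaussian_denoiser(xt, a, mu, s):
    """Hypothetical stand-in for a trained network: the exact best guess of the clean
    sample when the training data are Gaussian with per-coordinate mean mu and std s."""
    gain = math.sqrt(a) * s * s / (a * s * s + 1.0 - a)
    return [m + gain * (x - math.sqrt(a) * m) for x, m in zip(xt, mu)]


def sample(denoise, dim, num_steps=1000, seed=0):
    """Deterministic DDIM-style sampler: turn pure noise into a new sample."""
    rng = random.Random(seed)
    alpha_bar = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from pure noise
    for t in reversed(range(num_steps)):
        a_t = alpha_bar[t]
        a_prev = alpha_bar[t - 1] if t > 0 else 1.0
        x0_hat = denoise(x, a_t)  # best guess of the finished sample
        # Noise implied by the current sample and that guess.
        eps_hat = [(xi - math.sqrt(a_t) * gi) / math.sqrt(1.0 - a_t) for xi, gi in zip(x, x0_hat)]
        # Step to the slightly less noisy level t - 1.
        x = [math.sqrt(a_prev) * gi + math.sqrt(1.0 - a_prev) * ei for gi, ei in zip(x0_hat, eps_hat)]
    return x


MU, S = [1.0, -0.5, 2.0], 0.1  # toy "training data" statistics


def denoise(x, a):
    return gaussian_denoiser(x, a, MU, S)


print(sample(denoise, dim=3, seed=0))  # a new sample near MU
print(sample(denoise, dim=3, seed=1))  # a different one
```

In a real protein model, the denoiser is the expensive, learned part; the sampling loop itself stays about this simple.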

Baker’s lab tapped into the approach by fine-tuning its signature RoseTTAFold structure prediction network. Previously, a version of the software generated protein scaffolds (the backbone of a protein) in a single step. But proteins aren’t uniform blobs: each has multiple hotspots that let them physically latch onto one another, triggering various biological processes. When RoseTTAFold faced tough problems, such as designing protein hotspots from minimal information, it struggled.

The team’s solution was to integrate RoseTTAFold with a diffusion model, with the former handling the denoising step. The resulting algorithm, RoseTTAFold Diffusion (RF Diffusion), is a love child of protein structure prediction and creative generation. The AI designed a wide range of elaborate proteins with little resemblance to any known protein structure, within predefined but biologically relevant constraints.
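Constraints such as a fixed binding hotspot can be layered onto a diffusion sampling loop. The sketch below pins chosen positions by overwriting the denoiser’s clean-data guess at those positions on every step, a simple replacement trick; it is an assumption for illustration, not necessarily how RF Diffusion enforces its constraints. The `denoise` function stands in for a trained structure network such as RoseTTAFold and here is just the ideal denoiser for standard-normal toy data; each number stands in for one coordinate, and the motif values are made up.

```python
import math
import random


def make_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linear noise schedule; alpha_bar[t] is the share of signal variance left at step t."""
    alpha_bar, running = [], 1.0
    for t in range(num_steps):
        running *= 1.0 - (beta_start + (beta_end - beta_start) * t / (num_steps - 1))
        alpha_bar.append(running)
    return alpha_bar


def sample_with_motif(denoise, length, motif, num_steps=1000, seed=0):
    """Deterministic DDIM-style sampling with some positions pinned to fixed values.

    denoise(x, a) returns a guess of the clean data (stand-in for a trained network).
    motif maps position index -> required final value (a hypothetical hotspot).
    """
    rng = random.Random(seed)
    alpha_bar = make_schedule(num_steps)
    x = [rng.gauss(0.0, 1.0) for _ in range(length)]  # start from pure noise
    for t in reversed(range(num_steps)):
        a_t = alpha_bar[t]
        a_prev = alpha_bar[t - 1] if t > 0 else 1.0
        x0_hat = list(denoise(x, a_t))
        for i, value in motif.items():
            x0_hat[i] = value  # enforce the constraint on the clean-data guess
        eps_hat = [(xi - math.sqrt(a_t) * gi) / math.sqrt(1.0 - a_t) for xi, gi in zip(x, x0_hat)]
        x = [math.sqrt(a_prev) * gi + math.sqrt(1.0 - a_prev) * ei for gi, ei in zip(x0_hat, eps_hat)]
    return x


def denoise(x, a):
    """Ideal denoiser if the toy training data were standard normal: E[x0 | xt] = sqrt(a) * xt."""
    return [math.sqrt(a) * xi for xi in x]


design = sample_with_motif(denoise, length=6, motif={1: 3.0, 4: -2.0})
print(design)  # positions 1 and 4 end at 3.0 and -2.0; the rest are generated freely
```

The free positions are generated around the pinned ones, which loosely mirrors the idea of building a new scaffold around a required binding site.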

Designing proteins is just the first step. The next is translating these digital designs into actual proteins and seeing how they work in cells. In one test, the team took 44 candidates with antibacterial and antiviral potential and produced the proteins inside trusty E. coli bacteria. Over 80 percent of the AI-designed proteins folded into their predicted final form. This is quite the feat, as several subunits had to come together in specific numbers and orientations.

The proteins also grabbed onto their intended targets. In one example, a designed protein bound to SARS-CoV-2, the virus that causes Covid-19, homing in specifically on the virus’s spike protein, the target for Covid-19 vaccines.

In another example, the AI designed a protein that binds to a hormone that regulates calcium levels in the blood. The resulting candidate grabbed onto its target so readily that only a tiny amount was needed. Speaking to MIT Technology Review, Baker said the AI seemed to pull protein drug solutions “out of thin air.”

“These works reveal just how powerful diffusion models can be for protein design,” said study author Dr. Joseph Watson.

Do AIs Dream of Molecular Sheep?

Baker’s lab isn’t the only one chasing AI-based protein drugs.

Generate Biomedicines, a startup based in Massachusetts, also has its eyes on diffusion models for generating proteins. Its software, dubbed Chroma, works similarly to RF Diffusion, and the proteins it generates likewise adhere to biophysical constraints. According to the company, Chroma can generate large proteins (over 4,000 amino acid residues) in just a few minutes on a GPU (graphics processing unit).

The field is just ramping up, but it’s clear that the race for on-demand protein drug design is on. “It’s extremely exciting,” said David Juergens, an author of the RF Diffusion study, “and it’s really just the beginning.”

Image Credit: Ian Haydon / Institute for Protein Design / University of Washington