Turn Your Body Into Your I/O with Skinput (video)

Electronic devices are getting smaller, and so are their interfaces. If you've ever had problems typing on your mobile, or changing a song on your iPod while jogging, Chris Harrison has the answer. His Skinput prototype is a system that monitors acoustic signals on your arm to translate gestures and taps into input commands. Just by touching different points on your limb you can tell your portable device to change volume, answer a call, or turn itself off. Even better, Harrison can couple Skinput with a pico projector so that you can see a graphic interface on your arm and use the acoustic signals to control it. The project is set to be presented at this year's SIGCHI conference in April, but you can check it out now in the video below. Skip past 1:00 to avoid the intro, but make sure to catch the Tetris game demo at 1:19, and the cool pico projector interface starting at 2:05.

Incorporating your body into your mobile systems could be the next big theme in human-computer interfaces. The Skinput system bears a strong resemblance to the I/O for Sixth Sense, Pranav Mistry's open source personal augmented reality device. Whereas Mistry uses a camera to capture video input, Harrison has focused on acoustics. Researchers at Microsoft have taken a third tactic: using the electric signals on the skin's surface that correspond to muscle movement as input commands. All three are pretty novel approaches to controlling your technology through your body, and any one might make headway in the next few years. Together, they suggest that the best way to take advantage of miniaturized mobile devices is to make them part of you.

I've been impressed with Harrison's work at Carnegie Mellon's Human-Computer Interaction Institute since I reviewed his Scratch Input device last year. That system used acoustic signals on hard surfaces to control various electronics. Skinput is the logical successor to Scratch Input: it takes the basic premise of an acoustic interface, moves it onto the body, and adds projected video.

As advanced as Skinput may be, however, it's hard to know if it will ever make it out of the prototype phase. Novel I/O systems are amazing to watch, but transitioning them into a marketable product can take years. Here's an idea, though: Mistry's Sixth Sense device is open source, so maybe Harrison should try to combine it with Skinput. Together, visual and acoustic input could give the personal AR system a dynamic and sensitive set of complex controls able to take on any task.

Whenever I see this level of engineering and inventiveness, I'm as impressed by the people behind it as I am with the technology itself. Harrison was already on my radar after Scratch Input, and he's even more so now with Skinput. Prototypes like these help shape the dialogue about the future of human-computer interfaces even if they never make it to market. (Relatively) young inventors like Harrison, Mistry, and countless others are going to make this century a truly remarkable one to live in. Maybe in a few years I'll be writing these posts on my arm while I'm out jogging. Make it happen, Harrison!

[image and video credits: Chris Harrison, CMU HCII]

[source: Chris Harrison website]

Related Articles

How Scientists Are Growing Computers From Human Brain Cells—and Why They Want to Keep Doing It

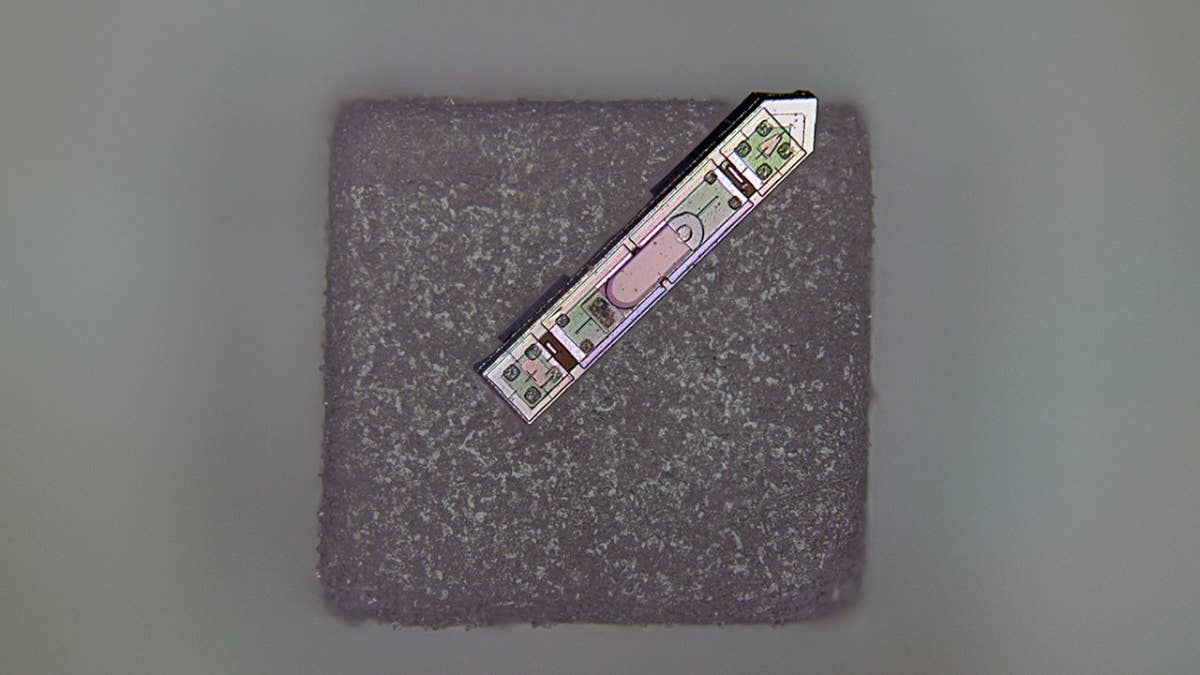

These Brain Implants Are Smaller Than Cells and Can Be Injected Into Veins

This Wireless Brain Implant Is Smaller Than a Grain of Salt

What we’re reading