Audeo Lets You Talk or Control Wheelchair With Thoughts (Video)


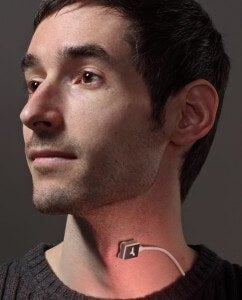

Coleman has mastered the evil scientist pose while riding in his mind-controlled wheelchair.

There's something eerie and amazing about the way that Tom Coleman travels in his wheelchair. You see, Tom isn't paralyzed, and yet he's not using his body to control his movement. He thinks about saying commands to his chair...and then the chair moves. Tom and Michael Callahan are the founders of Ambient Corporation, makers of the Audeo, a device that reads nerve impulses in the neck to help people speak and even control an electronic wheelchair. Designed to help people suffering from diseases like ALS, which erode muscle control over time, Audeo has received numerous awards. Audeo Basic, the system that allows someone to speak using only nerve signals, is already available on a limited basis for trials. Check out Callahan's presentation of how Audeo can be used with cell phones, and video of Coleman in the chair, after the break.

This sort of "nerve reading" technology isn't unique. We've seen Braingate use motor neuron signals in the brain to move a wheelchair and a computer cursor. Cyberdyne uses surface sensors to detect muscle commands that help guide the movements of its HAL exoskeleton. Audeo is something of a blend of the two. Like Braingate, it can control remote objects, but it doesn't require you to have gold wires in your brain. Like HAL, it reads surface signals, but it uses them for both motor movements and language. Taken as a group, these devices show an amazing trend toward reading nerve signals like computer commands. Whether those commands allow us to talk, roll, or lift heavy objects is just marketing. As nerve signal reading becomes more sophisticated, we'll see an increase in the range of devices you can control and in the number of disabilities that can be overcome with the technology.

Go to 1:25 to skip explanation:

When you speak, your brain sends signals to your vocal cords, lungs, and mouth to help you shape and sound out words. With some forms of muscle degeneration, the lungs and the mouth may cease to function, but the signals to the vocal cords can still be used. That's where Audeo comes in. With just three tiny pill-sized sensors around the neck, the device can pick up electrical signals from nerves. Those signals are filtered and amplified by the device, sent to a PC for decoding, and then spoken as words out of speakers.
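To make that chain of steps concrete, here is a toy sketch of a filter, amplify, decode pipeline for three sensor channels. It is not Ambient's algorithm, which the article does not describe. The moving-average filter, the gain value, the `TEMPLATES` table, and the nearest-template decoder are all assumptions chosen for illustration, and the sketch stops at a command label rather than synthesized speech.

```python
from statistics import mean

# Hypothetical templates: expected signal strength on each of the three
# neck sensors for each command. These numbers are invented for illustration.
TEMPLATES = {
    "forward": (0.9, 0.2, 0.1),
    "left": (0.1, 0.8, 0.3),
    "stop": (0.2, 0.2, 0.9),
}


def smooth(samples, window=3):
    """Filter step: trailing moving average to suppress sample-to-sample noise."""
    out = []
    for i in range(len(samples)):
        lo = max(0, i - window + 1)
        out.append(mean(samples[lo:i + 1]))
    return out


def amplify(samples, gain):
    """Amplify step: scale the weak raw signal by a fixed (assumed) gain."""
    return [gain * s for s in samples]


def features(channels, gain=1000.0):
    """One feature per sensor: mean amplitude after rectifying, filtering, and gain."""
    feats = []
    for channel in channels:
        rectified = [abs(s) for s in channel]
        feats.append(mean(amplify(smooth(rectified), gain)))
    return tuple(feats)


def decode(channels, gain=1000.0):
    """Decode step: pick the command whose template is closest to the features."""
    feat = features(channels, gain)

    def distance(name):
        return sum((f - t) ** 2 for f, t in zip(feat, TEMPLATES[name]))

    return min(TEMPLATES, key=distance)


# Simulated raw readings: strong activity on sensor 1, weak on the other two.
sample = [[0.0009, -0.0009] * 4, [0.0002] * 8, [0.0001] * 8]
print(decode(sample))
```

With the simulated readings above, the strongest activity is on the first sensor, so the nearest template is the hypothetical `forward` command. A real system would need far richer features and a trained classifier to separate dozens of phonemes rather than three commands.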


As is clear in the videos, there's a significant lag between subvocalizing words and commands and actually having the computer speak or the wheelchair move. The current rate is somewhere near 30 words per minute, about one fifth the normal speaking rate. Hopefully, as the Audeo program is developed further, the processing speed of the signals will improve.

Callahan hopes that the Audeo will one day be as unobtrusive and cheap as a Bluetooth headset.

Of course, no matter how good the program is, it takes a little time to get used to. While wheelchair commands are fairly simple (Coleman says he can get people moving around in ten minutes), the speech recognition isn't as easy. Audeo should be able to distinguish all 40+ English phonemes (the sounds we make when we speak), but it takes time for users to become accustomed to "pronouncing" the correct sounds in the new system. Still, judging by Callahan's performance in the first video, with familiarity comes a pretty cool mastery of speech.

It's hard to get over the straight faces and completely still bodies of Coleman and Callahan as they demonstrate the capabilities of Audeo. Those are the placid faces of "mind control". Eventually, it would be amazing to see this technology adapted into the SixthSense system from MIT or even keyed into a humanoid robot. While the ability to overcome ALS and similar illnesses is a wonderful short-term benefit, the long-term possibilities for the general public are just as amazing.

[photo credits: John B Cernett, Bland Designs]

Related Articles

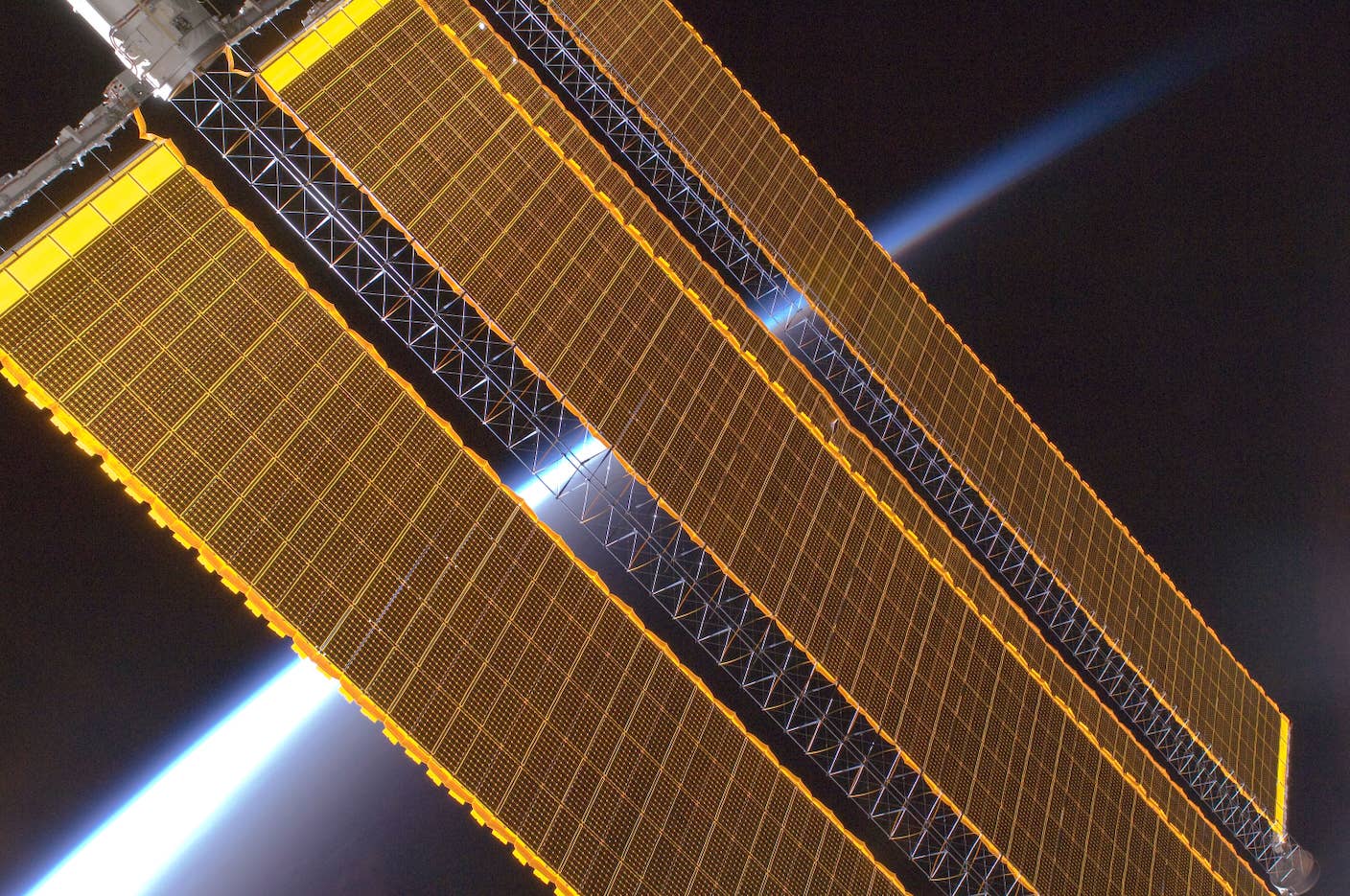

Data Centers in Space: Will 2027 Really Be the Year AI Goes to Orbit?

New Gene Drive Stops the Spread of Malaria—Without Killing Any Mosquitoes

These Robots Are the Size of Single Cells and Cost Just a Penny Apiece

What we’re reading