NAO Robot Impresses With New Abilities At Paris Conference


During the first weekend in April, the French maker of NAO robots, Aldebaran Robotics, organized the first-ever NAO Spring Dev Days in Paris, a weekend codathon for 40 developers to create useful applications, exchange ideas, and show off what they could do with NAO Next Gen robots. The weekend featured a number of presentations, including a talk on choreography and one from the team that taught the robot to play Connect 4. But the devs were mostly busy coding in a "Bring NAO to Life!" contest for the best application produced in 24 hours, with a Next Gen NAO robot as the prize. Though only a few videos of the weekend were recorded, three of the applications stood out for their potential to improve human-robot interactions: transcription of spoken words into handwriting, bot mimicking via Kinect, and the NAO bot as personal fitness trainer.

To kick off the event, a 5-member group of NAOs captured the audience's attention as they danced to a 3-minute ethereal electronic mix peppered with samples from GLaDOS of Valve's popular Portal series of games. Aldebaran's CEO Bruno Maisonnier had previously demonstrated the fluid motion of the Next Gen robots at TEDxConcorde in January, so it was time for another dance. While many of the bots' motions were similar to those in a 2010 performance at the Shanghai World Expo and in their rendition of the Macarena, some of the moves were impressive, especially a one-legged, yoga-esque part in the middle requiring careful balance:

If you can't get enough of robots dancing, you can always watch them performing Michael Jackson's Thriller.

After the 24-hour programming session, developers were ready to show off what they could do with NAO and bring the stuff of science fiction into reality.

NAO As Transcriptionist: Taylor Veltrop, who is a robotics applications expert at Aldebaran, has programmed a NAO bot to hear a word and write it out by hand with the aid of some packing tape holding the pen to its hand:

Although voice-command technology is a staple of any Jetsons episode and voice recognition software has been around for years in operating systems like Windows, applications such as Dragon, and commonly in customer service centers, wide adoption of this technology by the masses just hasn't come to pass. The release of the iPhone 4S brought Siri into many people's lives, and a recent survey reveals that 87 percent of owners use the app at least once a month and about a third use Siri almost daily.

But turning spoken words into text on paper also involves handwriting, which is a lot of mechanical manipulation for a robot to do. In the digital age, some may argue that handwriting is pointless, yet a study conducted at Indiana University Bloomington showed that handwriting activates parts of children's brains essential for learning to read. Kids who struggle with handwriting need a lot of one-on-one practice, so if a robot could demonstrate good handwriting skills, it could be used for more individual instruction and eventually be enhanced for occupational therapy for students who need a lot more help with printing.

NAO As Mimic: As a follow-up to a presentation given in Tokyo last year, Taylor also demonstrated how a robot could be used to mirror his movements using a master/slave control system via a converted Kinect controller. By performing a series of trigonometric calculations on Kinect's skeleton-tracking data, the application allows NAO to process Taylor's movements and mimic them with only a slight delay:

What's amazing about this is that Hollywood released the film Real Steel in 2011 about a bot named Atom that mimics Hugh Jackman's motions to learn how to box (worldwide, the film pulled in $300 million, showing the kind of enthusiasm moviegoers have for this concept). For being at such an early stage in development, NAO's moves are pretty impressive. The project is a great example of the convergence of technologies, considering that the Kinect motion controller was released by Microsoft less than 2 years ago. When the developers participating in DARPA's Robotics Challenge start implementing the same motion-tracking approach, we could see emergency robots entering disaster scenes and mimicking the motions of "controllers" miles away, long before the bots have the artificial intelligence to operate autonomously.
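For readers curious about what the trigonometry in the mimic demo might involve, here is a minimal sketch in Python. It is not Veltrop's code, which the article does not show. It assumes the skeleton tracker provides 3D positions for the shoulder, elbow, and wrist, and it computes the elbow bend from the angle between the upper arm and the forearm. The function names and the `max_flexion` limit are hypothetical, chosen only for illustration.

```python
import math

def joint_angle(a, b, c):
    """Return the angle in radians at point b, formed by the segments b->a and b->c."""
    ba = [a[i] - b[i] for i in range(3)]
    bc = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(ba, bc))
    norm = math.sqrt(sum(x * x for x in ba)) * math.sqrt(sum(x * x for x in bc))
    if norm == 0:
        raise ValueError("joint positions must be distinct points")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cosine = max(-1.0, min(1.0, dot / norm))
    return math.acos(cosine)

def elbow_flexion(shoulder, elbow, wrist, max_flexion=1.5):
    """Convert tracked arm points into a bend angle, limited to a hypothetical joint range.

    A straight arm gives 0.0; the result never exceeds max_flexion (an assumed limit).
    """
    bend = math.pi - joint_angle(shoulder, elbow, wrist)
    return max(0.0, min(max_flexion, bend))

# Example: upper arm along the x-axis, forearm pointing up the y-axis.
print(elbow_flexion((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0)))
```

In a real system the resulting angle would be sent to the robot's elbow motor on every tracking frame, and the same calculation would be repeated for each joint being mirrored.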


NAO As Fitness Trainer: The winner of the contest was Craig Schulman with his project "Work Out Nao!" aimed at turning a NAO bot into a fitness trainer:

One of the well-publicized problems of modern times is the obesity pandemic affecting people across the globe. With the release of Wii Fit and Kinect, many had hoped that exergaming would become a viable tool in reducing obesity, but clearly more is needed. Most people need accountability in their fitness programs, and the Wii can't prompt you to get up and exercise. But a robot could. That's what Massimo Battaglia had in mind with Gymbot, a robotic personal fitness trainer that he envisioned would be available by 2020 and that earned him the 2011 ICSR design award. But it could happen much sooner than that with the progress that Schulman has made coupled with the work already going on at Aldebaran.

These projects demonstrate how developers are only scratching the surface of what NAO bots can do, which makes this first NAO Dev Days quite a success. As more technologies are introduced and improved upon, we are likely to see an emergence of some remarkable things that NAOs can do along with all their groovy dances.

For an excellent overview of the weekend, profiling some of the developers, presentations, and of course, the NAO bots, check out this nice video:

[Media: Robot Dreams, YouTube]

David started writing for Singularity Hub in 2011 and served as editor-in-chief of the site from 2014 to 2017 and SU vice president of faculty, content, and curriculum from 2017 to 2019. His interests cover digital education, publishing, and media, but he'll always be a chemist at heart.

Related Articles

Scientists Want to Give ChatGPT an Inner Monologue to Improve Its ‘Thinking’

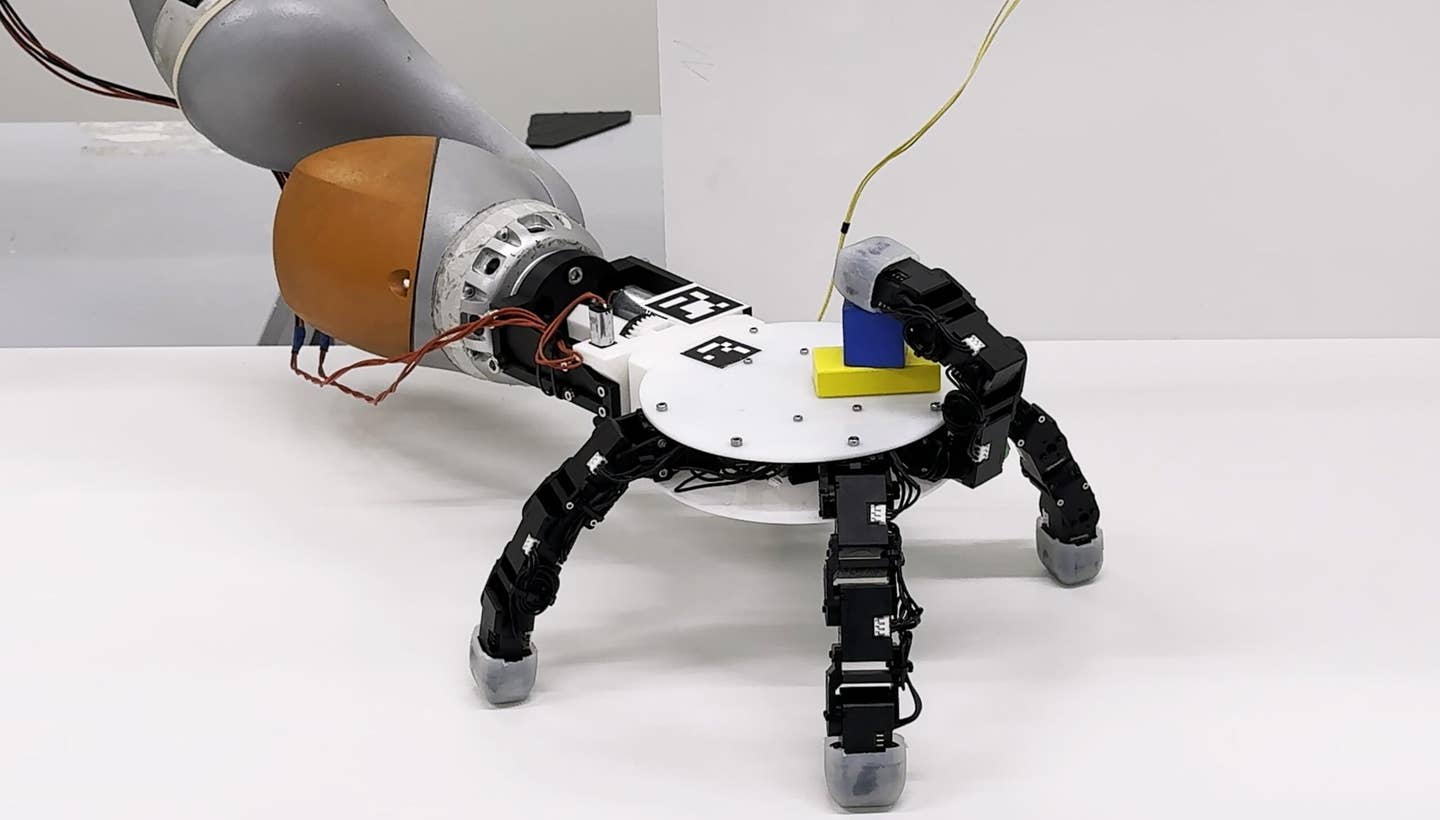

This Robotic Hand Detaches and Skitters About Like Thing From ‘The Addams Family’

Humanity’s Last Exam Stumps Top AI Models—and That’s a Good Thing

What we’re reading