New Software Reveals “Hidden Information” In Video Footage


Leave it up to those brainiacs at MIT to come up with a technology like this. With a nifty video processing program, they can reveal changes that are invisible to the naked eye. Blood flow across a person’s face, vibrations of individual guitar strings, or the breathing patterns of a tiny infant are all made visible by their software, which lets us see subtle changes in the world that would otherwise go undetected.

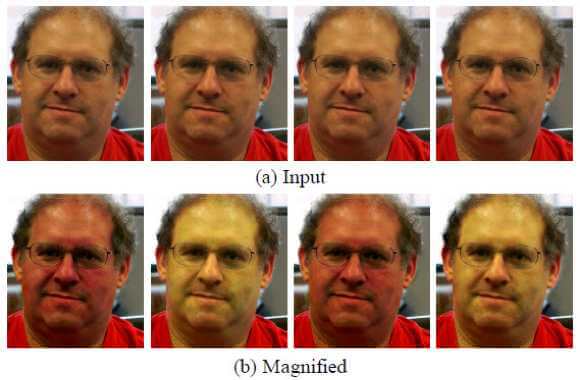

The process, called Eulerian Video Magnification, extracts the “hidden information” in video footage by dividing the image up spatially, tracking the frequency at which the color in those smaller regions changes, then amplifying only the changes that occur at certain frequencies. By amplifying signals that change, say, between 50 and 70 times per minute, a plausible range for heart rates, they can actually see blood flow across a person’s face and thus measure his or her pulse rate.
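
To make the idea concrete, here is a rough Python sketch of the three steps described above. It is a minimal illustration under stated assumptions, not the MIT team’s code: it works on grayscale intensity rather than color, uses simple block averaging as a stand-in for the image pyramid that does the spatial decomposition, and approximates the temporal band-pass filter as the difference of two first-order low-pass filters. The function names `region_means`, `lowpass_coeff`, `magnify` and `estimate_bpm` are hypothetical. Note that a band of 50 to 70 beats per minute corresponds to 50/60 ≈ 0.83 Hz up to 70/60 ≈ 1.17 Hz.

```python
import math

def region_means(frame, block):
    """Average each block x block tile of a 2-D frame.

    Assumption: plain block averaging stands in for the image pyramid
    that provides the spatial decomposition."""
    h, w = len(frame), len(frame[0])
    means = []
    for r0 in range(0, h, block):
        row = []
        for c0 in range(0, w, block):
            tile = [frame[r][c]
                    for r in range(r0, min(r0 + block, h))
                    for c in range(c0, min(c0 + block, w))]
            row.append(sum(tile) / len(tile))
        means.append(row)
    return means

def lowpass_coeff(cutoff_hz, fps):
    """Smoothing factor for a first-order IIR low-pass filter."""
    return 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / fps)

def magnify(frames, fps, f_lo, f_hi, alpha, block=8):
    """Add back alpha times the band-passed (roughly f_lo..f_hi Hz) region signal.

    frames: list of 2-D lists of grayscale intensities (assumed input format).
    The band-pass is the difference of two low-pass filters (an assumed
    approximation); it is causal, so it could run frame by frame."""
    a_lo, a_hi = lowpass_coeff(f_lo, fps), lowpass_coeff(f_hi, fps)
    coarse = [region_means(f, block) for f in frames]
    lo = [row[:] for row in coarse[0]]  # filter state starts at the first frame
    hi = [row[:] for row in coarse[0]]
    h, w = len(frames[0]), len(frames[0][0])
    out = []
    for frame, regions in zip(frames, coarse):
        new_frame = [row[:] for row in frame]
        for i, row in enumerate(regions):
            for j, x in enumerate(row):
                lo[i][j] += a_lo * (x - lo[i][j])
                hi[i][j] += a_hi * (x - hi[i][j])
                band = hi[i][j] - lo[i][j]  # approx. the f_lo..f_hi component
                # Add the amplified band back to every pixel in this region.
                for r in range(i * block, min((i + 1) * block, h)):
                    for c in range(j * block, min((j + 1) * block, w)):
                        new_frame[r][c] += alpha * band
        out.append(new_frame)
    return out

def estimate_bpm(signal, fps, lo_bpm=50, hi_bpm=70):
    """Return the whole-number rate (per minute) in [lo_bpm, hi_bpm] whose
    single-frequency DFT power in the signal is largest."""
    mean = sum(signal) / len(signal)
    centered = [s - mean for s in signal]
    best_bpm, best_power = lo_bpm, -1.0
    for bpm in range(lo_bpm, hi_bpm + 1):
        omega = 2.0 * math.pi * (bpm / 60.0) / fps  # radians per frame
        re = sum(x * math.cos(omega * n) for n, x in enumerate(centered))
        im = sum(x * math.sin(omega * n) for n, x in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bpm, best_power = bpm, power
    return best_bpm
```

For example, `magnify(frames, fps=30, f_lo=50/60, f_hi=70/60, alpha=50)` would exaggerate faint brightness changes near heart-rate frequencies, and `estimate_bpm` applied to one region’s intensity over time picks the strongest rate in the 50 to 70 per-minute band. A still scene passes through unchanged, because the band-passed signal of a constant region is zero.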

“Just like optics has enabled [someone] to see things normally too small,” Fredo Durand, MIT computer scientist and coauthor of the study, told MIT Tech Review, “computation can enable people to see things not visible to the naked eye.”

Users can choose which frequencies to monitor, and they can watch those changes in real time. Check out this video and watch as the software reveals things you didn’t even know you were seeing.


[Source: Miki Rubinstein via YouTube]

Michael Rubinstein, an MIT graduate student who participated in the project, says the system could be used for “contactless monitoring” of patients in a hospital, such as tracking the pulse rate and breathing patterns of premature infants in intensive care units, where the fewer sensors that need to be attached, the better. He also thinks a similar system could be set up in the home to monitor infant breathing and replace current monitors, which can be pricey.

Speaking with MIT News, Rubinstein mentioned that colleagues have suggested other possible uses, including long-range surveillance, laparoscopic imaging of organs, and lie detection, which relies partly on changes in pulse rate.

The team will make the software code available this summer to anyone who wants to use it. Durand expects it will be used predominantly for remote diagnostics, but he also thinks it could be useful to structural engineers who want to measure how buildings move in windy conditions. The software can be used with footage from just about any video camera, but noise produced while filming with lower-quality cameras will also be amplified, so high-quality cameras will yield the best results.
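
Why camera noise matters can be shown with a small, hypothetical single-pixel sketch. It feeds pure random noise (standing in for sensor noise on a perfectly still scene) through the same kind of band-pass-and-amplify step, here assumed to be the difference of two first-order low-pass filters. Whatever part of the noise happens to fall inside the chosen frequency band is multiplied along with any real signal, so the output fluctuates several times more than the input. The function name `amplify_pixel`, the noise level, the band and the `alpha` value are all illustrative assumptions.

```python
import math
import random

def amplify_pixel(series, fps, f_lo, f_hi, alpha):
    """Band-pass one pixel's intensity over time (difference of two
    first-order IIR low-pass filters, an assumed stand-in) and add the
    result back scaled by alpha."""
    a_lo = 1.0 - math.exp(-2.0 * math.pi * f_lo / fps)
    a_hi = 1.0 - math.exp(-2.0 * math.pi * f_hi / fps)
    lo = hi = series[0]  # start both filters at the first sample
    out = []
    for x in series:
        lo += a_lo * (x - lo)
        hi += a_hi * (x - hi)
        out.append(x + alpha * (hi - lo))
    return out

def std(xs):
    """Population standard deviation."""
    m = sum(xs) / len(xs)
    return math.sqrt(sum((x - m) ** 2 for x in xs) / len(xs))

random.seed(0)
# A still scene: constant brightness 100 plus Gaussian sensor noise (sigma = 1).
noisy = [100 + random.gauss(0, 1.0) for _ in range(900)]  # 30 s at 30 fps
out = amplify_pixel(noisy, fps=30, f_lo=50 / 60, f_hi=70 / 60, alpha=50)
print(f"input std {std(noisy):.2f}, output std {std(out):.2f}")
```

A noise-free constant pixel comes out unchanged, so any extra flicker in the output is amplified noise, which is why cleaner footage gives better results.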

The software is another example of extracting more information from the same old data. In 2011, a group at the University of California, San Diego created software that can make a copy of your keys from a digital image. Who knows what else algorithmic acrobatics might be able to extract from video footage or photographs? One thing’s for certain: if there’s more “hidden information” to be found, groups like Durand’s will be searching for it.

Peter Murray was born in Boston in 1973. He earned a PhD in neuroscience at the University of Maryland, Baltimore, studying gene expression in the neocortex. Following his dissertation work, he spent three years as a post-doctoral fellow at the same university studying brain mechanisms of pain and motor control. He completed a collection of short stories in 2010 and has been writing for Singularity Hub since March 2011.
