This Week’s Awesome Stories From Around the Web (Through July 15)

ARTIFICIAL INTELLIGENCE

DeepMind's AI Is Teaching Itself Parkour, and the Results Are Adorable

James Vincent | The Verge

"The research explores how reinforcement learning (or RL) can be used to teach a computer to navigate unfamiliar and complex environments. It’s the sort of fundamental AI research that we’re now testing in virtual worlds, but that will one day help program robots that can navigate the stairs in your house."

VIRTUAL REALITY

Now You Can Broadcast Facebook Live Videos From Virtual Reality

Daniel Terdiman | Fast Company

"The idea is fairly simple. Spaces allows up to four people—each of whom must have an Oculus Rift VR headset—to hang out together in VR. Together, they can talk, chat, draw, create new objects, watch 360-degree videos, share photos, and much more. And now, they can live-broadcast everything they do in Spaces, much the same way that any Facebook user can produce live video of real life and share it with the world."

ROBOTICS

I Watched Two Robots Chat Together on Stage at a Tech Event

Jon Russell | TechCrunch

"The robots in question are Sophia and Han, and they belong to Hanson Robotics, a Hong Kong-based company that is developing and deploying artificial intelligence in humanoids. The duo took to the stage at Rise in Hong Kong with Hanson Robotics’ Chief Scientist Ben Goertzel directing the banter. The conversation, which was partially scripted, wasn’t as slick as the human-to-human panels at the show, but it was certainly a sight to behold for the packed audience."

BIOTECH

Scientists Used CRISPR to Put a GIF Inside a Living Organism's DNA

Emily Mullin | MIT Technology Review

"They delivered the GIF into the living bacteria in the form of five frames: images of a galloping horse and rider, taken by English photographer Eadweard Muybridge... The researchers were then able to retrieve the data by sequencing the bacterial DNA. They reconstructed the movie with 90 percent accuracy by reading the pixel nucleotide code."

DIGITAL MEDIA

AI Creates Fake Obama

Charles Q. Choi | IEEE Spectrum

"In the new study, the neural net learned what mouth shapes were linked to various sounds. The researchers took audio clips and dubbed them over the original sound files of a video. They next took mouth shapes that matched the new audio clips and grafted and blended them onto the video. Essentially, the researchers synthesized videos where Obama lip-synched words he said up to decades beforehand."

Alison tells the stories of purpose-driven leaders and is fascinated by various intersections of technology and society. When not keeping a finger on the pulse of all things Singularity University, you'll likely find Alison in the woods sipping coffee and reading philosophy (new book recommendations are welcome).

Related Articles

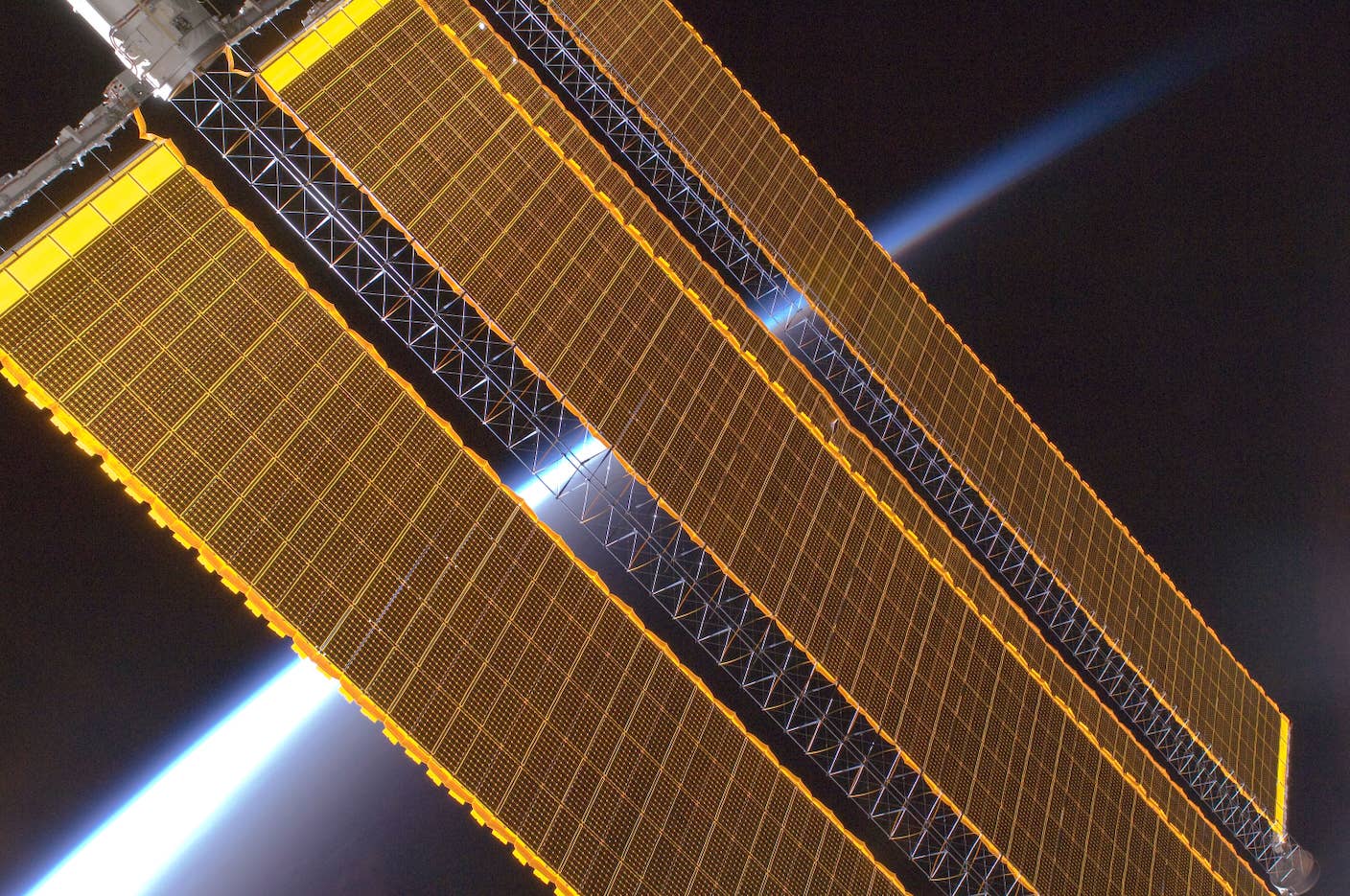

Data Centers in Space: Will 2027 Really Be the Year AI Goes to Orbit?

New Gene Drive Stops the Spread of Malaria—Without Killing Any Mosquitoes

These Robots Are the Size of Single Cells and Cost Just a Penny Apiece

What we’re reading