This Mind-Controlled Robotic Limb Lets You Multitask With Three Arms

If you’ve ever been in a situation where two arms aren’t enough, you’re in luck! According to a new study published in Science Robotics, people can mind-control a robotic arm on one task while using their biological limbs on a different one.

The possibilities are endless: no more juggling keys with grocery bags as you struggle to open the door. Or stopping mid-sentence at the keyboard because you need a bite or a drink. Or trying to hold a ladder steady while climbing on top of it. Or scratching your nose while conducting an epic raid in World of Warcraft (yup, I speak from experience).

Because the brain-machine interface only requires an electrode cap, the technology could become a widespread blessing for the multitaskers among us.

A mind-controlled third arm is already cool enough. The fact that human brains have the ability to operate another limb as if born with it is even cooler. But there’s more: the study shows that wearing the arm can actually nurture the wearer’s own multitasking skills over time, even after ditching the extra limb.

“Multitasking tends to reflect a general ability for switching attention. If we can make people do that using brain-machine interface, we might be able to enhance human capability,” said study author Dr. Shuichi Nishio at the Advanced Telecommunications Research Institute International in Kyoto, Japan.

Fully Armed

Although the field of mind-controlled prosthetics has largely focused on replacing lost limbs, a field called Supernumerary Robotic Limbs (SRL) focuses on augmenting the able-bodied with extra limbs. The hope is that one day robotics will be able to help you do things that are annoying, uncomfortable, impossible, or unsafe to do with your own arms.

There have already been early successes. One is the “drummer arm kit,” which lets its user play music alongside their real hands. More practically, back in 2014, a team at MIT developed a pair of arms you can wear on your shoulder blades (and another that juts out from your waist). In a demo in a construction context, the team showed how the robotic arms could help the wearer lift a heavy piece while his biological arms attached it to the ceiling.

In a case like this, the robotic arms use gyroscope and accelerometer data to make educated guesses about what the wearer is trying to do, and construct a set of “behavior models.” While useful, the arms hardly performed like normal extensions of the wearer’s body. (And the thought of robotic arms going off uncontrollably due to bugs in the software is nothing short of terrifying.)

A much more intuitive way to enhance human limbs is to copy nature. Rather than giving the robotic prostheses a software brain, it makes way more sense to control them directly with brain activity.

But right out of the gate, there’s a problem: brain-controlled robotics requires sophisticated software that deciphers a person’s intention from brain waves alone. In the case of prostheses meant to replace a missing limb, the wearer focuses their attention single-mindedly on performing a task with the robotic arm. This generates a beautifully clear brain signal, which can be picked up by brain-reading software.

But when an able-bodied person wears an arm to multi-task, the problem becomes much more complex. In essence, the mind-reading software now has to untangle two sets of intent based solely on electrical signals measured from the brain.

“Controlling body augmentation devices with the brain and multitasking are two of the main goals in human body augmentation,” the authors said, but “to our knowledge, no BMI studies have explored the control of an SRL to achieve multitasking.”

Cracking Intent

To crack the problem, Nishio and colleague Christian Penaloza recruited 15 volunteers and outfitted them with a prosthetic arm and a brain-wave-reading cap.

When you think about performing some sort of action, neural circuits in motion-related areas in the brain generate electrical signals that can be picked up by arrays of electrodes placed onto the scalp. Each motion generates a unique activity pattern, much like a fingerprint.

In a run-of-the-mill brain-machine interface, an algorithm teases out a user’s intent by reading recorded brain waves. The software then operates the robotic arm, forming a seamless experience that makes users feel like they’re controlling the arm with their minds.

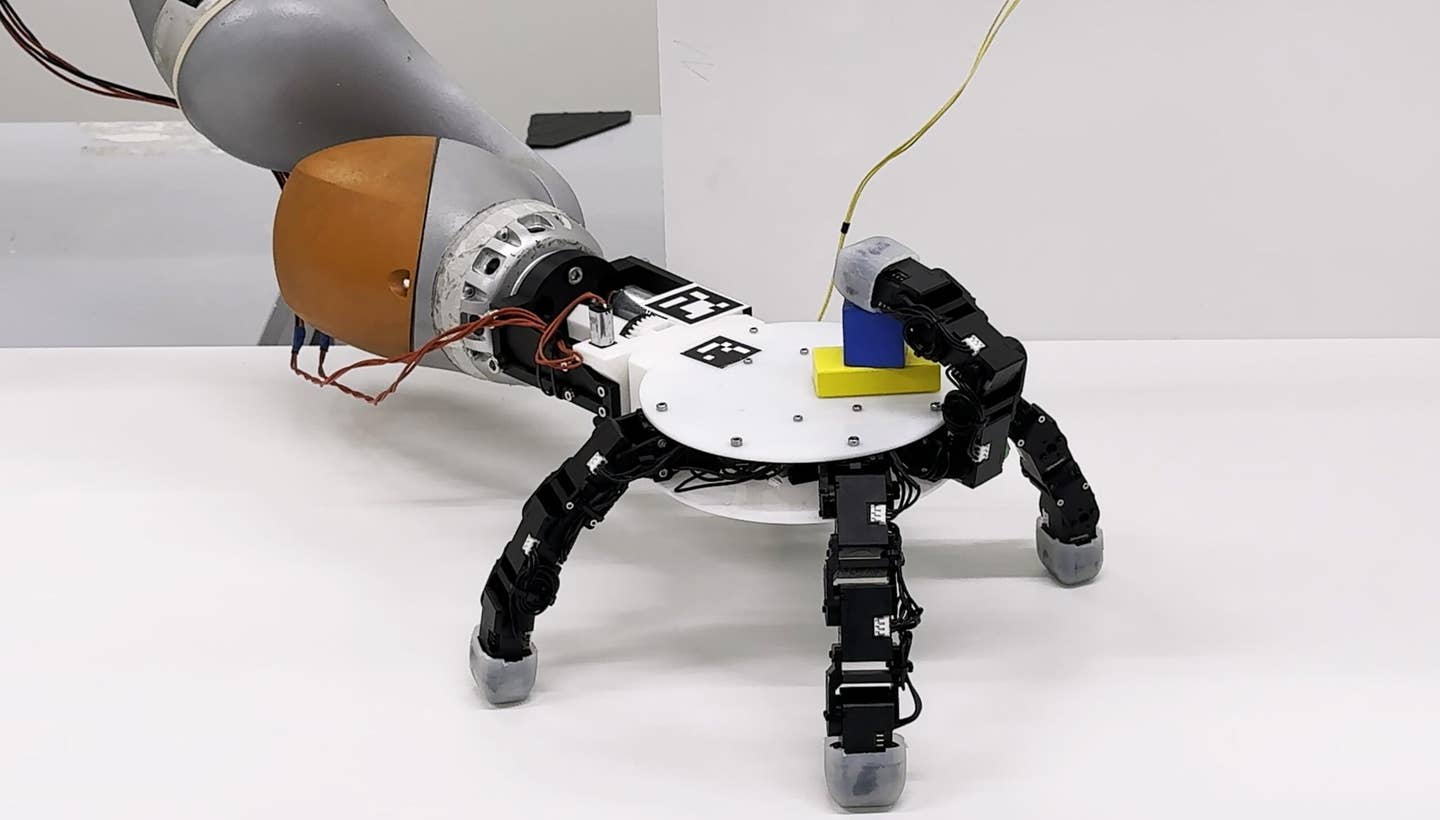

The participants were asked to sit in a chair mounted with a robotic arm, strategically placed in a location that makes them feel like it’s part of their body. To start off, each participant was asked to balance a ball on a board using their own arms while wearing an electrode cap, which picks up the electrical activity from the brain.

Next, the volunteers turned their attention to the robotic arm. Sitting in the same chair, they practiced imagining picking up a bottle using the prosthesis while having their brain activity patterns recorded. A nearby computer learned to decipher this intent, and instructed the robotic arm to act accordingly.
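To give a feel for what "learning to decipher intent" can mean, here is a minimal sketch in Python. It is not the study's decoder, which this article doesn't describe in detail. It swaps in a simple nearest-centroid classifier, and the two-number "feature vectors" standing in for recorded brain activity are synthetic values invented for illustration, as are the labels `rest` and `grasp` and every function name.

```python
import math
import random


def make_trials(center, n, rng, noise=0.2):
    """Generate n synthetic 2-feature trials scattered around a center.

    Assumption: real EEG features would come from recorded signals;
    these values are made up purely for illustration.
    """
    return [tuple(rng.gauss(c, noise) for c in center) for _ in range(n)]


def fit_centroids(trials_by_label):
    """Average the training trials of each label into one centroid."""
    centroids = {}
    for label, trials in trials_by_label.items():
        dims = len(trials[0])
        centroids[label] = tuple(
            sum(t[d] for t in trials) / len(trials) for d in range(dims)
        )
    return centroids


def decode_intent(centroids, features):
    """Return the label whose centroid is closest to the feature vector."""
    return min(
        centroids,
        key=lambda label: math.dist(centroids[label], features),
    )


# "Training": record labelled trials while the user rests or imagines grasping.
rng = random.Random(0)
training = {
    "rest": make_trials((1.0, 1.0), 20, rng),
    "grasp": make_trials((3.0, 0.5), 20, rng),
}
centroids = fit_centroids(training)

# "Use": a new reading is mapped to a command for the (hypothetical) arm.
command = decode_intent(centroids, (2.9, 0.6))
print(command)
```

In this toy version, each imagined action leaves a cluster of similar readings, and a new reading is assigned to whichever cluster it sits closest to. The harder multitasking case described in this article is exactly where such clusters start to overlap.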

Then came the fun part: the volunteers were asked to perform both actions simultaneously, balancing the ball with their natural arms while grasping the bottle with the robotic one. Eight out of the 15 participants successfully performed both actions; overall, the group managed cyborg multitasking roughly three quarters of the time.

Robotic Brain-Training

When the team dug into their data, they found that they could statistically separate the group into good and bad performers. The latter struggled with both tasks, successfully managing only half the time.
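As a rough illustration of how a set of success rates can fall into two groups, the sketch below sorts the rates and picks the cut point that leaves each group as tightly packed as possible. This is only one simple approach, not necessarily the statistical method the authors used, and the rates are hypothetical numbers chosen for the example, not data from the study.

```python
def split_two_groups(scores):
    """Split scores into a low and a high group.

    Tries every cut point in the sorted list and keeps the one with the
    smallest total within-group squared deviation.
    """
    ordered = sorted(scores)

    def spread(group):
        mean = sum(group) / len(group)
        return sum((x - mean) ** 2 for x in group)

    best_cut = min(
        range(1, len(ordered)),
        key=lambda i: spread(ordered[:i]) + spread(ordered[i:]),
    )
    return ordered[:best_cut], ordered[best_cut:]


# Hypothetical per-participant success rates (NOT the study's data).
hypothetical_rates = [0.45, 0.90, 0.50, 0.85, 0.55, 0.80, 0.50, 0.95]
low, high = split_two_groups(hypothetical_rates)
print("lower group:", low)
print("higher group:", high)
```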

This isn’t to say that the algorithm wasn’t working, the authors explain. Rather, it likely represents the group’s natural spread in their ability to multi-task.

Previous research shows that we can’t simultaneously focus on two (or more) tasks; the term “multi-tasking” is a bit of a misnomer. Rather, certain people can rapidly switch their full attention between different things, causing the illusion of managing multiple projects at once.

This seems to be what’s going on: the bad performers did pretty well when concentrating on one task, but faltered when challenged with both.

“It may be possible that both tasks require the same active brain regions to process different kinds of information or that one particular brain region activates during a parallel task, affecting the activity of another brain region that becomes active during the primary task” in these people, the authors said.

What’s more remarkable is how fast the participants picked up both tasks. Normally it takes multiple training sessions to reach the level of performance they reached, the authors explained.

The team fully expects better performance with more natural-looking robotic arms, which could increase a wearer’s sense of ownership over the arm.

That’s pretty awesome. It suggests that it may be possible to enhance human motor capabilities in multi-tasking by using the system as biofeedback. And the beauty of robotic arms, compared with a full-body exoskeleton, is that they’re much less constricting, making the technology more user-friendly.

This is the first mind-controlled robotic arm that can multitask. “That’s what makes it special,” said Penaloza.

Going forward, the team hopes to further amp up their robotic arms with context-aware capabilities that complement brain-issued commands. For example, an arm could be fitted with computer vision abilities to recognize the objects and context around it, and further optimize behavior to match user intention.

The age of the cyborg is fast approaching. Now the question is: if you had a third arm, how would you use it?

Image Credit: Inspiring / Shutterstock.com

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.