How the Brain Builds a Sense of Self From the People Around Us


We are highly sensitive to people around us. As infants, we observe our parents and teachers, and from them we learn how to walk, talk, read—and use smartphones. There seems to be no limit to the complexity of behavior we can acquire from observational learning.

But social influence goes deeper than that. We don’t just copy the behavior of people around us. We also copy their minds. As we grow older, we learn what other people think, feel, and want, and we adapt to it. Our brains are remarkably good at this: in effect, we copy the computations taking place inside other people’s brains. But how does the brain distinguish between thoughts about your own mind and thoughts about the minds of others? Our new study, published in Nature Communications, brings us closer to an answer.

Our ability to copy the minds of others is hugely important. When this process goes wrong, it can contribute to various mental health problems. You might become unable to empathize with someone, or, at the other extreme, you might be so susceptible to other people’s thoughts that your own sense of “self” is volatile and fragile.

The ability to think about another person’s mind is one of the most sophisticated adaptations of the human brain. Experimental psychologists often assess this ability with a technique called a “false belief task.”

In the task, one individual, the “subject,” gets to observe another individual, the “partner,” hide a desirable object in a box. The partner then leaves, and the subject sees the researcher remove the object from the box and hide it in a second location. When the partner returns, they will falsely believe the object is still in the box, but the subject knows the truth.

This supposedly requires the subject to hold in mind the partner’s false belief in addition to their own true belief about reality. But how do we know whether the subject is really thinking about the mind of the partner?

False Beliefs

Over the last ten years, neuroscientists have explored a theory of mind-reading called simulation theory. The theory suggests that when I put myself in your shoes, my brain tries to copy the computations inside your brain.

Neuroscientists have found compelling evidence that the brain does simulate the computations of a social partner. They have shown that if you observe another person receive a reward, like food or money, your brain activity is the same as if you were the one receiving the reward.

There’s a problem though. If my brain copies your computations, how does it distinguish between my own mind and my simulation of your mind?

In our experiment, we recruited 40 participants and asked them to play a “probabilistic” version of the false belief task. At the same time, we scanned their brains using functional magnetic resonance imaging (fMRI), which measures brain activity indirectly by tracking changes in blood flow.

In this game, rather than believing that the object definitely is or is not in the box, both players hold a probabilistic belief about where it is, without ever knowing for certain (making it a kind of Schrödinger’s box). Because the object keeps moving, both players’ beliefs keep changing. The subject has to keep track not only of the object’s whereabouts but also of the partner’s belief about them.

This design allowed us to use a mathematical model to describe what was going on in the subject’s mind, as they played the game. It showed how participants changed their own belief every time they got some information about where the object was. It also described how they changed their simulation of the partner’s belief, every time the partner saw some information.
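The article does not spell out the model’s equations, so the following is only a minimal sketch of the idea, assuming a simple learning-rate update. The `BeliefTracker` class, the `play` helper, the learning rate of 0.3 and the `(object_in_box, subject_saw, partner_saw)` trial format are all hypothetical, not the study’s model. It shows how one update rule can maintain two beliefs: the subject’s own, updated whenever the subject sees where the object is, and a simulated copy of the partner’s, updated only when the partner sees it.

```python
from dataclasses import dataclass


@dataclass
class BeliefTracker:
    """Hypothetical tracker of the probability that the object is in the box."""
    p_box: float = 0.5          # start undecided
    learning_rate: float = 0.3  # assumed value, not taken from the study

    def observe(self, object_in_box: bool) -> None:
        # Nudge the belief toward what was just seen (1.0 = in box, 0.0 = elsewhere)
        outcome = 1.0 if object_in_box else 0.0
        self.p_box += self.learning_rate * (outcome - self.p_box)


def play(trials):
    """Each trial is (object_in_box, subject_saw, partner_saw); returns both beliefs."""
    own = BeliefTracker()
    simulated_partner = BeliefTracker()
    for object_in_box, subject_saw, partner_saw in trials:
        if subject_saw:
            own.observe(object_in_box)
        if partner_saw:
            # The subject updates its model of the partner only from what the partner saw
            simulated_partner.observe(object_in_box)
    return own.p_box, simulated_partner.p_box


# Both players see the object in the box; then only the subject sees it leave, twice
trials = [(True, True, True)] + [(False, True, False)] * 2
own_p, partner_p = play(trials)
print(f"own belief: {own_p:.2f}, simulated partner belief: {partner_p:.2f}")
```

Because the partner missed the later moves, the simulated partner belief stays higher than the subject’s own: a graded version of the false belief in the classic task.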


The model works by calculating “predictions” and “prediction errors.” For example, if a participant predicts that there is a 90 percent chance the object is in the box, but then sees that it’s nowhere near the box, they will be surprised. We can therefore say that the person experienced a large “prediction error.” This is then used to improve the prediction for next time.
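To make the arithmetic concrete, here is a minimal sketch of a prediction error and the update it drives, assuming the common “learning rate times error” rule. The function names and the learning rate of 0.3 are illustrative choices, not the study’s fitted model. It reuses the 90 percent example above.

```python
def prediction_error(predicted_p_box: float, object_in_box: bool) -> float:
    """Outcome minus prediction; its size measures how surprised the observer is."""
    outcome = 1.0 if object_in_box else 0.0
    return outcome - predicted_p_box


def updated_prediction(predicted_p_box: float, object_in_box: bool,
                       learning_rate: float = 0.3) -> float:
    """Move the prediction part of the way toward the outcome (learning rate is assumed)."""
    return predicted_p_box + learning_rate * prediction_error(predicted_p_box, object_in_box)


# 90 percent sure the object is in the box, then it turns up elsewhere
confident_error = prediction_error(0.9, object_in_box=False)    # -0.9: large surprise
hesitant_error = prediction_error(0.1, object_in_box=False)     # -0.1: barely surprising
next_prediction = updated_prediction(0.9, object_in_box=False)  # about 0.63

print(confident_error, hesitant_error, round(next_prediction, 2))
```

The sign says which way the prediction was wrong, and the magnitude is the “surprise.” Simulating the partner would mean computing the same kind of error from the partner’s predicted belief rather than one’s own.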

Many researchers believe that the prediction error is a fundamental unit of computation in the brain. Each prediction error is linked to a particular pattern of brain activity. This means we could compare the activity patterns that arise when subjects experience their own prediction errors with those that arise when they think about the partner’s prediction errors.
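One generic way to compare two activity patterns is to correlate them voxel by voxel. This is a hypothetical illustration of that idea, not the analysis used in the study: `pattern_similarity` is a plain Pearson correlation, and the five-voxel patterns are made-up numbers.

```python
import math


def pattern_similarity(a, b):
    """Pearson correlation between two activity patterns (one value per voxel)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("patterns must have the same length, at least 2")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    spread_a = math.sqrt(sum((x - mean_a) ** 2 for x in a))
    spread_b = math.sqrt(sum((y - mean_b) ** 2 for y in b))
    if spread_a == 0 or spread_b == 0:
        raise ValueError("a constant pattern has no defined correlation")
    return cov / (spread_a * spread_b)


# Made-up activity for five voxels: own prediction errors vs simulated (partner) ones
self_pattern = [1.2, 0.4, -0.3, 0.8, 0.1]
other_pattern = [-0.2, 0.9, 0.7, -0.5, 0.3]

print(round(pattern_similarity(self_pattern, other_pattern), 2))
```

Values near 1 would indicate overlapping patterns; values near or below 0, distinct ones. Real neuroimaging analyses involve far more voxels, noise handling and statistical testing.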

Our findings showed that the brain uses distinct patterns of activity for prediction errors and “simulated” prediction errors. This means that the brain activity contains information not only about what’s going on out there in the world, but also about who is thinking about the world. The combination leads to a subjective sense of self.

Brain Training

We also found, however, that we could train people to make those brain-activity patterns for self and other either more distinct or more overlapping. We did this by manipulating the task so that the subject and partner saw the same information either rarely or frequently. When the patterns became more distinct, subjects got better at distinguishing their own thoughts from the partner’s; when the patterns became more overlapping, subjects got worse at it.

This means that the boundary between the self and the other in the brain is not fixed, but flexible. The brain can learn to change this boundary. This might explain the familiar experience of two people who spend a lot of time together and start to feel like one single person, sharing the same thoughts. On a societal level, it may explain why we find it easier to empathize with those who’ve shared similar experiences to us, compared with people from different backgrounds.

The results could be useful. If self-other boundaries really are this malleable, then maybe we can harness this capacity, both to tackle bigotry and alleviate mental health disorders.

This article is republished from The Conversation under a Creative Commons license. Read the original article.

I am an MBPhD student at University College London. I studied pre-clinical medicine at Oxford University, intercalating with a BSc in neuroscience. In 2014 I moved to UCL to undertake an MBPhD, so that I could do research alongside my medical studies. Between 2015 and 2019 I did my PhD in computational and cognitive neuroscience at the Max Planck UCL Centre for Computational Psychiatry and Ageing Research and the Wellcome Centre for Human Neuroimaging. My research used behavioral modelling and brain imaging to try to understand how the human mind distinguishes between Self and Other, and how this process might go wrong in mental health disorders. I am due to graduate from my medical training in 2021, and I hope to become a research clinician in psychiatry.

Related Articles

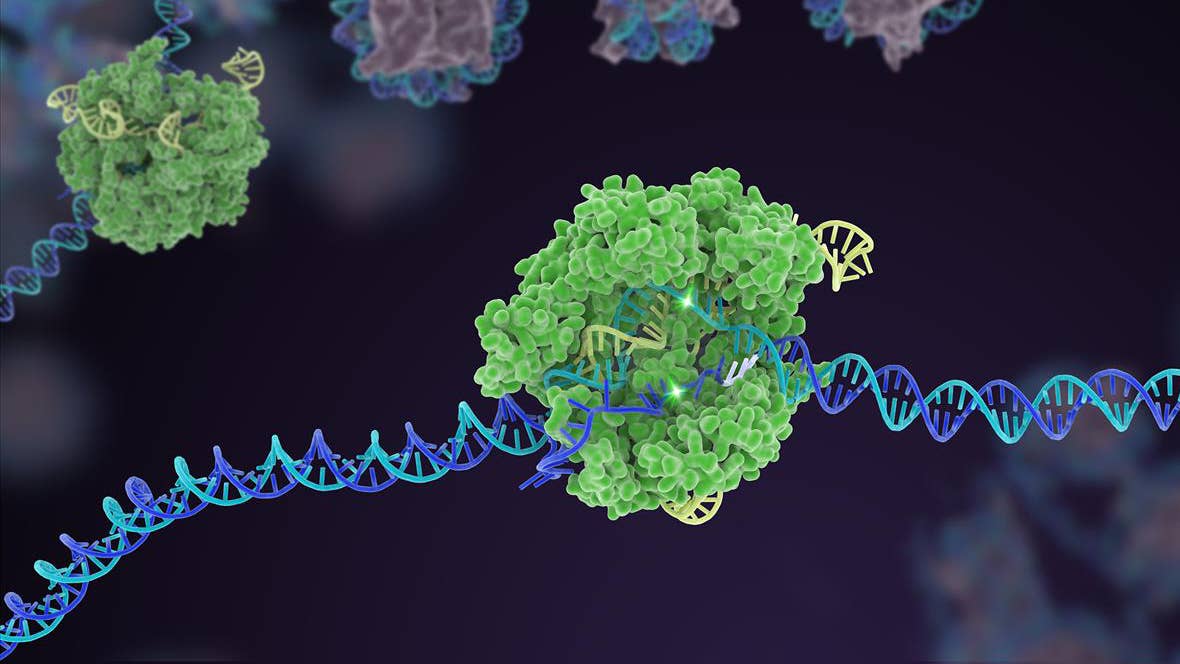

Souped-Up CRISPR Gene Editor Replicates and Spreads Like a Virus

This Brain Pattern Could Signal the Moment Consciousness Slips Away

This Week’s Awesome Tech Stories From Around the Web (Through February 14)

What we’re reading