Meta’s New ChatGPT-Like AI Is Fluent in the Language of Proteins—and Has Already Modeled 700 Million of Them

The race to solve every protein structure just welcomed another tech giant: Meta AI.

A research arm of Meta, the company behind Facebook and Instagram, the team entered the protein shape prediction scene with an ambitious goal: to decipher the “dark matter” of the protein universe. Often found in bacteria, viruses, and other microorganisms, these proteins lounge in our everyday environments but are complete mysteries to science.

“These are the structures we know the least about. These are incredibly mysterious proteins. I think they offer the potential for great insight into biology,” said senior author Dr. Alexander Rives to Nature.

In other words, they’re a treasure trove of inspiration for biotechnology. Hidden in their secretive shapes are keys for designing efficient biofuels, antibiotics, enzymes, or even entirely new organisms. In turn, the data from protein predictions could further train AI models.

At the heart of Meta’s new AI, dubbed ESMFold, is a large language model. It might sound familiar. These machine learning algorithms have taken the world by storm with the rockstar chatbot ChatGPT. Known for its ability to generate beautiful essays, poems, and lyrics from simple prompts, ChatGPT, like the recently launched GPT-4, is trained on millions of publicly available texts. Eventually the AI learns to predict letters and words, write entire paragraphs, and, in the case of Bing’s similar chatbot, hold conversations that sometimes turn slightly unnerving.

The new study, published in Science, bridges the AI model with biology. Proteins are made of 20 “letters.” Thanks to evolution, the sequence of letters helps determine their ultimate shapes. If large language models can easily assemble the 26 letters of the English alphabet into coherent messages, why can’t they also work for proteins?

Spoiler: they do. ESMFold, which is built on a protein language model called ESM-2, blasted through roughly 600 million protein structure predictions in just two weeks using 2,000 graphics processing units (GPUs). Compared to previous attempts, the AI made the process up to 60 times faster. The authors put every structure into the ESM Metagenomic Atlas, which is open for anyone to explore.

To Dr. Alfonso Valencia at the Barcelona Supercomputing Center (BSC), who was not involved in the work, the beauty of using large language models is their “conceptual simplicity.” With further development, the AI can predict “the structure of non-natural proteins, expanding the known universe beyond what evolutionary processes have explored.”

Let’s Talk Evolution

ESMFold follows a simple guideline: sequence predicts structure.

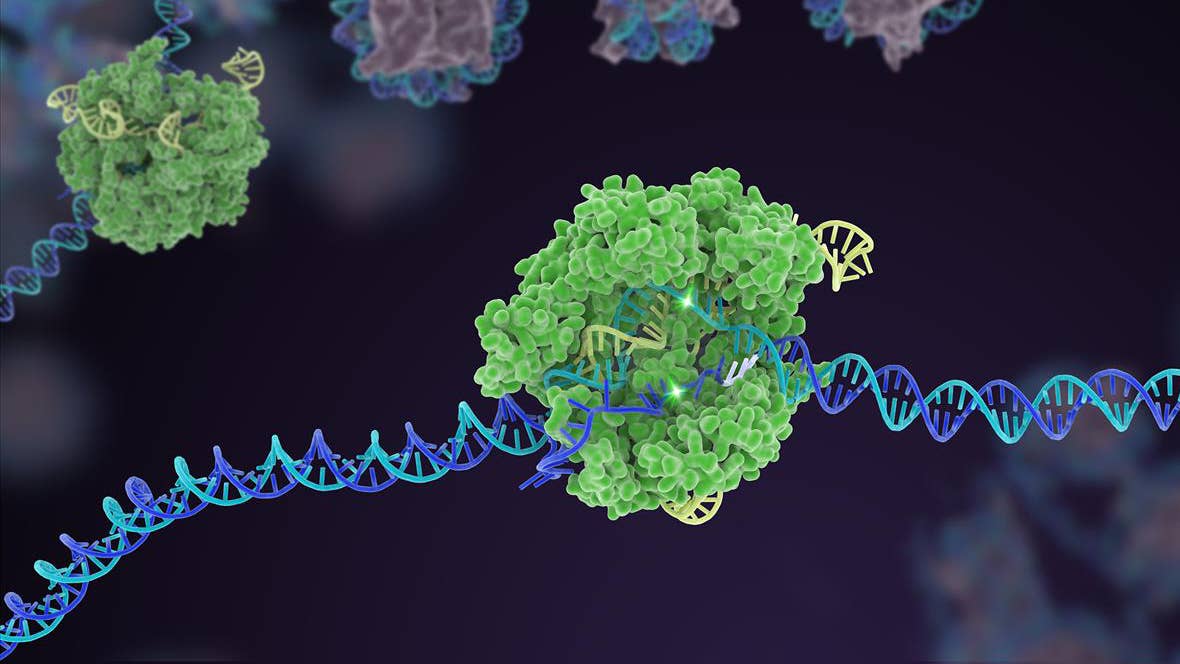

Let’s backtrack. Proteins are made from 20 amino acids, each one a “letter,” strung together like spiky beads on a string. Our cells then fold them into delicate shapes: some look like rumpled bed sheets, others like a swirly candy cane or loose ribbons. The proteins can then grab onto each other to form a complex, for example a channel that spans a brain cell’s membrane and controls the cell’s activity and, in turn, how we think and remember.

Scientists have long known that amino acid letters help shape the final structure of a protein. Similar to letters or characters in a language, only certain ones when strung together make sense. In the case of proteins, these sequences make them functional.
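To make the “letters” idea concrete, here is a minimal Python sketch that treats a protein as text over a 20-letter alphabet and turns it into the integer tokens a language model would consume. The names `AMINO_ACIDS`, `encode`, and `decode` and the example sequence are illustrative assumptions, not Meta’s code, and real models typically add extra tokens for padding, masking, and rare residues.

```python
# The 20 standard amino acids, written with their one-letter codes.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

# Hypothetical vocabulary: each letter gets an integer id (A=0, C=1, ...).
TOKEN_ID = {aa: i for i, aa in enumerate(AMINO_ACIDS)}


def encode(sequence: str) -> list[int]:
    """Map a protein sequence to token ids; reject non-standard letters."""
    try:
        return [TOKEN_ID[aa] for aa in sequence.upper()]
    except KeyError as err:
        raise ValueError(f"not a standard amino acid: {err.args[0]!r}") from None


def decode(ids: list[int]) -> str:
    """Map token ids back to one-letter amino acid codes."""
    return "".join(AMINO_ACIDS[i] for i in ids)


# Made-up example sequence, used only to show the round trip.
example = "MKTAYIAK"
ids = encode(example)
print(ids)
print(decode(ids))
```

Once a sequence is a list of integers, it can be handled much like the word tokens of an ordinary sentence, which is what lets text-oriented models carry over to proteins.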

“The biological properties of a protein constrain the mutations to its sequence that are selected through evolution,” the authors said.

Similar to how different letters in the alphabet converge to create words, sentences, and paragraphs without sounding like complete gibberish, the protein letters do the same. There is an “evolutionary dictionary” of sorts that helps string up amino acids into structures the body can comprehend.

“The logic of the succession of amino acids in known proteins is the result of an evolutionary process that has led them to have the specific structure with which they perform a particular function,” said Valencia.

Mr. AI, Make Me a Protein

Life’s relatively limited dictionary is great news for large language models.

These AI models scour readily available texts to learn to predict the next word. The end result, as seen in GPT-3 and ChatGPT, is strikingly natural conversation.

Meta AI used the same concept, but rewrote the playbook for protein structure predictions. Rather than feeding the algorithm with texts, they gave the program sequences of known proteins.

The AI model, called a transformer protein language model, learned the general architecture of proteins using up to 15 billion parameters, the adjustable “settings” inside the network. It saw roughly 65 million different protein sequences overall.

During training, the team hid certain letters from the AI, prompting it to fill in the blanks. In what amounts to autocomplete, the program eventually learned how different amino acids connect to (or repel) each other. In the end, the AI formed an intuitive understanding of evolutionary protein sequences and how their letters work together to make functional proteins.
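The fill-in-the-blanks exercise can be sketched in a few lines of Python. This toy version only builds one training example: it hides a random subset of letters and records the hidden answers a model would be scored against. The function name `mask_sequence`, the `_` mask symbol, the example sequence, and the 15 percent masking rate (a common choice for this style of training) are assumptions for illustration; the article does not describe Meta’s exact settings.

```python
import random

MASK = "_"  # hypothetical placeholder symbol for a hidden letter


def mask_sequence(sequence: str, mask_fraction: float = 0.15, seed: int = 0):
    """Hide a random subset of letters.

    Returns (masked_sequence, targets), where targets maps each hidden
    position (0-based) to the letter the model is asked to recover.
    """
    if not sequence:
        return "", {}
    rng = random.Random(seed)  # seeded so the example is reproducible
    n_masked = min(len(sequence), max(1, round(len(sequence) * mask_fraction)))
    positions = sorted(rng.sample(range(len(sequence)), n_masked))

    letters = list(sequence)
    targets = {}
    for pos in positions:
        targets[pos] = letters[pos]
        letters[pos] = MASK
    return "".join(letters), targets


# Made-up sequence; a real model would see millions of natural ones.
masked, targets = mask_sequence("MKTAYIAKQRQISFVKSHFSRQ")
print(masked)   # the "question" shown to the model
print(targets)  # the hidden answers used to score its guesses
```

A model trained this way is rewarded only for guessing the hidden letters, so any knowledge it gains about which amino acids tend to appear together comes from the sequences themselves.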

Into the Unknown

As a proof of concept, the team tested ESMFold using two well-known test sets. One, CAMEO, includes nearly 200 structures; the other, CASP14, has 51 publicly released protein shapes.

Overall, the AI “provides state-of-the-art structure prediction accuracy,” the team said, “matching AlphaFold2 performance on more than half the proteins.” It also reliably tackled large protein complexes—for example, the channels on neurons that control their actions.

The team then took their AI a step further, venturing into the world of metagenomics.

Metagenomes are what they sound like: a hodgepodge of DNA material. They usually come from environmental sources such as the dirt under your feet, seawater, or even inhospitable thermal vents. Most of the microbes can’t be grown in labs, yet some have superpowers such as resisting volcanic-level heat, making them a biological dark matter yet to be explored.

At the time the paper was published, the AI had predicted over 600 million of these proteins. The count is now up to over 700 million with the latest release. The predictions came fast and furious in roughly two weeks. In contrast, previous modeling attempts took up to 10 minutes for just a single protein.
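A quick back-of-the-envelope calculation shows what that pace implies. The sketch below uses the round numbers quoted in this article (about 600 million structures, 2,000 GPUs, 14 days) and assumes every GPU was busy for the whole period, which the article does not state. The function name is made up for illustration, and the result is an average, not a measured per-protein runtime.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def gpu_seconds_per_structure(n_structures: int, n_gpus: int, days: float) -> float:
    """Average GPU time spent per predicted structure, assuming full utilization."""
    total_gpu_seconds = n_gpus * days * SECONDS_PER_DAY
    return total_gpu_seconds / n_structures


# Round figures taken from the article; all are approximate.
per_structure = gpu_seconds_per_structure(
    n_structures=600_000_000, n_gpus=2_000, days=14
)
print(f"{per_structure:.1f} GPU-seconds per structure")  # prints 4.0
```

Under these assumptions each GPU finished a structure every few seconds on average, which gives a feel for why an atlas of this size became practical.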

Roughly a third of the protein predictions were of high confidence, with enough detail to zoom into the atomic-level scale. Because the protein predictions were based solely on their sequences, millions of “aliens” popped up—structures unlike anything in established databases or those previously tested.

“It’s interesting that more than 10 percent of the predictions are for proteins that bear no resemblance to other known proteins,” said Valencia. It might be due to the magic of language models, which are far more flexible at exploring, and potentially generating, previously unheard-of sequences that make up functional proteins. “This is a new space for the design of proteins with new sequences and biochemical properties with applications in biotechnology and biomedicine,” he said.

As an example, ESMFold could potentially help suss out the consequences of single-letter changes in a protein. Called point mutations, these seemingly benign edits can wreak havoc in the body, contributing to devastating metabolic syndromes, sickle cell anemia, and cancer. Because the AI is lean and relatively simple, it puts such predictions within reach of the average biomedical research lab, and its speed lets protein shape predictions scale up.
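In code, a point mutation is just a one-character edit to the sequence string. The sketch below applies the classic sickle cell change, written E6V in the traditional numbering of the mature beta-globin protein, to the first eight residues of that protein. The helper `apply_point_mutation` is a hypothetical name for illustration; a real workflow would pass both full-length sequences to a structure predictor and compare the results.

```python
def apply_point_mutation(sequence: str, mutation: str) -> str:
    """Apply a mutation such as 'E6V': original letter, 1-based position, new letter."""
    original, replacement = mutation[0], mutation[-1]
    index = int(mutation[1:-1]) - 1  # convert to 0-based index

    if not 0 <= index < len(sequence):
        raise ValueError(f"position {index + 1} is outside the sequence")
    if sequence[index] != original:
        raise ValueError(
            f"expected {original} at position {index + 1}, found {sequence[index]}"
        )
    return sequence[:index] + replacement + sequence[index + 1:]


# First eight residues of mature human beta-globin (fragment for illustration only).
normal = "VHLTPEEK"
sickle = apply_point_mutation(normal, "E6V")
print(normal)  # VHLTPEEK
print(sickle)  # VHLTPVEK
```

Checking the original letter before editing guards against off-by-one mistakes, a real hazard here because numbering conventions differ on whether the starting methionine is counted.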

Biomedicine aside, another fascinating idea is that proteins may help train large language models in a way texts can’t. As Valencia explained, “On the one hand, protein sequences are more abundant than texts, have more defined sizes, and a higher degree of variability. On the other hand, proteins have a strong internal ‘meaning,’ that is, a strong relationship between sequence and structure, a meaning or coherence that is much more diffuse in texts.” That could bridge the two fields in a virtuous feedback loop.

Image Credit: Meta AI

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
