DeepMind’s New Self-Improving Robot Is Quick to Adapt and Learn Fresh Skills

Despite rapid advances in artificial intelligence, robots remain stubbornly dumb. But new research from DeepMind suggests the same technology behind large language models (LLMs) could help create more adaptable brains for robotic arms.

While autonomous robots have started to move out of the lab and into the real world, they remain fragile. Slight changes in the environment or lighting conditions can easily throw off the AI that controls them, and these models have to be extensively trained on specific hardware configurations before they can carry out useful tasks.

This lies in stark contrast to the latest LLMs, which have proven adept at generalizing their skills to a broad range of tasks, often in unfamiliar contexts. That’s prompted growing interest in seeing whether the underlying technology—an architecture known as a transformer—could lead to breakthroughs in robotics.

In new results, researchers at DeepMind showed that a transformer-based AI called RoboCat can not only learn a wide range of skills but also readily switch between different robotic bodies and pick up new skills much faster than normal. Perhaps most significantly, it’s able to accelerate its learning by generating its own training data.

“RoboCat’s ability to independently learn skills and rapidly self-improve, especially when applied to different robotic devices, will help pave the way toward a new generation of more helpful, general-purpose robotic agents,” the researchers wrote in a blog post.

The new AI is based on the Gato model that DeepMind researchers unveiled in May 2022. Gato is able to solve a wide variety of tasks, from captioning images to playing video games and even controlling robotic arms. This required training on a diverse dataset including everything from text to images to robotic control data.

For RoboCat though, the team created a dataset focused specifically on robotics challenges. They generated tens of thousands of demonstrations of four different robotic arms carrying out hundreds of different tasks, such as stacking colored bricks in the right order or picking the correct fruit from a basket.

These demonstrations were given both by humans teleoperating the robotic arms and by task-specific AI controlling simulated robotic arms in a virtual environment. This data was then used to train a single large model.

One of the main advantages of a transformer-based architecture, the researchers note in a paper published on arXiv, is the ability to ingest far more data than previous forms of AI. In much the same way that training on vast amounts of text has allowed LLMs to develop general language capabilities, the researchers say they were able to create a “generalist” agent that could tackle a wide range of robotics tasks using a variety of different hardware configurations.

On top of that, the researchers showed that the model could also pick up new tasks by fine-tuning on between 100 and 1,000 demonstrations from a human-controlled robotic arm. That’s considerably fewer demonstrations than would normally be required to train on a task, suggesting that the model is building on top of more general robotic control skills rather than starting from scratch.

“This capability will help accelerate robotics research, as it reduces the need for human-supervised training, and is an important step towards creating a general-purpose robot,” the researchers wrote.

Most interestingly though, the researchers demonstrated the ability of RoboCat to self-improve. They created several spin-off models fine-tuned on specific tasks and then used these models to generate roughly 10,000 more demonstrations of the task. These were then added to the existing dataset and used to train a new version of RoboCat with improved performance.
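For readers who think in code, the loop described above can be sketched roughly as follows. This is a toy illustration, not DeepMind's implementation: every name here (`Agent`, `train_generalist`, `fine_tune`, `generate_demos`, `self_improvement_round`) is hypothetical, and the "training" steps are stand-ins that only count demonstrations so the data flow is easy to follow.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Toy stand-in for a trained model; it only records how much data it saw."""
    name: str
    num_training_demos: int


def train_generalist(dataset):
    # Hypothetical placeholder for training one large model on the full dataset.
    return Agent("generalist", len(dataset))


def fine_tune(agent, task, human_demos):
    # Hypothetical placeholder for fine-tuning a task-specific spin-off model.
    return Agent(f"{agent.name}-ft-{task}",
                 agent.num_training_demos + len(human_demos))


def generate_demos(specialist, task, n):
    # Hypothetical placeholder: the spin-off model practices the task and
    # records each attempt as a new demonstration.
    return [{"task": task, "source": specialist.name, "episode": i}
            for i in range(n)]


def self_improvement_round(dataset, new_task, human_demos, n_generated=10_000):
    """One round: fine-tune a spin-off, self-generate data, retrain."""
    generalist = train_generalist(dataset)
    specialist = fine_tune(generalist, new_task, human_demos)
    generated = generate_demos(specialist, new_task, n_generated)
    grown_dataset = dataset + generated  # add self-generated data to the pool
    return grown_dataset, train_generalist(grown_dataset)
```

The default of 10,000 generated demonstrations mirrors the rough figure quoted above; in this sketch only the self-generated demonstrations are folded back into the dataset, which is a simplifying assumption.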

When the first version of RoboCat was shown 500 demonstrations of a previously unseen task, it was able to complete it successfully 36 percent of the time. But after many rounds of self-improvement and training on new tasks, this figure was more than doubled to 74 percent.

Admittedly, the model is still not great at certain problems, with success rates below 50 percent on several tasks and scoring just 13 percent on one. But RoboCat’s ability to master many different challenges and pick up new ones quickly suggests more adaptable robot brains may not be so far off.

Image Credit: DeepMind

Related Articles

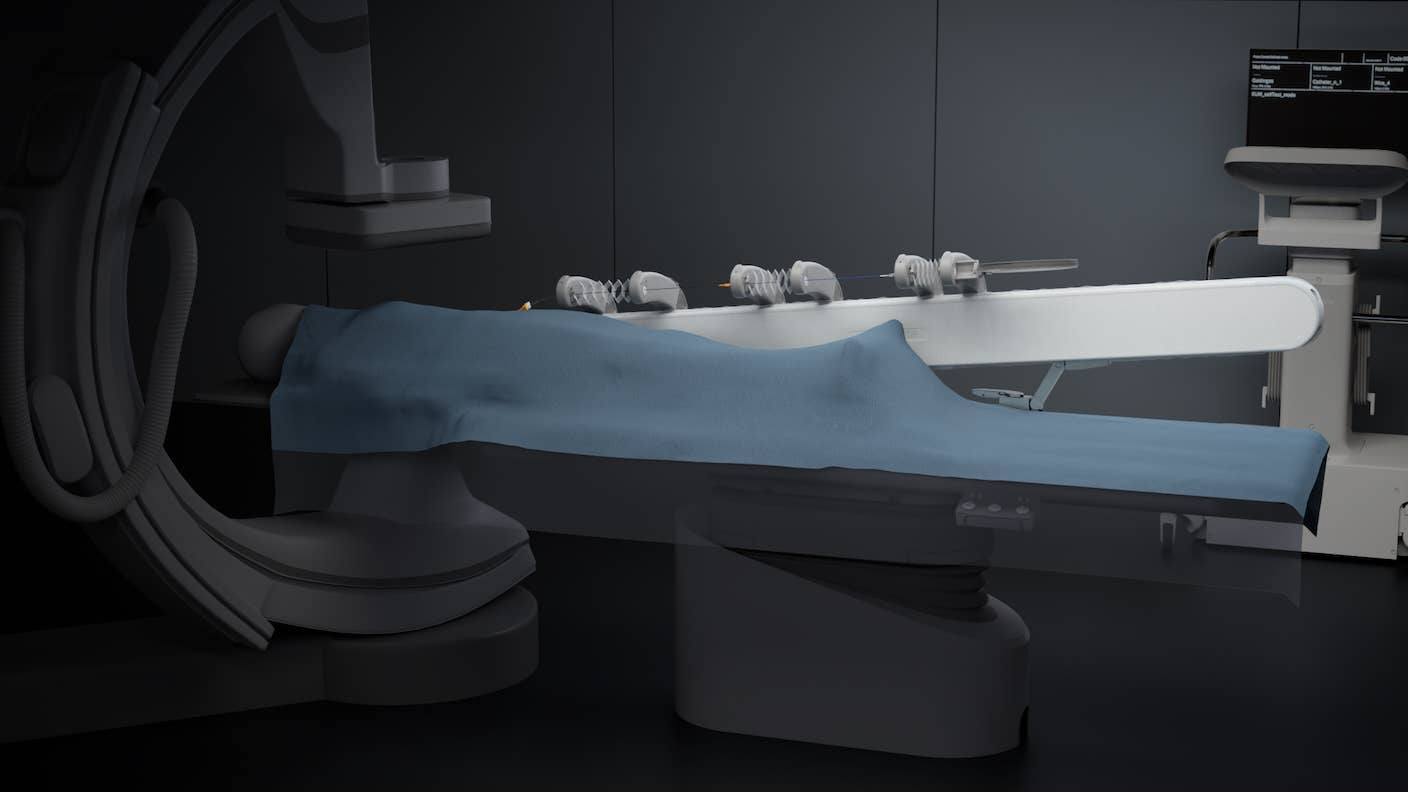

In Wild Experiment, Surgeon Uses Robot to Remove Blood Clot in Brain 4,000 Miles Away

A Squishy New Robotic ‘Eye’ Automatically Focuses Like Our Own

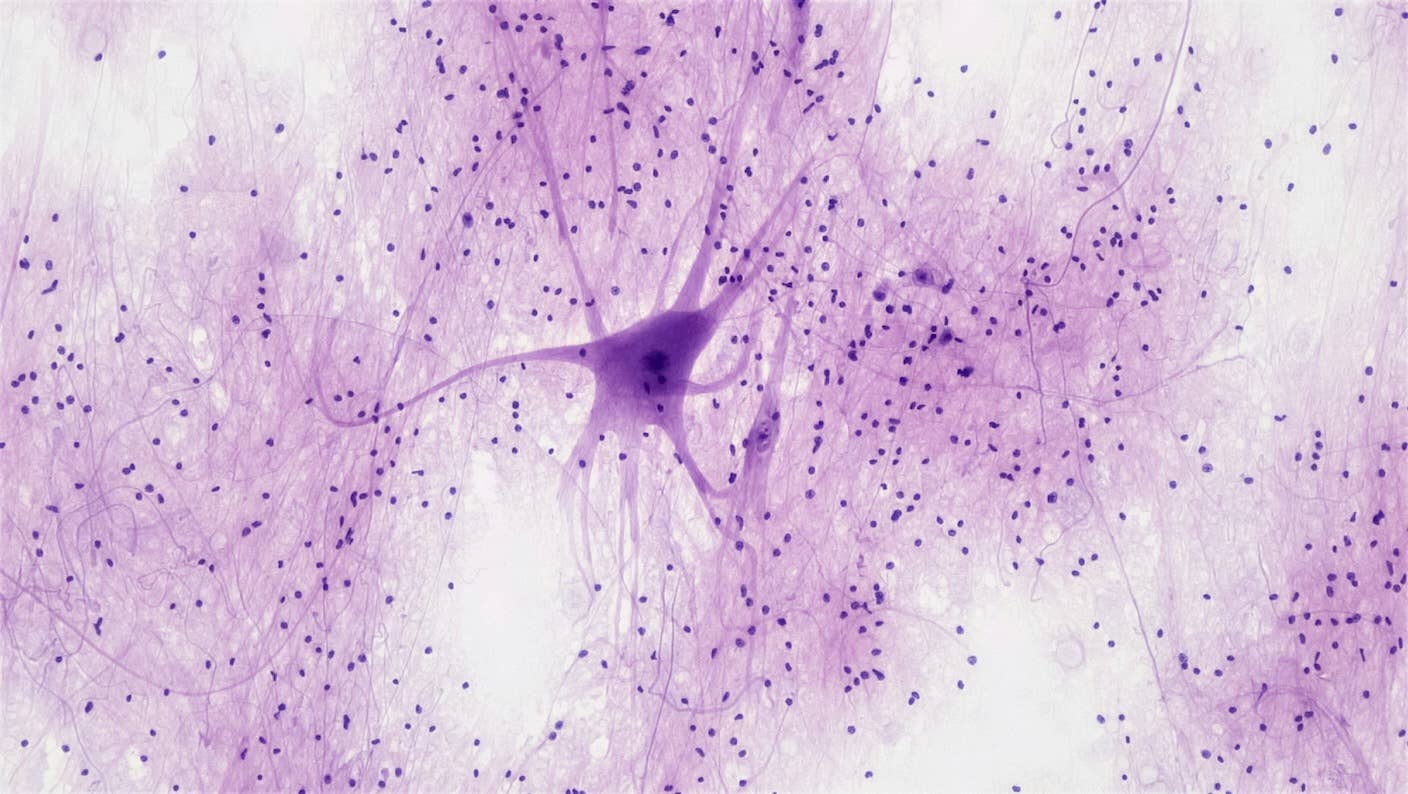

This Crawling Robot Is Made With Living Brain and Muscle Cells

What we’re reading