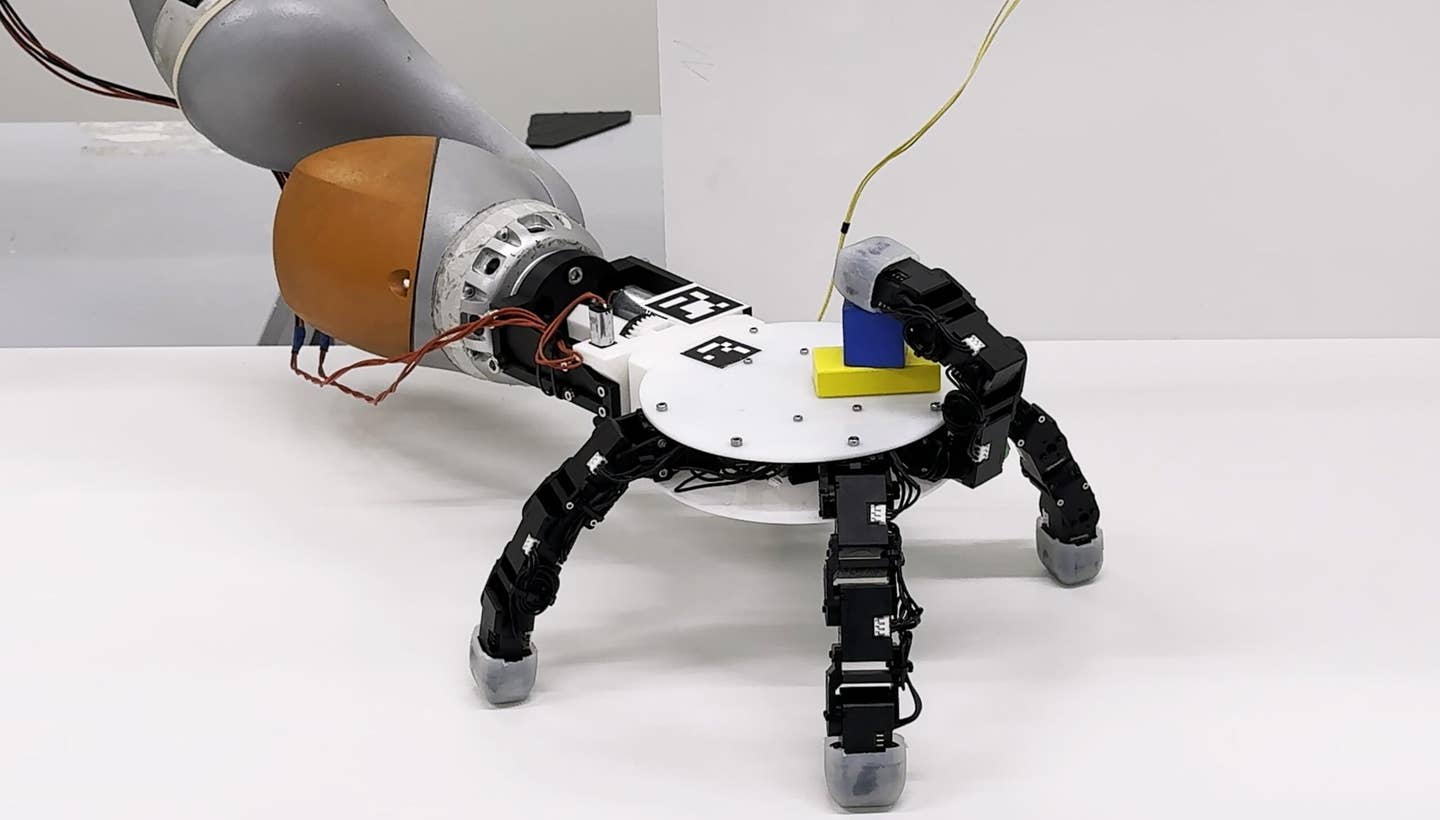

A ChatGPT Moment Is Coming for Robotics. AI World Models Could Help Make It Happen.

Robots need an internal representation of the world and its rules, much like the one we carry in our heads.

If you’re not familiar with the concept of “world models” just yet, a storm of activity at the start of 2025 gives every indication it may soon become a well-known term.

Jensen Huang, CEO of Nvidia, used his keynote presentation at CES to announce a new platform, Cosmos, for what the company is calling “world foundation models.” Cosmos is a generative AI tool that produces videos of virtual worlds. The next day, Google’s DeepMind revealed similar ambitions with a project led by a former OpenAI engineer. All this comes several months after World Labs, an intriguing startup with the same goal, achieved unicorn status (a valuation of $1 billion or more) within only four months.

To understand what world models are, it’s worth pointing out that we’re at an inflection point in the way we build and deploy intelligent machines like drones, robots, and autonomous vehicles. Rather than explicitly programming behavior, engineers are turning to 3D computer simulation and AI to let the machines teach themselves. This means physically accurate virtual worlds are becoming an essential source of training data to teach machines to perceive, understand, and navigate three-dimensional space.

What large language models are to systems like ChatGPT, world models are to the virtual-world simulators needed to train robots. In other words, world models are a type of generative AI tool capable of producing 3D environments and simulating virtual worlds. Just as ChatGPT made AI accessible through an intuitive chat interface, world-model interfaces might allow more people, even those without game-development skills, to build 3D virtual worlds. They could also help robots better understand, plan, and navigate their surroundings.

To be clear, most early world models, including those announced by Nvidia, generate spatial training data in video format. There are, however, already models capable of producing fully immersive scenes as well. One tool, made by a startup called Odyssey, uses Gaussian splatting to create scenes that can be loaded into 3D software tools like Unreal Engine and Blender. Another startup, Decart, demoed its world model as a playable version of a game similar to Minecraft. DeepMind has similarly gone the video game route.

All this reflects the potential for changes in the way computer graphics work at a foundational level. In 2023, Huang predicted that in the future, “every single pixel will be generated, not rendered but generated.” He has since taken a more nuanced view, saying that traditional rendering systems are unlikely to disappear entirely. Still, generative AI that predicts which pixels to show may soon encroach on the work that game engines do today.

The implications for robotics are potentially huge.

Nvidia is now working hard to popularize “physical AI” as the label for the intelligent systems that will power warehouse AMRs (autonomous mobile robots), inventory drones, humanoid robots, autonomous vehicles, farmer-less tractors, delivery robots, and more. To perform their work effectively in the real world, especially in environments with humans, these systems must train in physically accurate simulations. World models could potentially produce synthetic training scenarios of any variety imaginable.

This idea is behind the shift in the way companies articulate the path forward for AI, and World Labs is perhaps the best expression of this. Founded by Fei-Fei Li, known as the godmother of AI for her foundational work in computer vision, World Labs defines itself as a spatial intelligence company. In their view, to achieve true general intelligence, AIs will need an embodied ability to “reason about objects, places, and interactions in 3D space and time.” Like their competitors, they are seeking to build foundation models capable of moving AI into three-dimensional space.

In the future, these could evolve into an internal, humanlike representation of the world and its rules. This might allow AIs to predict how their actions will affect the environment around them and plan reasonable approaches to accomplish a task. For example, an AI may learn that if you squeeze an egg too hard it will crack. Yet context matters. If your goal is placing it in a carton, go easy, but if you’re preparing an omelet, squeeze away.

While world models may be having a moment, it’s early, and significant limitations remain in the short term. Training and running world models requires massive amounts of computing power, even by the standards of today’s AI. Additionally, the models don’t yet reliably obey the real world’s rules, and like all generative AI, they will be shaped by the biases in their training data.

As TechCrunch’s Kyle Wiggers writes, “A world model trained largely on videos of sunny weather in European cities might struggle to comprehend or depict Korean cities in snowy conditions.” For these reasons, traditional simulation tools like game and physics engines will still be used for quite some time to render training scenarios for robots. And Meta’s chief AI scientist, Yann LeCun, who wrote at length about the concept in 2022, still thinks advanced world models, like the ones in our heads, will take a while longer to develop.

Still, it’s an exciting moment for roboticists. Just as ChatGPT marked the inflection point when AI entered mainstream awareness, robots, drones, and embodied AI systems may be nearing a similar breakout moment. To get there, these systems will learn and mature in physically accurate 3D environments.

Early world models may make it easier than ever for developers to generate the countless training scenarios needed to usher in an era of spatially intelligent machines.

Aaron Frank is a researcher, writer, and consultant who has spent over a decade in Silicon Valley, where he most recently served as principal faculty at Singularity University. Over the past ten years he has built, deployed, researched, and written about technologies relating to augmented and virtual reality and virtual environments. As a writer, his articles have appeared in Vice, Wired UK, Forbes, and VentureBeat. He routinely advises companies, startups, and government organizations with clients including Ernst & Young, Sony, Honeywell, and many others. He is based in San Francisco, California.
