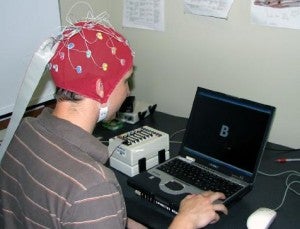

The most valuable machine you own may be between your ears. Researchers at Microsoft Research are using electroencephalography (EEG) measurements to "read" minds in order to help tag images. When someone looks at an image, different areas of their brain show different levels of activity. This activity can be measured, and from it scientists can reasonably infer what kind of thing the person is looking at. Reading the brain activity associated with each image takes only about half a second, which makes the EEG process much faster than traditional manual tagging. The "mind-reading" technique may be a first step toward hybrid systems in which computers and humans jointly analyze images and many other forms of data.

When an image is entered into a database, it is typically labeled by hand. This work is tedious and repetitive, so companies have had to find inventive ways to get it done cheaply.

Amazon's Mechanical Turk offers very small payments to people willing to tag images online. Google Image Labeler has turned the process into a game by pairing each tagger with a partner. Because EEG image tagging requires no conscious effort, workers might be able to perform other tasks at the same time.

Eventually, EEG readings, or the fMRI techniques some hope to adopt for security screening, could turn the brain into a valuable analytical tool. Human and computer visual processing have different strengths. Computers recognize shapes and movements very well (as seen with computers learning sign language), but they have a harder time categorizing objects in human terms. Brains and computers working together could one day provide rapid identification and decision making, even without conscious human effort. This could have a big impact on security surveillance and robotic warfare.

The work at Microsoft Research was led by Desney Tan and has been published over the past few years in IEEE venues and at the CHI (Computer-Human Interaction) conference. EEG image tagging is just one of many human-computer interface projects that Tan and his team hope to explore. We've heard from Tan before: he was one of the researchers developing muscle-sensing input devices.

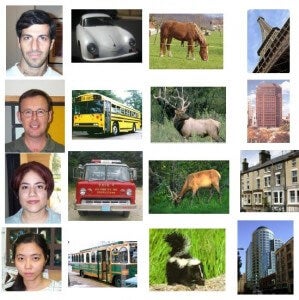

EEG readings are taken at the surface of the head and give only a coarse indication of which areas of the brain are active, and when. Yet this limited information is enough to distinguish between several useful categories. Researchers could determine whether someone was looking at a face or an inanimate object. They also saw good results when contrasting animals with faces, animals with inanimate objects, and in some three-way classifications (e.g., faces vs. animals vs. inanimate objects). Accuracy improved when several users viewed each image and when each image was shown more than once. Surprisingly, giving the viewer more than half a second per image brought no improvement, which means images could be displayed at that rate without any loss of tagging accuracy. During the experiments, test subjects were given distracting tasks and were not told to categorize the images they saw. This shows that the conscious mind does not have to be engaged (and, in fact, should not be) to provide tagging information at that speed.
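One simple way to picture how several viewers and repeated showings could be combined is a majority vote over per-trial guesses. The sketch below assumes each trial (one viewer seeing one image once) has already been turned into a category label by some EEG classifier; the function name `aggregate_tag` and the voting rule are illustrative assumptions, not the combination method used in Tan's papers.

```python
from collections import Counter


def aggregate_tag(trial_labels):
    """Combine per-trial category guesses into a single tag by majority vote.

    Assumption (hypothetical): each entry is the label an EEG classifier
    produced for one viewer seeing the image once. Ties go to whichever
    tied label appeared first in the list.
    """
    if not trial_labels:
        raise ValueError("need at least one trial")
    counts = Counter(trial_labels)
    best = max(counts.values())
    # Walk the trials in order so ties are broken by first appearance.
    for label in trial_labels:
        if counts[label] == best:
            return label


# Five noisy guesses for the same image from a three-way classifier.
trials = ["face", "animal", "face", "inanimate", "face"]
print(aggregate_tag(trials))  # prints "face"
```

Voting is only one option; averaging classifier confidence scores across trials before choosing a label is a common alternative.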

To replace current tagging systems, Tan's team will have to find ways to detect when people are making more precise distinctions (e.g., a red-tailed hawk rather than just an animal, or Mickey Rourke rather than just a human face). They will also need to work out how many viewers, and how many viewings per image, give the best trade-off between cost and tag accuracy.
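A back-of-the-envelope way to think about "how many viewings are enough" is the binomial majority-vote calculation below. It assumes a binary decision (say, face vs. non-face), an odd number of votes, and that every vote is independently correct with the same probability `p`. Real EEG trials, especially repeated viewings by the same person, are likely correlated, so this overstates the gains. The per-trial accuracy of 0.7 in the example is a made-up illustrative number, not a result from the Microsoft Research work.

```python
from math import comb


def majority_accuracy(p, n):
    """Probability that a strict majority of n independent binary votes,
    each correct with probability p, picks the right answer (n must be odd)."""
    if n % 2 == 0:
        raise ValueError("use an odd number of votes to avoid ties")
    need = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


def smallest_n(p, target, max_n=99):
    """Smallest odd number of votes whose majority reaches the target accuracy,
    or None if max_n votes are not enough."""
    for n in range(1, max_n + 1, 2):
        if majority_accuracy(p, n) >= target:
            return n
    return None


# Hypothetical per-trial accuracy of 0.7.
for n in (1, 3, 5, 7):
    print(n, round(majority_accuracy(0.7, n), 3))
print(smallest_n(0.7, 0.85))  # prints 7
```

Under these assumptions, the gain from each extra pair of votes shrinks as `n` grows, which is why choosing the number of viewers and viewings becomes a cost question.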

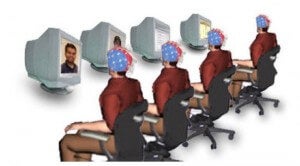

The work at Microsoft Research may provide a better means of tagging images. I can just picture the "tagging farms" now: vast rows of people sitting at computer screens, looking at images while they work on other jobs. Yet the long-term implications are much broader. Most other work we've seen with EEGs or "mind-reading" aims either at discerning what someone is thinking for security purposes or at controlling computers and machines. The work discussed here is much more about using the mind itself as a machine. As brain analysis improves, we may come to see the human mind as a complementary worker or instructor for computer programs. One wonders how we'll monetize, or even quantify, the "work" done by someone's brain in these settings. Renting your mind out to Microsoft... sometimes I feel like I do that already.

[photo credits: Desney Tan, Microsoft Research]