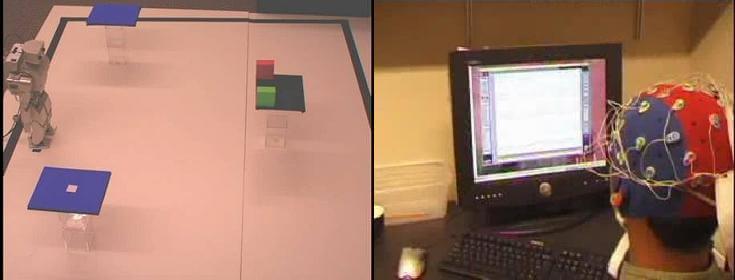

Ask any supervillain and he’ll tell you – good robotic henchmen are hard to find. That’s why I love the robot from Rajesh Rao’s lab at the University of Washington. The little humanoid bot is controlled by the human brain. The system measures electric signals through the surface of the skull (no surgery required), letting you command the robot to perform a simple task. Like any decent flunky, the robot already knows how to accomplish the task; it simply waits for you to tell it when and where you want it to act. Check out the video after the break to see the robot obey the power of the mind. An explanation of the different images follows the video.

Mind-controlled robots, wheelchairs, or cars… the difficulty really comes from the mind-reading, not the automation. While ASIMO’s venture into brain control had users issue direct commands (lift left arm, stick out tongue, etc.), and BrainGate directly measures motor neurons, Rao’s team takes a broader approach to mind control. Surface sensors measure a very narrow range of brain activity and basically just report which of several objects or locations you show interest in. This command-level approach is less sensitive than the other systems (it was also developed years earlier), but it has important implications. When we see robots directly controlled by human minds (as in the movie Surrogates), we are shown a direct thought-to-action connection: I want the arm to lift, the robot lifts the arm. But what if you just thought, “I want that ball,” and the robot handled the arm lifting and grasping on its own? Precision is important, but directly controlling all the myriad functions of a robot may be too difficult for many users. After all, many of us have coordination problems in our own bodies.
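To make the command-level idea concrete, here is a minimal Python sketch. It is not the lab's code: the `Robot` class, its `fetch` method, and the list of motion steps are all hypothetical, invented purely for illustration. The point is only that the user supplies a goal and the robot fills in the low-level motions itself.

```python
from dataclasses import dataclass, field


@dataclass
class Robot:
    """Hypothetical robot that already knows how to carry out a whole task."""
    log: list = field(default_factory=list)

    def fetch(self, target: str) -> None:
        # The robot, not the user, works out the low-level motions.
        # These four steps are made up for illustration.
        for step in ("walk to", "reach for", "grasp", "lift"):
            self.log.append(f"{step} {target}")


def on_selection(robot: Robot, selected: str) -> None:
    """The brain interface only reports WHICH object the user wants."""
    robot.fetch(selected)


robot = Robot()
on_selection(robot, "ball")
print(robot.log)
```

Contrast this with direct control, where the user would have to think their way through each of those steps one at a time.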

The video lacks sound, so here’s a quick play by play of what you’re seeing:

0:05 – The user is hooked up with non-invasive surface sensors that read brain activity.

0:25 – The robot identifies two objects on the table.

0:56 – The user is shown both objects, with a box alternately flashing around each. Brain activity peaks when the user perceives a flash around the object they are focusing on, so the system knows which object has been chosen.

1:15 – After the selection is interpreted, the robot approaches and lifts the chosen object. (This takes a while.)

2:00 – Again, using flashes to record the user’s focus, the robot is told to place the block on the blue platform with the gray square.

2:45 – Success!
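For the curious, the flash-based selection at 0:56 and 2:00 can be sketched in a few lines of Python. This is a toy illustration under heavy assumptions, not the team's actual signal processing: the function name `pick_attended`, the object labels, and the amplitude numbers are all made up, and real brain signals are far noisier. The idea is simply to average the response recorded after each object's flashes and pick the object with the strongest average.

```python
from collections import defaultdict
from statistics import mean


def pick_attended(flashes):
    """Return the object whose flashes drew the largest average response.

    `flashes` is a list of (object_name, response_amplitude) pairs,
    one pair per flash. Amplitudes are in arbitrary, made-up units.
    """
    by_object = defaultdict(list)
    for name, amplitude in flashes:
        by_object[name].append(amplitude)
    # Averaging over repeated flashes smooths out single-trial noise.
    return max(by_object, key=lambda name: mean(by_object[name]))


# Hypothetical recordings: the user is concentrating on object B.
flashes = [
    ("object A", 1.2), ("object B", 4.8),
    ("object A", 0.9), ("object B", 5.1),
    ("object A", 1.4), ("object B", 4.4),
]
choice = pick_attended(flashes)
print(choice)
```

Repeating the flashes several times before deciding is what makes a scheme like this tolerant of a weak signal, which may also help explain why each selection in the video takes a little while.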

[photo and video credit: Rajesh Rao, University of Washington]