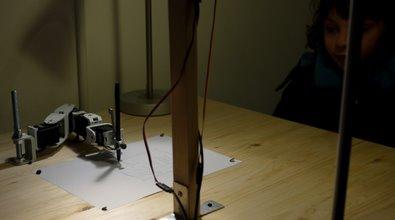

For several years, Patrick Tresset and Frederic Fol Leymarie of Goldsmiths, University of London, have been trying to teach a machine how to draw. The Aikon II project uses a camera, some software, and a robotic arm to observe and sketch human faces. In that way it relies on the same basic tools (eyes, brain, hands) that artists have used for centuries. Aikon’s sketches are not photo-realistic, but they do look stunning. Far better than anything I could draw, I assure you. At the recent Kinetica Art Fair in London, Aikon was on hand to sketch faces and earn praise. You can see it in action in the video below. Aikon II has funding to keep it going at least through 2011. According to Tresset and Leymarie, not only will Aikon’s skill improve, it may one day be able to draw in its own artistic style.

Anybody with a digital camera and a photo printer can produce a more realistic image of a human face than Aikon II. That’s not the point. Aikon is able to provide an artistic interpretation of what it sees. In this way, it’s very different from robots that simply reproduce what they are programmed to draw. This project has much more in common with attempts to get computer programs to compose new pieces of classical music. Tresset and Leymarie have studied many historic sketches (and artists’ notes) to get an idea of the visualization and interpretation needed to create an artistic rendering of a human face. As artificial intelligence improves, programs like Aikon II could develop into artificial artists, capable of the same creative insights, synthesis, and stylistic choices as their human counterparts. The only thing missing would be passion. But maybe we’ll learn how to program that into a robot, too.

[image credits: Aikon II Project]

[source: Aikon Website, Aikon II 2009 Press Release]