It’s 10pm, November 30th, 2013. An author, aiming to finish a novel in November, takes up his laptop and begins typing furiously. By midnight, he’s completed I Got a Alligator for a Pet. A world record? No, just a clever bit of code.

I Got a Alligator for a Pet, written by a developer’s computer program, was part of National Novel Generation Month (NaNoGenMo), a competition in which programmers write apps that automatically generate at least 50,000 words.

Emulating National Novel Writing Month (NaNoWriMo)—a November tradition where authors attempt to complete an entire novel (start to finish) in a month—developer and internet artist Darius Kazemi started NaNoGenMo last year.

Like NaNoWriMo, NaNoGenMo offers no prizes. Unlike NaNoWriMo, the computer-generated novels need not make one whit of sense (though many do). There are few rules beyond submission of the book and the code responsible for writing it.

Many of last year’s submissions used source content by humans: dream journals, tweets, fan fiction, Jane Austen, Homer. One is 50,000 words of roommates arguing about cleaning the apartment. Another has the simplistic feel of a reading primer. While the results aren’t exactly Shakespeare or Hemingway, they are, with few exceptions, comical.

Kazemi’s contribution, Teens Wander Around a House, begins, “Philomena, Dita, Vivianna, Darby, Kiah, and Gale found themselves dropped off at the same party at the same time by the irrespective mothers. How awkward.”

Indeed.

While Kazemi prefers pieces that make sense, he isn’t gunning for literature. In fact, he thinks the less human the output, the more intriguing. “What I want to see is code that produces alien novels that astound us with their sheer alienness,” Kazemi says. “Computers writing novels for computers, in a sense.”

The idea that computers are increasingly taking figurative pen to paper has recently attracted quite a bit of attention. Over the last few years, algorithmic news writing has begun quietly infiltrating big-name journalism.

Last year, a Los Angeles Times algorithm was the first to break the news of a mild quake that hit LA in the early morning hours. And one of the best-known algorithms, by Narrative Science, is used by a number of news outlets, including Forbes.

To date, these robot writers are fairly limited and highly formulaic. For the most part, they excel at what might be called data-centric journalism: sports, finance, weather. Basically, anything that generates statistics and spreadsheets.

The bots peruse the data, looking for outliers, maximums, minimums, and averages. They take the most newsworthy of these statistics, come up with an angle and story structure—choosing from an internal database—and spit out the final text.

The result is simple but effective and, on a quick read, perfectly human.
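To make that pipeline concrete, here is a toy newsbot in Python. It is a minimal sketch, not Narrative Science’s or anyone else’s actual system: the small template “database,” the 1.5-standard-deviation outlier rule, and the function names `find_outlier` and `write_story` are all hypothetical choices made for illustration.

```python
from statistics import mean, pstdev

# Hypothetical "angle database": each story angle maps to a sentence template.
TEMPLATES = {
    "spike": "{name} surged to {value:.1f} on {label}, far above its average of {avg:.1f}.",
    "slump": "{name} fell to {value:.1f} on {label}, well below its average of {avg:.1f}.",
    "steady": "{name} held steady, ranging from {low:.1f} to {high:.1f} around an average of {avg:.1f}.",
}

def find_outlier(values, threshold=1.5):
    """Return the index of the value furthest from the mean if it lies more
    than `threshold` population standard deviations away; otherwise None."""
    avg = mean(values)
    sd = pstdev(values)
    if sd == 0:
        return None  # all values identical: nothing stands out
    idx = max(range(len(values)), key=lambda i: abs(values[i] - avg))
    return idx if abs(values[idx] - avg) > threshold * sd else None

def write_story(name, labels, values):
    """Scan the data, pick the most newsworthy angle, and fill its template."""
    avg, low, high = mean(values), min(values), max(values)
    idx = find_outlier(values)
    if idx is None:
        return TEMPLATES["steady"].format(name=name, low=low, high=high, avg=avg)
    angle = "spike" if values[idx] > avg else "slump"
    return TEMPLATES[angle].format(name=name, value=values[idx],
                                   label=labels[idx], avg=avg)

days = ["Mon", "Tue", "Wed", "Thu", "Fri"]
print(write_story("Acme shares", days, [10.0, 10.2, 9.9, 10.1, 14.0]))
# prints: Acme shares surged to 14.0 on Fri, far above its average of 10.8.
```

Real systems choose among far more angles and phrasings, but the basic shape (compute statistics, rank them, pick a template) matches the description above.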

It’s tempting to look ahead a few years and forecast a news industry dominated by algorithmic writing. Narrative Science’s Kristian Hammond says computers might write Pulitzer-worthy stories by 2017 and generate 90% of the news by 2030.

He might be right. But the software will need to be more capable than it is now, and the underlying idea isn’t new: computers have been assembling sentences algorithmically since 1952. That year, the Ferranti Mark 1 composed love letters from a static list of words, a very simple version of the way modern newsbots build articles from preprogrammed phrases.

“Dear Honey, my avid appetite lusts after your anxious desire. You are my beautiful tenderness my adorable longing,” begins one such letter signed, “Yours seductively—MUC.”
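The Ferranti program’s approach is simple enough to sketch in a few lines. The version below is a loose reconstruction based only on the sample letter quoted above: it fills two fixed sentence templates with randomly chosen words and signs off as MUC. The word lists are a small, hypothetical vocabulary (partly borrowed from the sample), not the original program’s, and the exact templates are an assumption.

```python
import random

# Hypothetical vocabulary, partly borrowed from the sample letter;
# the 1952 program drew on much longer lists.
SALUTATIONS = ["Dear", "Darling"]
PET_NAMES = ["Honey", "Sweetheart", "Duck"]
ADJECTIVES = ["avid", "anxious", "beautiful", "adorable", "wistful"]
NOUNS = ["appetite", "desire", "tenderness", "longing", "heart"]
VERBS = ["lusts after", "yearns for", "clings to"]
ADVERBS = ["seductively", "tenderly", "eagerly"]

def sentence(rng):
    """Fill one of two fixed sentence templates with random words."""
    if rng.random() < 0.5:
        return (f"My {rng.choice(ADJECTIVES)} {rng.choice(NOUNS)} "
                f"{rng.choice(VERBS)} your {rng.choice(ADJECTIVES)} "
                f"{rng.choice(NOUNS)}.")
    return f"You are my {rng.choice(ADJECTIVES)} {rng.choice(NOUNS)}."

def love_letter(n_sentences=3, seed=None):
    """Assemble a salutation, a few template sentences, and a sign-off."""
    rng = random.Random(seed)  # seeded generator so a letter can be reproduced
    greeting = f"{rng.choice(SALUTATIONS)} {rng.choice(PET_NAMES)},"
    body = " ".join(sentence(rng) for _ in range(n_sentences))
    closing = f"Yours {rng.choice(ADVERBS)}, MUC"
    return "\n".join([greeting, body, closing])

print(love_letter(seed=1952))
```

Every letter has the same skeleton; only the word choices change, which is why the output reads as grammatical but slightly unhinged.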

Modern news-writing algorithms are faster and more complex; they can analyze data and draw on sizable lists of styles, angles, and phrases. But their advantage over the Ferranti Mark 1 is almost as much about computing power as it is about method.

That will likely change in the coming years. IBM, Google, and others are currently pouring resources into natural language processing, the research field devoted to getting computers to understand and produce human language. IBM’s Watson, for example, understands questions phrased in plain English, pores over millions of pages related to the query, and provides conversational, self-generated answers.

Next-generation newsbots will likely look more like Watson, able to draw on both quantitative and qualitative information to inform their articles and reports. That capability will be useful in fields from journalism to medicine to business.

But will they ever make anything resembling art? Could, as a recent Fast Company headline phrased it, a great American novel be generated by code? By one measure, algorithms already make art and have for years.

William S. Burroughs, for example, championed algorithmic prose and poetry with his cut-up technique—a method where the artist slices and rearranges sentences or even entire scenes, often with little regard for meaning or flow.
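In software, the cut-up technique reduces to three steps: slice a text into fragments, shuffle them, and paste them back together. The sketch below assumes fixed-size word fragments (three words by default) and a seeded shuffle for repeatability; Burroughs cut physical pages by hand, often at irregular places, so both choices are simplifications, and the `cut_up` function is a hypothetical name.

```python
import random

def cut_up(text, fragment_len=3, seed=None):
    """Slice text into fragments of `fragment_len` words, shuffle the
    fragments, and paste them back together in the new order."""
    words = text.split()
    fragments = [words[i:i + fragment_len]
                 for i in range(0, len(words), fragment_len)]
    random.Random(seed).shuffle(fragments)  # the "scissors and hat" step
    return " ".join(" ".join(fragment) for fragment in fragments)

source = ("Burroughs inspired musicians like David Bowie and REM's "
          "Michael Stipe to make lyrics from cut-ups.")
print(cut_up(source, fragment_len=3, seed=1))
```

Every word of the source survives; only the order of the fragments changes, which is where the unexpected juxtapositions come from.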

Burroughs inspired musicians like David Bowie and REM’s Michael Stipe to make lyrics from cut-ups. Radiohead frontman Thom Yorke has said he pulled phrases from a hat while recording the groundbreaking album Kid A.

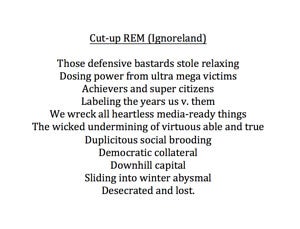

Curious, I split the lyrics to REM’s “Ignoreland” into a list and made new lyrics by successively pairing the top and bottom words. After a few minor rearrangements to satisfy basic rules of grammar and syntax, the result was surprisingly passable. And of course, a computer using the same algorithm and rules could also have performed the task.
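The pairing step is easy to write down. The exact procedure isn’t spelled out here, so this sketch assumes “pairing the top and bottom words” means folding a word list inward from both ends (first with last, second with second-to-last, and so on). The helper names are hypothetical, the manual grammar fixes are left to a human, and the example runs on a neutral sample sentence rather than the actual lyrics.

```python
def fold_pairs(words):
    """Pair the first word with the last, the second with the
    second-to-last, and so on, working inward from both ends.
    A leftover middle word (odd-length input) stands alone."""
    pairs = []
    i, j = 0, len(words) - 1
    while i < j:
        pairs.append((words[i], words[j]))
        i += 1
        j -= 1
    if i == j:
        pairs.append((words[i],))
    return pairs

def fold_lyrics(text):
    """Turn a block of text into short new 'lines' of paired words."""
    return [" ".join(pair) for pair in fold_pairs(text.split())]

for line in fold_lyrics("the quick brown fox jumps over the lazy dog"):
    print(line)
# prints: "the dog", "quick lazy", "brown the", "fox over", "jumps"
```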

Future algorithms may inject just the right amount of randomness (with something like the cut-up method), draw information from the entire internet, command millions of phrases, and even use broad formulas for general style and narrative structure.

After all, some of our most beloved and timeless works are quite formulaic. Joseph Campbell’s famed book The Hero with a Thousand Faces outlines consistent elements in the classic hero’s journey, evident in ancient and modern stories alike. Perhaps most famously, George Lucas said Campbell’s work was a driving force behind Star Wars.

Why couldn’t the structure of the hero’s journey, our favorite fairy tales, or best sellers be employed by algorithm?
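As a toy illustration of structure-driven generation, the sketch below walks a simplified, paraphrased subset of Campbell’s stages and fills a one-sentence template for each. The stage list, the templates, and the `outline` function are hypothetical choices for illustration; a real generator would need far richer material at every step.

```python
# A simplified, paraphrased subset of Campbell's stages, each paired
# with a hypothetical one-sentence template.
HERO_JOURNEY = [
    ("Call to Adventure", "{hero} learns that {goal} is in danger."),
    ("Refusal of the Call", "At first, {hero} refuses to leave {home}."),
    ("Supernatural Aid", "A stranger named {mentor} offers {hero} help."),
    ("Crossing the Threshold", "{hero} finally leaves {home} behind."),
    ("Road of Trials", "On the road, {hero} faces test after test."),
    ("Ultimate Boon", "At last, {hero} wins the means to save {goal}."),
    ("Return", "{hero} returns to {home}, changed for good."),
]

def outline(**cast):
    """Fill every stage template with the same cast, in order.
    str.format ignores keyword arguments a template doesn't use."""
    return [f"{stage}: {template.format(**cast)}"
            for stage, template in HERO_JOURNEY]

for beat in outline(hero="Kiah", goal="the village well",
                    home="the valley", mentor="Darby"):
    print(beat)
```

The structure does the heavy lifting here; swapping in a different cast yields a different, if equally thin, story.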

Beyond the (likely significant) blood, sweat, and tears spent coding the program, there isn’t, in theory, any particular factor limiting the quality of computer-generated prose. It might eventually be indistinguishable from a human product.

However, even if such prose is beautiful, artistic, and coherent, a human is still pulling the strings: writing the program, feeding it information, and hitting the ‘start’ button. The program is a vastly complicated mechanism for pulling phrases from a hat. It’s just another tool humans use to make art, like a paintbrush, pottery wheel, or guitar amplifier.

But that may not always be the case. In the future, evolutionary algorithms (or some other form of self-reproducing code) might generate themselves, removing the human step and developing into something altogether new and undirected. If such programs decided to write, what would their prose look like? Probably, as Kazemi says, astoundingly alien.

Or there’s another possibility. Such extremely advanced programs might find writing charming, quaint, and utterly useless.

We tend to anthropomorphize artificial intelligence. Like Pinocchio, Star Trek’s Lieutenant Commander Data tries to be more human, learning violin or painting and never quite replicating the intangibles characteristic of fine art. But maybe art will forever remain a human endeavor, not because machines can’t master it—but because they just don’t give a damn.

Image Credit: Shutterstock.com