When we let our minds wander, sleeping or waking, they begin mixing and remixing our experiences to create weird images, hallucinations, even epiphanies.

These might be the result of idle daydreaming on the side of a hill, when we see a whale in the clouds. Or they might be more significant, like the famous tale that the chemist Friedrich Kekulé discovered the circular shape of benzene after daydreaming about a snake eating its own tail.

There is little doubt we are a species consumed by our dreams—that our ability to find unexpected new patterns in the noise is what makes us human and what makes us creative.

Maybe that’s why a set of incredibly dream-like images recently released by Google is causing such a stir. These particular images were dreamed up by computers.

Google calls the process by which the images were created inceptionism, recalling the movie Inception, and likewise, the images themselves range from beautiful to bizarre.

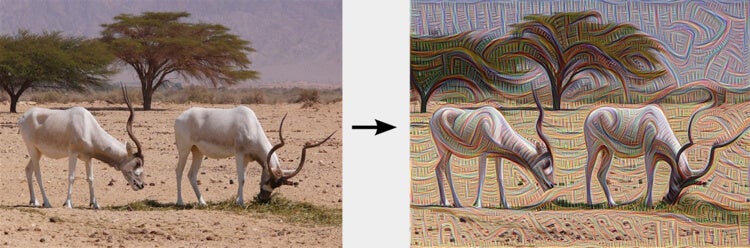

So, what exactly is going on here? We recently wrote about the rapid advances in image recognition using deep learning algorithms. Fed millions of labeled images (“cat,” “cow,” “chair,” etc.), these algorithms learn to recognize and identify objects in unlabeled images. Earlier this year, machines at Google, Microsoft, and Baidu beat a human benchmark at image recognition.

In this case, Google reversed the process. They tasked their software with generating images based on the information already stored in its artificial neural network.

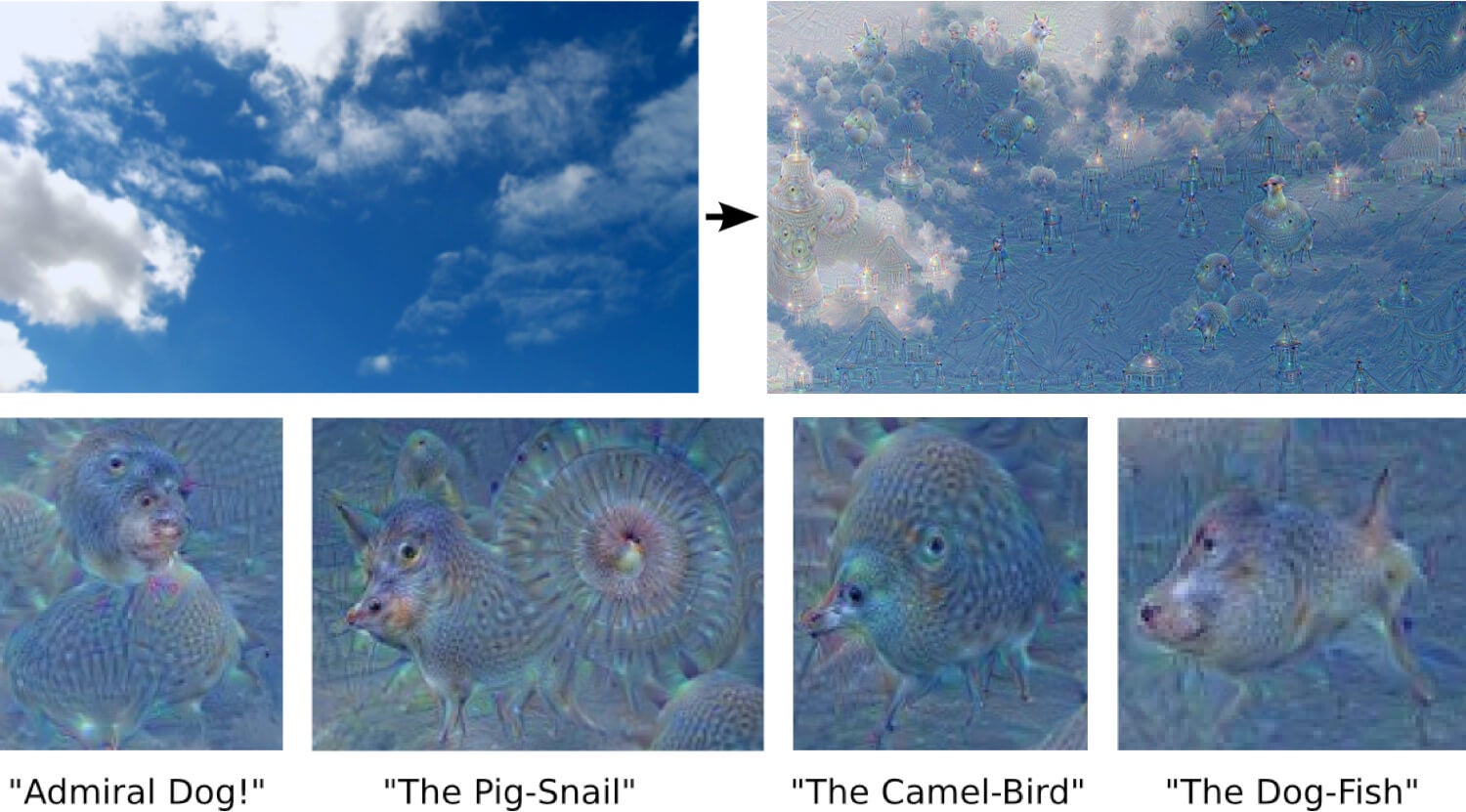

And here’s the fascinating bit: in a part of the experiment where the software was allowed to “free associate” and then forced into feedback loops to reinforce these associations, it found images and patterns (often mash-ups of things it had already seen) where none existed previously.

In some examples it interpreted leaves as birds or trees as buildings. In others, it created weird imaginary beasts in clouds—an “admiral-dog,” “pig-snail,” “camel-bird,” or “dog-fish.”

This tastes a little like our own creativity. We take in impressions, mash them up in our mind, and form complex ideas—some nonsensical, others more profound. But is it the same thing?

The easy answer: Of course not.

It’s Lady Lovelace’s objection as outlined by Alan Turing. Ada Lovelace, daughter of poet Lord Byron, wrote the earliest description of what we’d today call the software and programming of a modern universal computer. And she doubted machine creativity would ever exist.

“The Analytical Engine has no pretensions whatever to originate anything,” Lovelace wrote. “It can do whatever we know how to order it to perform. It can follow analysis; but it has no power of anticipating any analytical relations or truths.”

That is, machines do as we tell them. Nothing more.

Turing rephrased Lovelace’s objection as “a machine can never take us by surprise.” And he disagreed. He said his machines often surprised him, mainly because he understood their underlying settings only in a general sense, while the specifics often conspired to create surprising results in practice.

Indeed, Google’s reason for running this experiment was that “we actually understand surprisingly little of why certain models work and others don’t.” In other words, we get the general idea, but we often don’t know what’s taking place in every step of the process.

Artificial neural networks, more or less based on the human brain, are made of hierarchical layers of artificial neurons. Each level is responsible for recognizing increasingly abstract image components. The first level, for example, might be tasked with finding edges and corners. The next level might look for basic shapes—all the way up until the final level makes an abstract leap to “fork” or “building.”
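To make the idea of stacked levels concrete, here is a minimal sketch in Python. It is an illustration only: the two "layers" below are hand-written rules on a one-dimensional strip of pixels, not learned neurons, and the names `edge_layer`, `bar_layer`, and `classify` are hypothetical. The point is simply that each level works on the output of the level beneath it.

```python
def edge_layer(signal):
    """Level 1: respond to changes between neighbouring pixels."""
    return [b - a for a, b in zip(signal, signal[1:])]


def bar_layer(edges):
    """Level 2: call it a 'bar' if a rising edge is followed by a falling edge."""
    rises = [i for i, e in enumerate(edges) if e > 0]
    falls = [i for i, e in enumerate(edges) if e < 0]
    return any(r < f for r in rises for f in falls)


def classify(signal):
    """Final level: turn the shape evidence into a label."""
    return "bar" if bar_layer(edge_layer(signal)) else "background"


if __name__ == "__main__":
    print(classify([0, 0, 1, 1, 0, 0]))  # a bright bar on a dark strip
    print(classify([0, 0, 0, 0]))        # nothing there
```

In a real network, the rules at each level are learned from labeled examples rather than written by hand, which is exactly why researchers need tricks to find out what those rules have become.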

Running the algorithms in reverse is a way of finding out what they’ve learned.

In one part of the experiment, the researchers asked the network to generate a specific image, say its conception of a banana, starting from random noise (think static on a television screen). This was a way of determining how well it knew bananas. In one instance, when asked to generate a dumbbell, the software repeatedly showed dumbbells attached to arms.

“In this case, the network failed to completely distill the essence of a dumbbell,” Google’s engineers wrote in a blog post. “Maybe it’s never been shown a dumbbell without an arm holding it. Visualization can help us correct these kinds of training mishaps.”
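The noise-to-banana step can be sketched in a few lines of Python. This is a toy under stated assumptions, not Google's code: the "network" is a single hypothetical linear detector over a 3x3 image, its weights (`BANANA_WEIGHTS`) are made up, and the image is nudged uphill on the detector's score by plain gradient ascent. A real system would use a trained deep network and extra constraints to keep the result looking like a natural image.

```python
import random

# Hypothetical "banana detector": one linear unit over a 3x3 image.
# Positive weights mark pixels it likes, negative weights pixels it dislikes.
BANANA_WEIGHTS = [
    -1.0, -1.0,  1.0,
    -1.0,  1.0, -1.0,
     1.0, -1.0, -1.0,
]


def banana_score(image):
    """How strongly the toy detector responds to the image."""
    return sum(w * p for w, p in zip(BANANA_WEIGHTS, image))


def generate_from_noise(steps=200, step_size=0.1, seed=0):
    """Start from static and repeatedly nudge pixels to raise the score."""
    rng = random.Random(seed)
    image = [rng.random() for _ in BANANA_WEIGHTS]  # random noise
    for _ in range(steps):
        # For a linear unit, the gradient of the score with respect to
        # each pixel is simply that pixel's weight.
        image = [p + step_size * w for p, w in zip(image, BANANA_WEIGHTS)]
        # Keep every pixel in the valid brightness range [0, 1].
        image = [min(1.0, max(0.0, p)) for p in image]
    return image


if __name__ == "__main__":
    result = generate_from_noise()
    for row in range(3):
        print(result[3 * row: 3 * row + 3])
```

The image that emerges is just the detector's own template: whatever the detector has come to associate with the label, arms included, is what gets drawn.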

It got more interesting when they allowed the algorithm to look at an image and free associate. How abstract the result was depended on which layer of artificial neurons they queried.

The first, least abstract layer emphasized edges. This resulted in “ornament-like” patterns, something you’ve probably already seen in a photo sketch app. But more abstract features emerged in higher layers. These were then further accentuated by induced feedback loops.

The researchers asked the network, “Whatever you see there, I want more of it!”
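That feedback loop can also be sketched in Python. Again, this is a toy with stated assumptions rather than the researchers' implementation: one hypothetical layer with two made-up feature detectors (`FEATURES`) looks at a four-pixel image, and each pass changes the image in the direction that increases whatever the layer already responds to (gradient ascent on half the sum of squared activations).

```python
# Hypothetical feature detectors for one layer. Each row responds to one
# pattern in a 4-pixel image; the weights are invented for illustration.
FEATURES = [
    [1.0, 1.0, 0.0, 0.0],  # "left" pattern
    [0.0, 0.0, 1.0, 1.0],  # "right" pattern
]


def activations(image):
    """Response of each detector, with negative responses cut to zero."""
    return [max(0.0, sum(w * p for w, p in zip(row, image))) for row in FEATURES]


def amplify(image, steps=10, step_size=0.1):
    """Feed the image back repeatedly, boosting what the layer already sees."""
    for _ in range(steps):
        acts = activations(image)
        # Gradient of 0.5 * sum(a_j ** 2) with respect to pixel i is
        # sum over detectors j of a_j * FEATURES[j][i].
        grad = [
            sum(a * row[i] for a, row in zip(acts, FEATURES))
            for i in range(len(image))
        ]
        image = [p + step_size * g for p, g in zip(image, grad)]
    return image


if __name__ == "__main__":
    faint = [0.2, 0.1, 0.0, 0.0]  # a faint hint of the "left" pattern
    print(activations(faint))
    print(activations(amplify(faint)))
```

A faint hint of the "left" pattern grows stronger with every pass, while the "right" pattern, which the layer never detected, stays at zero. Only what the network already sees gets amplified.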

In one sense, these images are absolutely the result of a machine spitting out what its network has learned, as directed by its programmers. Just as Lady Lovelace would have noted. And at the same time, they are undoubtedly surprising in a way Alan Turing would recognize.

Probably the most surprising aspect is just how much they resemble, in both process and output, something we ourselves might create—a daydreamer finding weird shapes in the clouds or an abstract artist visualizing otherworldly and contradictory landscapes. (Indeed, perhaps the desire to anthropomorphize machines is itself an ironic example of finding patterns where none exist.)

And it’s tempting to further extrapolate the process.

What happens when programs take in images, text, other sensory data—eventually rich experiences more akin to our own? Can a process like inceptionism incite them to remix these experiences into original ideas? Where do we draw the line between human and machine creativity?

Ultimately, it’s a circular debate, and a distinction impossible to definitively prove.

At the least, as computers get better at abstract concepts, they’ll help scientists or artists find new ideas. And maybe along the way we’ll gain new insights into the inner workings of our own creative processes.

For now, we can enjoy these first few surprising baby steps.

Image Credit: Google Research