Interpersonal communication just got a lot more intimate.

So intimate, in fact, that two strangers — physically separated by a mile — can literally get into each other’s heads to solve problems together, using only their brain waves, a special computer interface and the internet.

The results, published last week in PLOS One, are the latest push towards engineering highly sophisticated human brain-to-brain interfaces (BBIs) that directly link up the consciousness of human beings, thus eschewing the need for language or non-verbal signs to get our messages across vast distances of space.

Sounds fantastical? You decide — here’s how the setup worked.

Ten participants, aged between 19 and 39, were randomly sorted into five pairs to play a game similar to 20 questions. In each pair, one participant picked out an object from a list of eight choices, and the other tried to guess the object using a series of yes-or-no questions.

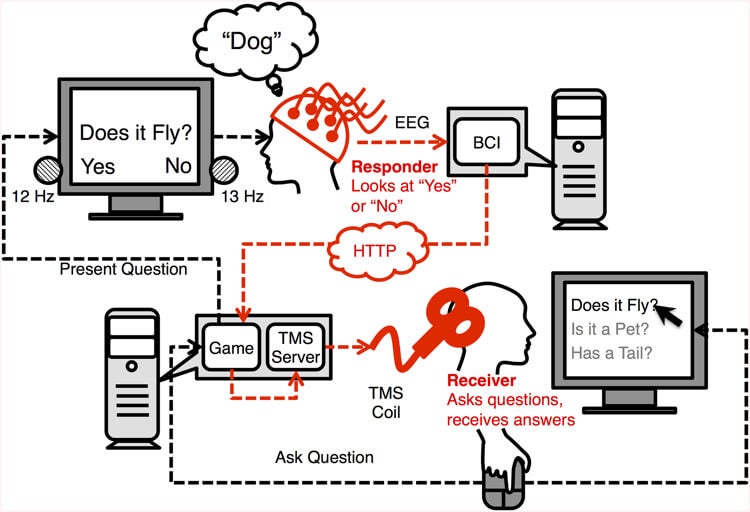

Scientists hooked up the first group of participants, dubbed the “respondents,” with an electroencephalography (EEG) cap that captures and records their brain waves.

The second group, or the “inquirers,” sat in a dark room on campus roughly a mile away, wearing heavy-duty earplugs to reduce any stimulation from their environment.

Their heads were locked in place by a two-pronged headset, with a magnetic coil placed over the visual cortex. The coil, shaped like a figure eight on top of a short handle, generates magnetic fields of various intensities, which in turn stimulate the brain. When the intensity reached a certain threshold, the inquirers saw a bright flash of light in the corner of their eye called a phosphene.

Each game started with the respondent picking one object from the list of eight (say, a dog), which came with a set of associated yes-no questions, such as “Can it fly?”, “Is it a mammal?” and “Is it a pet?”.

With a click of the inquirer’s mouse, the first question was sent to the respondent’s computer screen. Time was tight: the respondent had only two seconds to read the question and one second to answer with their eyes.

To do so, they looked at one of two LED lights that flashed at different frequencies, which signaled either yes or no. Each light stimulus induced a different pattern of brain activation in the respondents, which was picked up by EEG.
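
For readers who want a feel for how a flickering light can be read out of a brain recording, here is a minimal sketch. It is not the study's actual pipeline. The two flicker frequencies, the sampling rate and the synthetic "EEG" trace are all illustrative assumptions; the idea is simply to compare signal power at the two candidate frequencies and pick the stronger one.

```python
import math

# Assumed, illustrative flicker frequencies; the article does not give the real ones.
YES_HZ = 15.0
NO_HZ = 12.0


def power_at(signal, freq_hz, sample_rate_hz):
    """Power of `signal` at one frequency, via a single-bin discrete Fourier sum."""
    re = sum(x * math.cos(2 * math.pi * freq_hz * n / sample_rate_hz)
             for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
             for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal)


def decode_answer(signal, sample_rate_hz):
    """Return 'yes' or 'no' depending on which flicker frequency dominates."""
    yes_power = power_at(signal, YES_HZ, sample_rate_hz)
    no_power = power_at(signal, NO_HZ, sample_rate_hz)
    return "yes" if yes_power > no_power else "no"


def synthetic_eeg(attended_hz, sample_rate_hz=250, seconds=1.0):
    """Toy stand-in for a recording: the attended flicker plus a 10 Hz background rhythm."""
    n_samples = int(sample_rate_hz * seconds)
    return [math.sin(2 * math.pi * attended_hz * n / sample_rate_hz)
            + 0.5 * math.sin(2 * math.pi * 10.0 * n / sample_rate_hz)
            for n in range(n_samples)]


if __name__ == "__main__":
    print(decode_answer(synthetic_eeg(YES_HZ), 250))
    print(decode_answer(synthetic_eeg(NO_HZ), 250))
```

Real recordings are far noisier than this toy signal, which is part of why the one-second answer window makes the task hard.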

This information was then sent over the internet to the inquirer to power the magnetic coil. If the answer was yes, the stimulation was strong enough to induce bright phosphenes; if the response was no, the inquirer saw nothing.

In this way, the respondent’s answer narrowed the list of potential answers for the inquirer, who then shot another question back to the respondent. The question-and-answer cycle was repeated three times for each trial to get to the final answer.
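
The narrowing logic can be sketched in a few lines. The object list and trait table below are hypothetical (the article names only the dog and the three sample questions), and this toy list has seven items rather than the study's eight. Three yes-no answers can tell apart at most 2 × 2 × 2 = 8 objects, which is why three questions are enough for a list of that size.

```python
QUESTIONS = ["Can it fly?", "Is it a mammal?", "Is it a pet?"]

# Hypothetical candidates; each tuple holds the true answers to QUESTIONS, in order.
CANDIDATES = {
    "shark":    (False, False, False),
    "goldfish": (False, False, True),
    "wolf":     (False, True,  False),
    "dog":      (False, True,  True),
    "eagle":    (True,  False, False),
    "parrot":   (True,  False, True),
    "bat":      (True,  True,  False),
}


def narrow(candidates, question_index, answer):
    """Keep only the candidates whose trait matches the received yes/no answer."""
    return {name: traits for name, traits in candidates.items()
            if traits[question_index] == answer}


def play(target):
    """Ask all three questions about `target` and return the surviving candidates."""
    remaining = dict(CANDIDATES)
    for index in range(len(QUESTIONS)):
        answer = CANDIDATES[target][index]  # the respondent's truthful answer
        remaining = narrow(remaining, index, answer)
    return sorted(remaining)


if __name__ == "__main__":
    print(play("dog"))
```

In this sketch every answer arrives intact. In the experiment, a single misread phosphene would send the inquirer to the wrong object.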

Each pair played 20 rounds, with a random mixture of 10 real games — in which a “yes” answer generated a phosphene — and 10 control games, in which the magnetic coil went through all the “yes” motions, but didn’t actually stimulate the visual cortex.

“We took many steps to make sure that people weren’t cheating,” said Andrea Stocco at the University of Washington Institute for Learning and Brain Sciences, who is the first author of the study.

The participants correctly guessed the object in 72% of the real games, compared to a measly 18% of the control ones. The actual success rate for each back-and-forth is higher, at over 90%, the researchers noted in the paper, since all three answers in a game needed to be correct in order to get to the right object.
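
A quick back-of-the-envelope check shows why the per-answer rate has to be higher than the per-game rate. The sketch below rests on two simplifying assumptions that are not from the paper: errors on the three answers are independent, and any single wrong answer leads to a wrong guess.

```python
def game_accuracy(answer_accuracy, answers_per_game=3):
    """Chance of getting every answer in a game right, assuming independent errors."""
    return answer_accuracy ** answers_per_game


def answer_accuracy_from_game(game_accuracy_value, answers_per_game=3):
    """Invert the relationship: per-answer accuracy implied by a per-game accuracy."""
    return game_accuracy_value ** (1 / answers_per_game)


# Random guessing among eight objects, for comparison with the control games.
CHANCE_LEVEL = 1 / 8

if __name__ == "__main__":
    print(round(game_accuracy(0.90), 3))              # per-game rate if each answer is 90% reliable
    print(round(answer_accuracy_from_game(0.72), 3))  # per-answer rate implied by 72% of games
    print(CHANCE_LEVEL)
```

Under these assumptions, a 90% per-answer rate gives roughly 73% of games correct, in line with the reported 72%. Pure guessing among eight objects would succeed about 12.5% of the time, somewhat below the 18% seen in the control games.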

That’s quite remarkable when considering all the things that could go haywire. The major one is correctly identifying phosphenes, noted author Dr. Chantel Prat. The inquirers are kind of “hallucinating” light flashes that can come in different shapes, such as waves, blobs or lines. It’s very weird and hard to nail down.

The respondents may not know how to answer a question, or their attention could’ve wavered, so that the recorded brain waves got muddled. After all, the brain is doing a million other things at the same time, which could confuse the EEG readings, says Prat.

This study is the third high-profile demonstration of human brain-to-brain chatter in the last few years. In another collaboration in 2013, Prat and Stocco used brain waves from one participant to control hand movement of another. In that case, the responders had no conscious awareness of the “move” signal — they simply relaxed and watched their hands dance unconsciously.

In another study published in 2014, a Spanish team used a similar BBI to transmit words between volunteers located in India and France, respectively, using a pre-determined system similar to Morse code. At two bits per minute, the communication rate was agonizingly slow.

Compared to previous studies, Stocco says, “This is the most complex brain-to-brain experiment, I think, that’s been done to date in humans. We are actually still at the beginning of the field of interface technology, and we are just mapping out the landscape so every single step is a step that opens up some new possibilities.”

But the authors concede that for now the system is very limited.

“We’re trying to move beyond the yes-no binary system to more abstract thoughts,” said Prat. One advantage of BBIs would be transmitting things that are hard to verbalize, such as emotions and states of mind, especially between people who don’t speak the same language, or between a paralyzed patient and their loved ones.

“Imagine if you could transmit the feeling of experiencing something sweet, or pass on knowledge that’s hard to verbalize,” said Prat.

Or, alternatively, imagine linking up the brain state of a focused student to one with ADHD to help the latter concentrate.

This Matrix-like scenario may be well in the future, but the team is hopeful. Last year, they received a $1 million grant from the W.M. Keck Foundation that allows them to explore “brain tutoring.” The team hopes to run this experiment in the next six months.

“Evolution has spent a colossal amount of time to find ways for us to communicate with others in the forms of behavior, speech and so on,” said Stocco. “Our goal is to bypass these translational needs, and directly pass on signals from one brain to another.”

Image Credit: Shutterstock.com; experimental images courtesy of the University of Washington and Stocco et al., 2015, PLOS One.