The Lost Robot Saga Continues: Munich

You'd think these robots would start investing in a GPS system. Recently, Singularity Hub covered the Tweenbot, a simple cardboard-wrapped automaton that was guided through New York City by the hands of New Yorkers. The next step in the lost robot evolution has appeared: Autonomous City Explorer (or ACE), a robot that navigated the mean streets of Munich just like any tourist would: by asking directions from the natives. ACE identified and queried people around it to point it in the right direction, and, against my most cynical expectations, it arrived safe and sound at its destination. (Check out the video from New Scientist at the end of the post.)

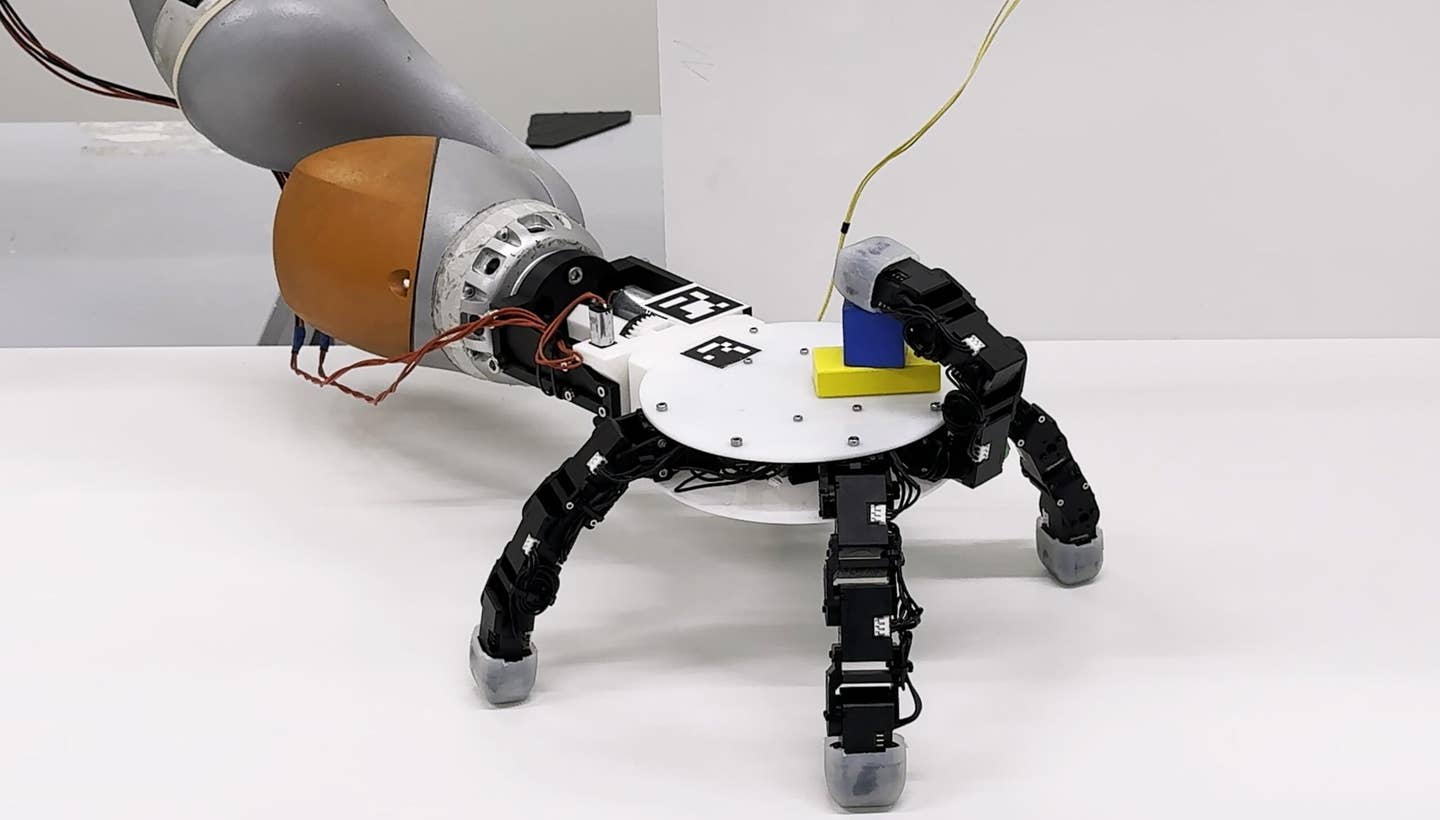

So what makes this lost robot so unique? Unlike its tween counterpart, ACE isn't just picked up and pushed in the right direction. The bot is roughly human-sized and packed with instruments to help it detect and query humans for help. First, there are cameras and image recognition software geared towards finding people and moving ACE towards them. When a human is found, an audio message is played while virtual lips move on a screen. If the human is friendly (aren't we all?), he or she uses a touch-screen to indicate his or her willingness to give directions. The human will then point in the direction ACE should go.

And ACE follows your finger! That's really kind of cool. By using multiple cameras and more image software, ACE is able to build a 3D model of the human and work out where he or she is actually pointing. Compared to a toddler, this isn't a remarkable skill, but following visual cues is really difficult for most robots out there. ACE also prompts its helper human to use the touch-screen to suggest a given path, but in the end, it can rely on just the pointing.

ACE's point-and-go navigation avoids the hassle of interpreting humanity's subjective spoken directions. "It's over there, behind that building, then you sort of take a right and keep going for a while." That's a message fit to make a robot pull its hair out. ACE just has to query enough people (38 for its maiden voyage) and it will gradually build up a map of its surroundings. Simple and effective, the ACE system allowed the robot to travel a little more than 1 km in 5 hours. Not a speedy journey, but very promising.

Just wait 'til your robot comes home!

While Tweenbot is definitely the cute one, and ACE is light years ahead in technology, both lost bots illustrate how humanity and robots are being taught to work together. According to ACE's mandate, it seeks to develop new cognitive abilities to make robots more useful in a human-rich environment. Likewise, Tweenbot was an experiment in humanity's generosity (read here: kindness to something foreign). As robots leave the factory and warehouse (sorry, KIVA), they will have to accommodate the needs of, and rely on input from, humans.

The ACE experiment showed that robot-human interaction could be successful, but it wasn't very efficient. It also demonstrated human willingness to interact with the artificial, but would this trend continue once the robot's novelty wore off? The secret is making robots more life-like (what's more human than wandering around asking for directions?) and making humans more robot-aware. Human-like robots may be destined to take a larger part in our society, but only if we decide to accept them. Experiments like ACE and Tweenbot show that the potential exists; we just have to find it. Luckily, we have people (and robots) willing to point us in the right direction.

https://c.brightcove.com/services/viewer/federated_f9/2227271001
