Hype or Hope? We’ll Soon Find Out – Leap Motion “Minority Report” Controller Ships in May

Leap Motion caused quite a stir in 2012 when they unveiled their super precise motion-sensing device, the Leap Motion Controller. (The Leap sensor is capable of detecting details as small as the head of a pin.) On Wednesday, Leap Motion announced plans to ship the first batch of pre-ordered Leap Controllers Monday, May 13th—less than a year after the tech was introduced. The device will be available in Best Buy stores May 19th.

The Leap Controller’s May launch is a touch later than the early 2013 launch originally targeted (February or March). Leap Motion CEO and co-founder, Michael Buckwald, told Singularity Hub that pushing shipment to May was in part to ensure Airspace (the firm’s newly named app store) was sufficiently stocked.

But Leap Motion also wants to ensure the core product, which will be produced in the hundreds of thousands, is as dialed in as possible, both “beautiful and well-constructed.” According to Buckwald, “The goal is for all those things to coalesce in May such that the user experience is amazing.”

First impressions are everything when the competition is a technology as familiar and comfortable as the mouse or touchpad. To maximize the probability a new alternative will be widely adopted, it needs to be as intuitive and useful as the technology it’s replacing—and hopefully even more so.

The Leap Motion Controller will be compatible with Windows 7 and 8 and Mac OS X 10.7 and 10.8. And Buckwald told us, “The goal is that the core OS and browsing control should be compelling enough by itself to justify someone getting Leap.” Think gesture-controlled point-and-click, highlighting, copy/paste, scrolling, browsing—the lot.

But Buckwald hopes those functions are just the tip of the iceberg: “Once [users] go to Airspace, they’ll realize that while, today, they and most people only use their computers to browse the web or use Word or Excel, there’s a lot more they can now do.”

In the real world, he said, it’s easy and fast to “mold a piece of clay, pick up an object, or draw something.” There is no barrier to entry. But the same digital process is very time consuming, requires practice and experience, and is therefore limited to a select group of computer-savvy professionals. Leap Motion wants to bridge that gap.

Buckwald gave us examples of just a few apps that will be in the app store.

Autodesk’s 123D suite of non-professional 3D modeling applications and its 3D animation program, Maya, will both be Leap-compatible. (The prospect of Leap-enabled Maya is intriguing. SF robotics software firm, Bot & Dolly, uses Maya to control robotic arms!)

Airspace will also include Leap-compatible Corel Painter apps and a Weather Channel app. And of course, games—Cut the Rope from ZeptoLab, a music game called Dischord from DoubleFine, first person shooters, and more.

By May, there should be a wide array of Leap-specific apps and plug-ins to make already familiar apps Leap-compatible. And the developer program is ongoing—anyone with a good idea can still apply and receive a device.

What about the future of Leap Motion? Buckwald says, “So we’d like to see this eventually embedded in tablets and projectors and appliances and cars. And in the future maybe even in things like head-mounted displays. Anywhere there’s a computer, we’d love to see Leap there.”

We at Singularity Hub particularly like the idea of controlling wearable devices, like Google Glass, with gestures—it seems better than voice recognition. Imagine if every time you see someone swiping and tapping their phone in public they were talking to it instead. (See here for a wearable gesture-control device we recently covered—it’s clunky, but with Leap-like tech could be made less intrusive.)

The idea of gesture-controlled cars is interesting because it highlights the hidden power of the interface: gesture controls can dematerialize (that is, digitize) any number of physical controllers. That could include a mouse or touch screen. But it could also extend to car dashboard knobs and buttons, steering wheels, joysticks, light switches, television remote controls, door handles, and so on.

There are still questions about just how useful the technology will be in practice. Won’t our arms get tired gesture-controlling long-use devices like computers? And if loads of these sensors are installed all over the place, how do you prevent them from misinterpreting motions, such as reading a wave goodbye as a command to turn off the lights? Will we have to be more careful with our naturally gesture-laden mode of communication?

Maybe we’ll ultimately find gesture-control works better for some tasks than others. And further along, maybe all the things these sensors dematerialize will be further dematerialized by some even more ingenious bit of tech—like a non-invasive version of the mind-control devices currently being developed for disabled folks.

Jason is editorial director at SingularityHub. He researched and wrote about finance and economics before moving on to science and technology. He's curious about pretty much everything, but especially loves learning about and sharing big ideas and advances in artificial intelligence, computing, robotics, biotech, neuroscience, and space.

Related Articles

This Light-Powered AI Chip Is 100x Faster Than a Top Nvidia GPU

How Scientists Are Growing Computers From Human Brain Cells—and Why They Want to Keep Doing It

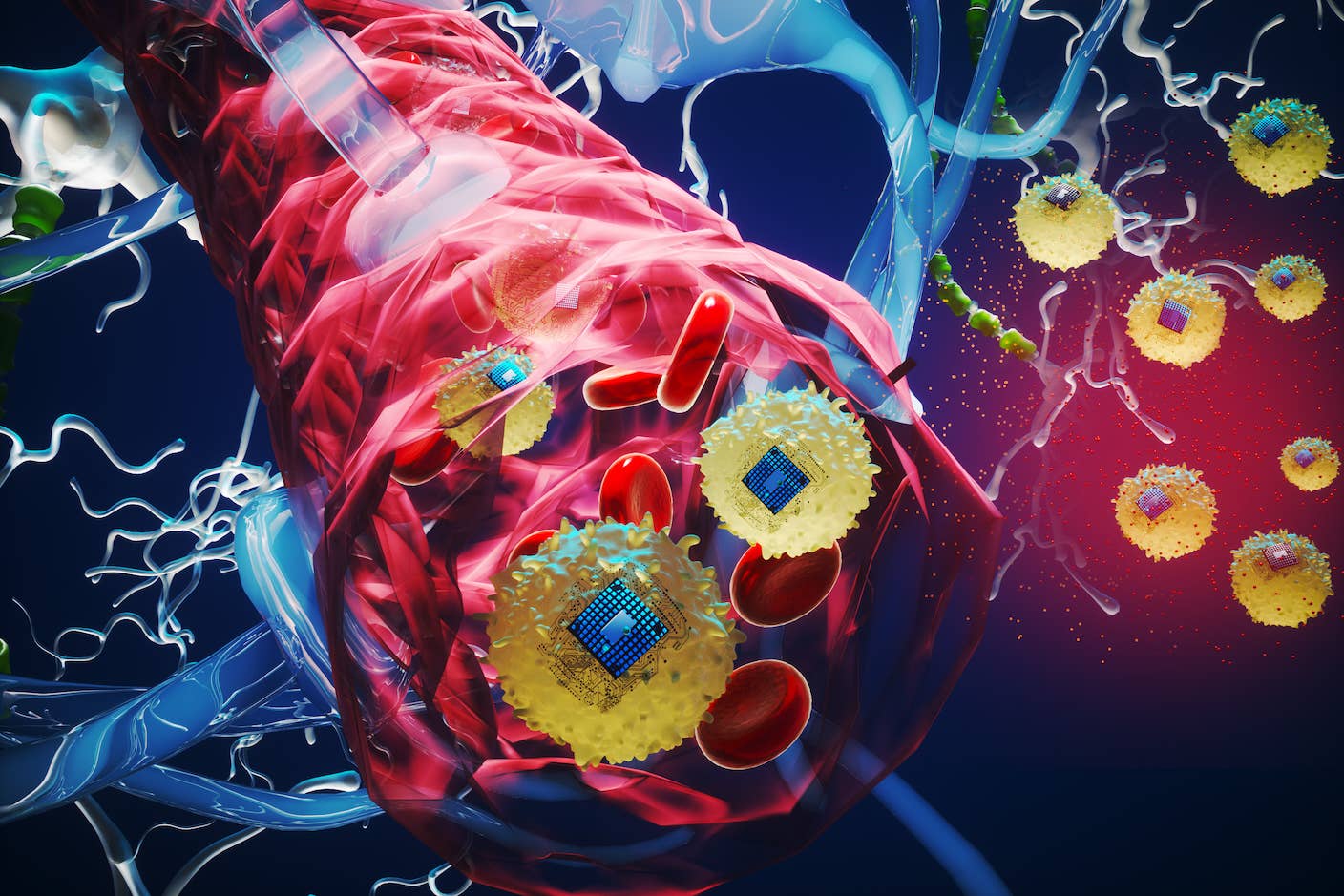

These Brain Implants Are Smaller Than Cells and Can Be Injected Into Veins

What we’re reading