Exponential Finance: Ray Kurzweil Stresses Humanity’s ‘Moral Imperative’ in Developing Artificial Intelligence

Come to Singularity Hub for the latest from the frontiers of finance and technology as we bring you coverage of Singularity University and CNBC's Exponential Finance Summit.

“Technology is a double-edged sword...every technology has had its promise and peril.”

Appearing via a telepresence robot, Ray Kurzweil took the stage at the Exponential Finance conference to address questions posed by CNBC’s Bob Pisani. Though the conversation covered a slew of topics from quantum computing to uploading the human mind, it returned repeatedly to artificial intelligence and its implications for humanity.

Unfazed, Kurzweil at one point stated his position succinctly: “We have a moral imperative to continue reaping the promise [of artificial intelligence] while we control the peril. I tend to be optimistic, but that doesn't mean we should be lulled into a lack of concern.”

Kurzweil’s hopeful yet cautious point of view on artificial intelligence stands in contrast to that of Elon Musk, who caused a stir last year when he tweeted, “Worth reading Superintelligence by Bostrom. We need to be super careful with AI. Potentially more dangerous than nukes.” In the book, Bostrom proposes scenarios in which humans meet an untimely end because an artificial intelligence is simply trying to fulfill its goals.

Troubled by the attention this tweet attracted, Kurzweil wanted to set the record straight: “I'm not sure where Musk is coming from. He's a major investor in artificial intelligence. He was saying that we could have superintelligence in 5 years. That's a very radical position—I don't know any practitioners who believe that.” He continued, "We have these emerging existential risks and we also have emerging, so far, effective ways of dealing with it...I think this concern will die down as we see more and more positive benefits of artificial intelligence and gain more confidence that we can control it.”

The co-founder of Singularity University and now Director of Engineering at Google offered his long-held belief about what the future holds for humans and artificial intelligence: convergence.

"We are going to directly merge with it, we are going to become the AIs," he stated, adding, "We've always used our technology to extend our reach...That's the nature of being human, to transcend our limitations, and there's always been dangers."

In his estimate, the current exponential growth of computing will continue until human-level intelligence is achieved around 2029. Merging with artificial intelligence will follow sometime in the 2030s, when humans will be "a hybrid of biological and nonbiological thinking," with our brains connected to the cloud to harness massive computational power. Beyond that? "As you get to the late 2030s or the 2040s, our thinking will be predominately nonbiological. The nonbiological part will ultimately be intelligent and have such vast capacity it'll be able to model, simulate, and understand fully the biological part. We will be able to fully backup our brains."

This mind-boggling future raised the question: what is Kurzweil working on right now at Google?

"[Google] is a pretty remarkable company," he said. "The resources are incredible: computation, data, algorithms, and particularly the talent there. I think it's really the only place I can do my project which has been a 50-year quest to recreate human intelligence...I think we are very much on schedule to achieve human levels of intelligence by 2029."

He explained that his team is using numerous AI techniques to deal with language and learning and, in the process, collaborating with Google's DeepMind, the recently acquired startup that developed a neural network that learned to play video games successfully.

"Mastering intelligence is so difficult that we need to throw everything we have at it."

There's a good chance we may not find out the details of Kurzweil's work until 2017. During the discussion on the future of computing and whether Moore's law was truly in jeopardy, Kurzweil took the opportunity to announce a sequel to The Singularity Is Near, aptly titled The Singularity Is Nearer. It is planned for release in 18 months and will include updated charts. It's likely that the text will also aim to showcase his prediction track record, akin to a report he released in 2010 titled "How My Predictions Are Faring."

Close to the end of the interview, Kurzweil offered a simple reason why he's optimistic about AI: we have no other choice, unless we are willing to accept a scenario in which a totalitarian government controls AI. As he put it: "The best way to keep [artificial intelligence] safe is in fact widely distributed, which is what we are seeing in the world today."

David started writing for Singularity Hub in 2011 and served as editor-in-chief of the site from 2014 to 2017 and SU vice president of faculty, content, and curriculum from 2017 to 2019. His interests cover digital education, publishing, and media, but he'll always be a chemist at heart.

Related Articles

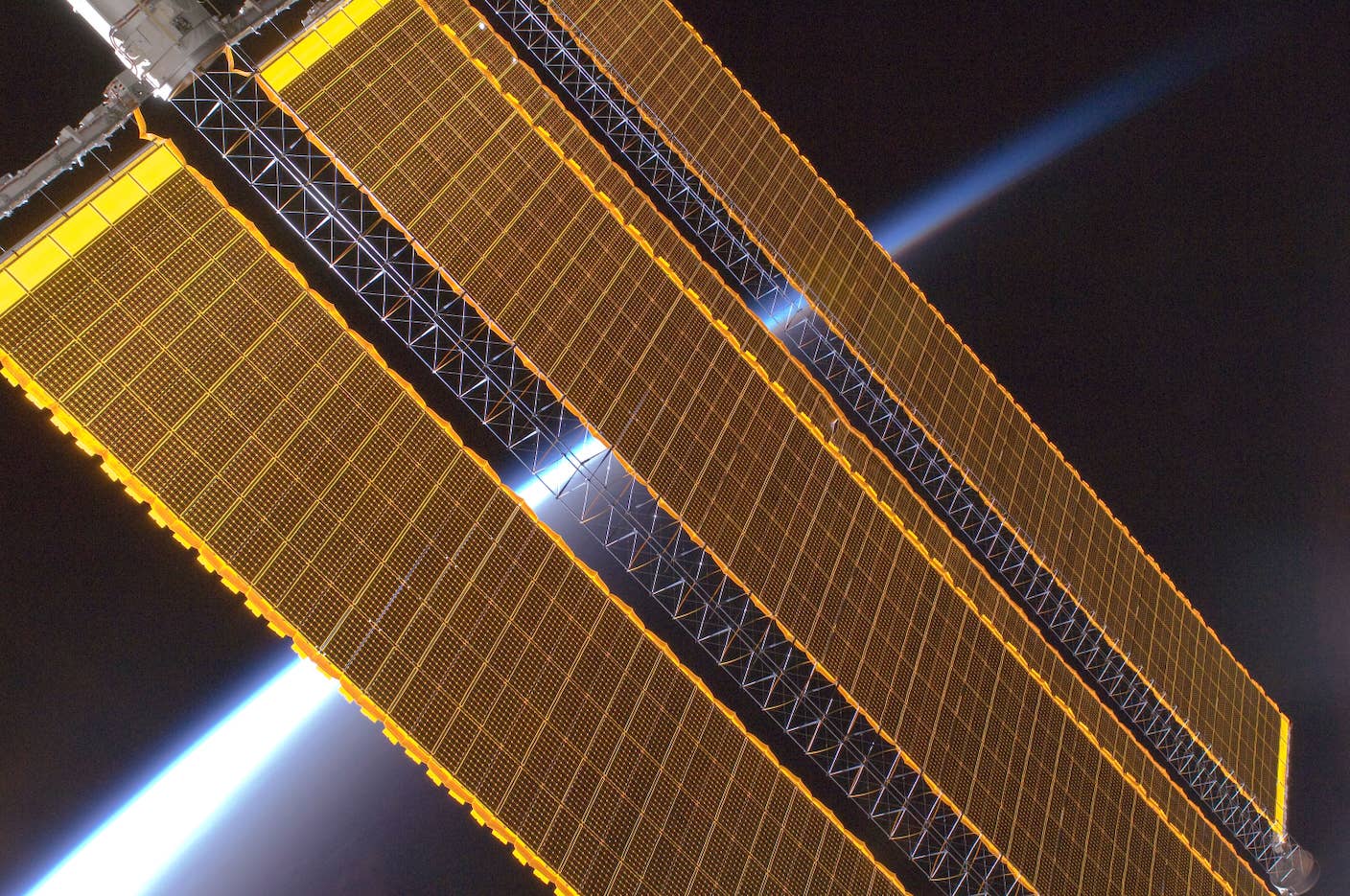

Data Centers in Space: Will 2027 Really Be the Year AI Goes to Orbit?

Hugging Face Says AI Models With Reasoning Use 30x More Energy on Average

How Scientists Are Growing Computers From Human Brain Cells—and Why They Want to Keep Doing It

What we’re reading