Forget Police Sketches: Researchers Perfectly Reconstruct Faces by Reading Brainwaves


Picture this: you’re sitting in a police interrogation room, struggling to describe the face of a criminal to a sketch artist. You pause, wrinkling your brow, trying to remember the distance between his eyes and the shape of his nose.

Suddenly, the detective offers you an easier way: would you like to have your brain scanned instead, so that machines can automatically reconstruct the face in your mind's eye from reading your brain waves?

Sound fantastical? It’s not. After decades of work, scientists at Caltech may have finally cracked our brain’s facial recognition code. Using brain scans and direct recordings from individual neurons in macaque monkeys, the team showed that cells in specialized “face patches” respond to specific combinations of facial features.

Like dials on a music mixer, each patch is fine-tuned to a particular set of visual information. Those signals then combine in different proportions to form a holistic representation of every distinctive face.

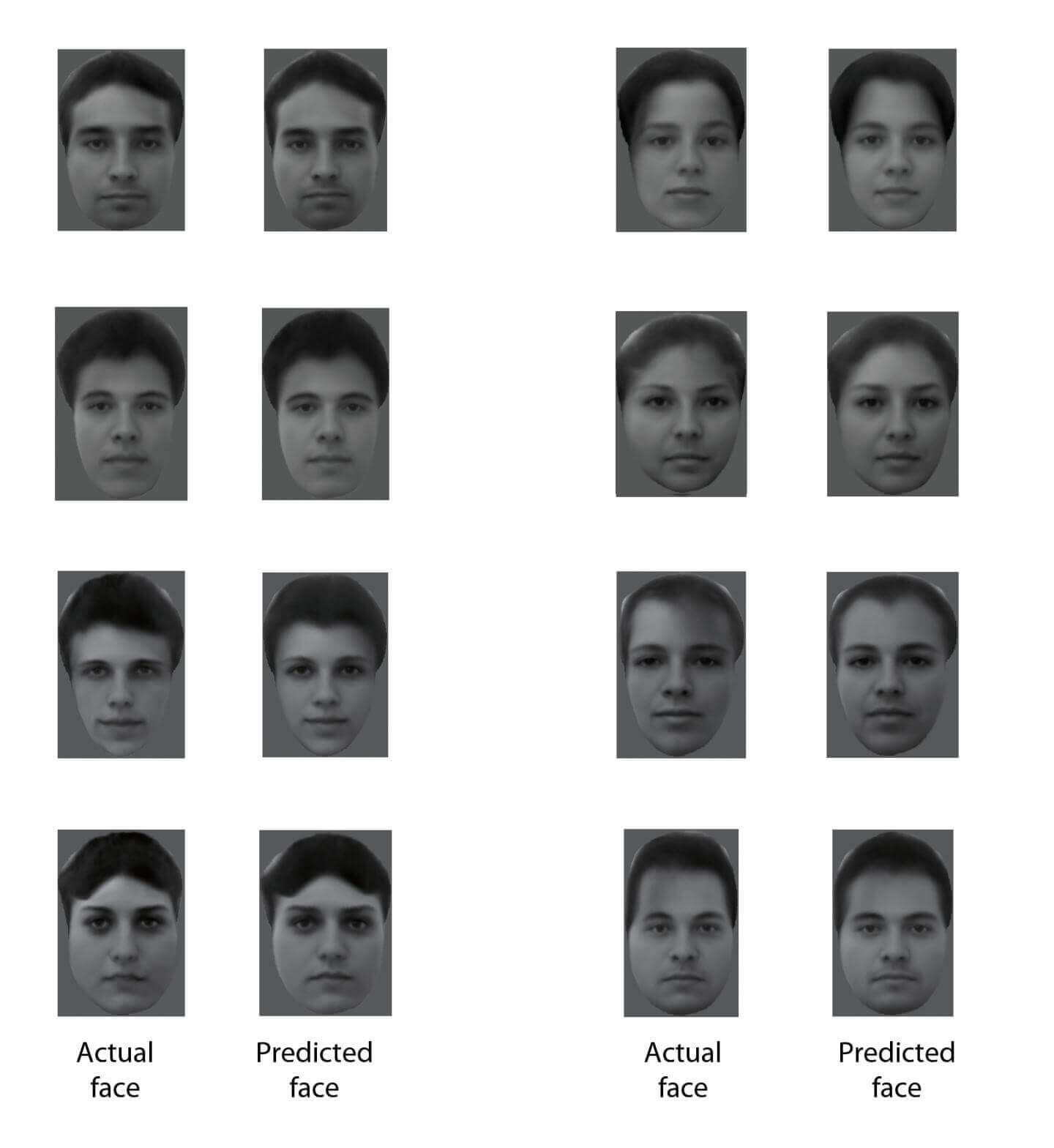

The values of each dial were so predictable that scientists were able to recreate a face the monkey saw simply by recording the electrical activity of roughly 200 brain cells. Placed side by side, the reconstruction and the actual photo were nearly indistinguishable.

“This was mind-blowing,” says lead author Dr. Doris Tsao.

Even more incredibly, the work “completely kills” the dominant theory of facial processing, potentially ushering in “a revolution in neuroscience,” says Dr. Rodrigo Quian Quiroga, a neuroscientist at the University of Leicester who was not involved in the work.

What’s in a face?

On average, humans are powerful face detectors, beating even the most sophisticated face-tagging algorithms.

Most of us are equipped with the uncanny ability to spot a familiar set of features in a crowd of eyes, noses and mouths. We can unconsciously process a new face in milliseconds, and, when exposed to that face over and over, often retain that memory for decades to come.

Under the hood, however, facial recognition is anything but simple. Why is it that we can detect a face under dim lighting, half obscured or at a weird angle, but machines can’t? What makes people’s faces distinctively their own?

When light reflected off a face hits your retina, the information passes through several layers of neurons before it reaches a highly specialized region of the visual cortex: the inferotemporal (IT) cortex, a strip of tissue along the lower part of the temporal lobe. This region is home to “face cells”: groups of neurons that respond to faces but not to objects such as houses or landscapes.

In the early 2000s, while recording from epilepsy patients with electrodes implanted into their brains, Quian Quiroga and colleagues found that face cells are particularly picky. So-called “Jennifer Aniston” cells, for example, would only fire in response to photos of her face and her face alone. The cells quietly ignored all other images, including those of her with Brad Pitt.

This led to a prevailing theory that still dominates the field: that the brain contains specialized “face neurons” that only respond to one or a few faces, but do so holistically.

But there’s a problem: the theory doesn’t explain how we process new faces, nor does it get into the nitty-gritty of how faces are actually encoded inside those neurons.

Mathematical faces

In a stroke of luck, Tsao and team blew open the “black box” of facial recognition while working on a different problem: how to describe a face mathematically, with a matrix of numbers.

Using a set of 200 faces from an online database, the team first identified “landmark” features and labeled them with dots. This created a large set of abstract dot-to-dot faces, similar to what filmmakers do during motion capture.

Then, using a statistical method called principal component analysis, the scientists extracted 25 measurements that best represented a given face. These measurements were mostly holistic: one “shape dimension,” for example, encodes changes in the hairline, the width of the face, and the height of the eyes.
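For readers curious what that step looks like, here is a minimal NumPy sketch of principal component analysis applied to landmark coordinates. It is an illustration under stated assumptions, not the team’s code: the landmark data are random stand-ins, and the 80 landmarks per face are a hypothetical number (the article gives only the 200 faces and the 25 measurements).

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 faces, each described by 80 (x, y) landmark dots,
# flattened into one row of 160 numbers per face.
n_faces, n_landmarks, n_components = 200, 80, 25
landmarks = rng.normal(size=(n_faces, n_landmarks * 2))

# PCA: center the data on the average face, then take the top
# principal directions from a singular value decomposition.
mean_face = landmarks.mean(axis=0)
centered = landmarks - mean_face
_, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
components = vt[:n_components]          # 25 "shape dimensions", shape (25, 160)

# Each face is now summarized by 25 numbers: its coordinates along those dimensions.
scores = centered @ components.T        # shape (200, 25)

# Fraction of the landmark variance captured by the 25 dimensions.
explained = (singular_values[:n_components] ** 2).sum() / (singular_values ** 2).sum()
print(scores.shape, round(float(explained), 3))
```

With real landmark data, the first few dimensions would typically capture most of the variation; with random stand-in data the captured fraction is much lower.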

This figure shows eight different real faces that were presented to a monkey, together with reconstructions made by analyzing electrical activity from 205 neurons recorded while the monkey was viewing the faces. Credit: Doris Tsao

By varying these “shape dimensions,” the authors generated a set of 2,000 black-and-white faces with slight differences in the distance between the brows, skin texture, and other facial features.
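Generating new faces amounts to running that step in reverse: choose a value for each dimension, then map the values back to landmark positions. The sketch below works under hypothetical assumptions: a random orthonormal basis stands in for a fitted PCA model, and the spread along each dimension is made up.

```python
import numpy as np

rng = np.random.default_rng(1)

n_coords, n_dims, n_generated = 160, 25, 2000

# Stand-ins for a fitted model: an average face and 25 orthonormal
# directions (in practice these would come from PCA on real landmarks).
mean_face = rng.normal(size=n_coords)
basis, _ = np.linalg.qr(rng.normal(size=(n_coords, n_dims)))   # shape (160, 25)
dim_std = np.linspace(3.0, 0.5, n_dims)  # hypothetical spread along each dimension

# Draw 2,000 random settings of the 25 dials and map each back to landmark space.
coeffs = rng.normal(size=(n_generated, n_dims)) * dim_std
faces = mean_face + coeffs @ basis.T                          # shape (2000, 160)

# Nudging a single dimension changes the face only along that direction.
tweaked = faces[0] + 1.5 * basis[:, 0]
```

Because the dimensions are orthonormal, each generated face’s dial settings can be read back exactly by projecting onto the basis, which is what lets the dimensions behave like independent dials.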

Division of labor

In macaque monkeys with electrodes implanted into their brains, the team recorded from three “face patches”—brain areas that respond especially to faces—while showing the monkeys the computer-generated faces.

As it turns out, each face neuron cared about only a single set of features. A neuron tuned to hairline and skinny eyebrows, for example, fired more or less strongly as those features varied across faces. If two faces had similar hairlines but different mouths, those “hairline neurons” responded the same way to both.
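A simple way to picture this is a linear model in which a cell’s firing rate depends only on where a face sits along one preferred direction in face space. In the sketch below, the preferred direction, baseline, and gain are invented for illustration; the point is that two faces differing only along a perpendicular direction (same “hairline,” different “mouth”) drive the cell identically.

```python
import numpy as np

rng = np.random.default_rng(2)
n_dims = 25

# Hypothetical cell: a preferred direction in a 25-dimensional face space
# (say, mostly "hairline"), a baseline firing rate, and a gain.
axis = rng.normal(size=n_dims)
axis /= np.linalg.norm(axis)
baseline, gain = 10.0, 4.0   # spikes/s; illustrative values only

def firing_rate(face):
    """Linear response to the face's position along the preferred axis."""
    return baseline + gain * (face @ axis)

face_a = rng.normal(size=n_dims)

# Build a change that is perpendicular to the preferred axis
# (a different "mouth", the same "hairline").
change = rng.normal(size=n_dims)
change -= (change @ axis) * axis
face_b = face_a + change

print(firing_rate(face_a), firing_rate(face_b))  # equal up to rounding
```

A change along the preferred axis, by contrast, shifts the response in proportion to its size. The sketch ignores real-world details such as firing rates being unable to go negative.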

What’s more, cells in different face patches processed complementary information. The anterior medial face patch, for example, mainly responded to what the team dubs “appearance”: qualities such as skin tone and texture that remain once shape is set aside. Other patches fired up to information about shape, such as the distances between features, the curvature of the nose or the length of the mouth.


In a way, these feature neurons are like compasses: they only activate when the measurement is off from a set point (magnetic north, for a compass). Scientists aren’t quite sure how each cell determines its set point. However, combining all the “set points” generates a “face space”—a sort of average face, or a face atlas.

From there, when presented with a new face, each neuron measures the difference between a feature (the distance between the eyes, for example) and the face atlas. Combine all those differences, and voilà: you have a representation of a new face.
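The compass analogy translates into a small population model: subtract the average face, then let each cell report how far the remainder extends along its own preferred axis. The sketch assumes 200 hypothetical cells with random axes in a 25-dimensional face space. Because there are more cells than dimensions, the combined readings pin down the new face exactly in this noise-free toy.

```python
import numpy as np

rng = np.random.default_rng(3)
n_dims, n_cells = 25, 200

average_face = rng.normal(size=n_dims)          # the "face atlas" (hypothetical)
axes = rng.normal(size=(n_cells, n_dims))       # one preferred axis per cell
axes /= np.linalg.norm(axes, axis=1, keepdims=True)

def population_response(face):
    """Each cell measures the face's offset from the average along its axis."""
    return axes @ (face - average_face)         # shape (n_cells,)

new_face = rng.normal(size=n_dims)
responses = population_response(new_face)

# The average face itself is the "set point": every cell reads zero.
print(np.allclose(population_response(average_face), 0.0))  # True

# Combining all the differences: solve for the offset that explains them.
offset, *_ = np.linalg.lstsq(axes, responses, rcond=None)
recovered = average_face + offset
print(np.allclose(recovered, new_face))  # True
```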

Reading faces

Once the team figured out this division of labor, they constructed a mathematical model to predict how the patches process new faces.

Here’s the cool part: the medley of features that best covered the entire shape and look of a face was fairly abstract, including the distance between the brows. Sound familiar? That’s because the brain’s preferred set of features was similar to the “landmarks” that the team had first intuitively labeled to generate their face database.

“We thought we had picked it out of the blue,” says Tsao.

But it makes sense. “If you look at methods for modeling faces in computer vision, almost all of them...separate out the shape and appearance,” she explains. “The mathematical elegance of the system is amazing.”

The team showed the monkeys a series of new faces while recording from roughly 200 neurons in the face patches. Using their mathematical model, they then calculated which features each neuron encodes and how those features combine.

The result? A “stunningly accurate” reconstruction of the faces the monkeys were seeing. So accurate, in fact, that the algorithm-generated faces were nearly indistinguishable from the originals.

“It really speaks to how compact and efficient this feature-based neural code is,” says Tsao, referring to the fact that such a small set of neurons contained sufficient information for a full face.
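The decoding logic described above can be sketched with ordinary least squares. Everything here is simulated and hypothetical: 200 cells that respond linearly to 25 face dimensions plus noise, 2,000 training faces, and 8 held-out test faces. A linear decoder is fitted on the training faces and then used to reconstruct faces it never saw; this is one plausible linear approach, not necessarily the team’s exact procedure.

```python
import numpy as np

rng = np.random.default_rng(4)
n_dims, n_cells = 25, 200
n_train, n_test = 2000, 8

# Simulated ground truth (hypothetical): each cell responds linearly to the
# face's features along its own axis, plus a baseline and some noise.
true_axes = rng.normal(size=(n_cells, n_dims))
baselines = rng.uniform(5.0, 15.0, size=n_cells)

def record(features, noise=0.5):
    """Simulated firing rates, shape (n_faces, n_cells)."""
    clean = baselines + features @ true_axes.T
    return clean + noise * rng.normal(size=clean.shape)

# 1) Training: show known faces, record responses, and fit a linear decoder
#    (responses plus a constant column -> features) by least squares.
train_feats = rng.normal(size=(n_train, n_dims))
train_resp = record(train_feats)
X_train = np.column_stack([train_resp, np.ones(n_train)])
decoder, *_ = np.linalg.lstsq(X_train, train_feats, rcond=None)  # (n_cells + 1, n_dims)

# 2) Testing: reconstruct the features of new faces from responses alone.
test_feats = rng.normal(size=(n_test, n_dims))
test_resp = record(test_feats)
X_test = np.column_stack([test_resp, np.ones(n_test)])
reconstructed = X_test @ decoder

# Correlation between true and reconstructed features, one value per face.
corr = [np.corrcoef(t, r)[0, 1] for t, r in zip(test_feats, reconstructed)]
print(np.round(corr, 3))
```

With far more cells than face dimensions, the noise in individual cells largely averages out, which is one way to see why a couple of hundred neurons can carry enough information for a full face.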

Forward facing

Tsao’s work doesn’t paint the full picture. The team only recorded from two out of six face patches, leaving open the possibility that other types of information processing happen alongside Tsao’s model.

But the study breaks the “black box” norm that’s plagued the field for decades.

“Our results demonstrate that at least one subdivision of IT cortex can be explained by an explicit, simple model, and ‘black box’ explanations are unnecessary,” the authors conclude (pretty sassy for an academic paper!).

While there aren’t any immediate applications, the new findings could eventually guide the development of brain-machine interfaces that directly stimulate the visual cortex to restore sight to the blind. They could also help physicians understand why some people suffer from “face blindness,” and guide the design of prosthetics to help them.

Image Credit: Doris Tsao

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
