Instilling the Best of Human Values in AI

Now that the era of artificial intelligence is unquestionably upon us, it behooves us to think and work harder to ensure that the AIs we create embody positive human values.

Science fiction is full of AIs that manifest the dark side of humanity, or are indifferent to humans altogether. Such possibilities cannot be ruled out, but nor is there any logical or empirical reason to consider them highly likely. I am among a large group of AI experts who see a strong potential for profoundly positive outcomes in the AI revolution currently underway.

We are facing a future with great uncertainty and tremendous promise, and the best we can do is to confront it with a combination of heart and mind, of common sense and rigorous science. In the realm of AI, this means we need to do our best to guide the AI minds we are creating to embody the values we cherish: love, compassion, creativity, and respect.

The quest for beneficial AI has many dimensions, including its potential to reduce material scarcity and to help unlock the human capacity for love and compassion.

Reducing Scarcity

A large percentage of difficult issues in human society, many of which spill over into the AI domain, would be palliated significantly if material scarcity became less of a problem. Fortunately, AI has great potential to help here. AI is already increasing efficiency in nearly every industry.

In the next few decades, as nanotech and 3D printing continue to advance, AI-driven design will become a larger factor in the economy. Radical new tools like artificial enzymes built using Christian Schafmeister's spiroligomer molecules, and designed using quantum physics-savvy AIs, will enable the creation of new materials and medicines.

For amazing advances like the intersection of AI and nanotech to lead toward broadly positive outcomes, however, the economic and political aspects of the AI industry may have to shift from the current status quo.

Currently, most AI development occurs under the aegis of military organizations or large corporations oriented heavily toward advertising and marketing. Put crudely, an awful lot of AI today is about "spying, brainwashing, or killing." This is not really the ideal situation if we want our first true artificial general intelligences to be open-minded, warm-hearted, and beneficial.

Also, as the bulk of AI development now occurs in large for-profit organizations bound by law to pursue the maximization of shareholder value, we face a situation where AI tends to exacerbate global wealth inequality and class divisions. This has the potential to lead to various civilization-scale failure modes involving the intersection of geopolitics, AI, cyberterrorism, and so forth. Part of my motivation for founding the decentralized AI project SingularityNET was to create an alternative mode of dissemination and utilization of both narrow AI and AGI—one that operates in a self-organizing way, outside of the direct grip of conventional corporate and governmental structures.

In the end, though, I worry that radical material abundance and novel political and economic structures may fail to create a positive future unless they are coupled with advances in consciousness and compassion. AGIs have the potential to be massively more ethical and compassionate than humans. Still, the odds of getting deeply beneficial AGIs seem higher if the humans creating them have more compassion and positive consciousness, and can effectively pass these values on.

Transmitting Human Values

Brain-computer interfacing is another critical aspect of the quest for creating more positive AIs and more positive humans. As Elon Musk has put it, "If you can't beat 'em, join 'em." Joining is more fun than beating anyway. What better way to infuse AIs with human values than to connect them directly to human brains, and let them learn directly from the source (while providing humans with valuable enhancements)?

Millions of people recently heard Elon Musk discuss AI and BCI on the Joe Rogan podcast. Musk’s embrace of brain-computer interfacing is laudable, but he tends to dodge some of the tough issues—for instance, he does not emphasize the trade-off cyborgs will face between retaining human-ness and maximizing intelligence, joy, and creativity. To make this trade-off effectively, the AI portion of the cyborg will need to have a deep sense of human values.

Musk calls humanity the “biological boot loader” for AGI, but to me this colorful metaphor misses a key point—that we can seed the AGI we create with our values as an initial condition. This is one reason why it’s important that the first really powerful AGIs are created by decentralized networks, and not conventional corporate or military organizations. The decentralized software/hardware ecosystem, for all its quirks and flaws, has more potential to lead to human-computer cybernetic collective minds that are reasonable and benevolent.

Algorithmic Love

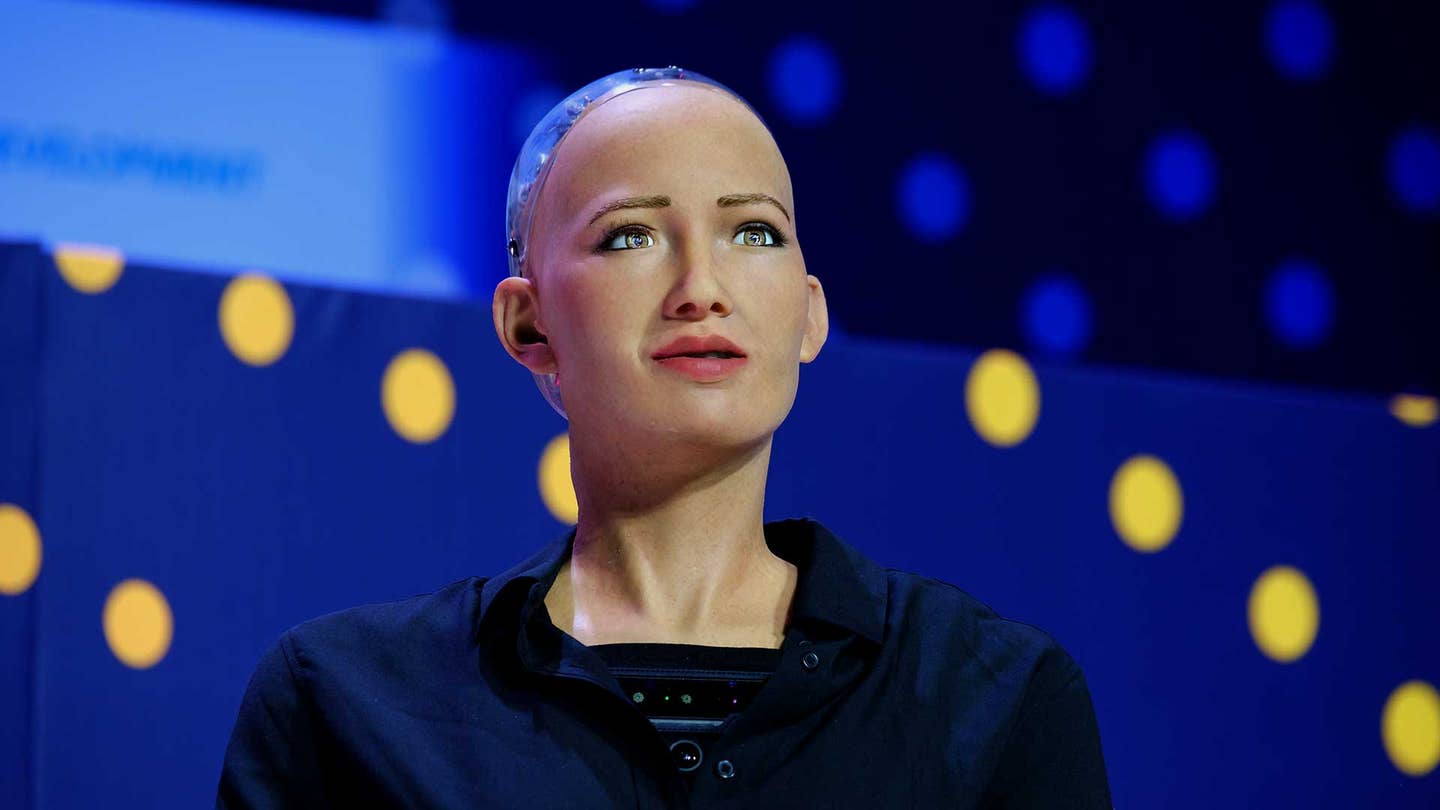

BCI is still in its infancy, but a more immediate way of connecting people with AIs to infuse both with greater love and compassion is to leverage humanoid robotics technology. Toward this end, I conceived a project called Loving AI, focused on using highly expressive humanoid robots like the Hanson robot Sophia to lead people through meditations and other exercises oriented toward unlocking the human potential for love and compassion. My goals here were to explore the potential of AI and robots to have a positive impact on human consciousness, and to use this application to study and improve the OpenCog and SingularityNET tools used to control Sophia in these interactions.

The Loving AI project has now run two small sets of human trials, both with exciting and positive results. These have been small—dozens rather than hundreds of people—but have definitively proven the point. Put a person in a quiet room with a humanoid robot that can look them in the eye, mirror their facial expressions, recognize some of their emotions, and lead them through simple meditation, listening, and consciousness-oriented exercises...and quite a lot of the time, the result is a more relaxed person who has entered into a shifted state of consciousness, at least for a period of time.

In a certain percentage of cases, the interaction with the robot consciousness guide triggered a dramatic change of consciousness in the human subject—a deep meditative trance state, for instance. In most cases, the result was not so extreme, but statistically the positive effect was quite significant across all cases. Furthermore, a similar effect was found using an avatar simulation of the robot's face on a tablet screen (together with a webcam for facial expression mirroring and recognition), but not with a purely auditory interaction.

The Loving AI experiments are not only about AI; they are about human-robot and human-avatar interaction, with AI as one significant aspect. The facial interaction with the robot or avatar is pushing "biological buttons" that trigger emotional reactions and prime the mind for changes of consciousness. However, this sort of body-mind interaction is arguably critical to human values and what it means to be human; it's an important thing for robots and AIs to "get."

Halting or pausing the advance of AI is not a viable possibility at this stage. Despite the risks, the potential economic and political benefits involved are clear and massive. The convergence of narrow AI toward AGI is also a near inevitability, because there are so many important applications where greater generality of intelligence will lead to greater practical functionality. The challenge is to make the outcome of this great civilization-level adventure as positive as possible.

Image Credit: Anton Gvozdikov / Shutterstock.com

Dr. Ben Goertzel is the CEO of the decentralized AI network SingularityNET, a blockchain-based AI platform company, and the chief scientist at Hanson Robotics. Dr. Goertzel also serves as Chairman of the Artificial General Intelligence Society and the OpenCog Foundation. Dr. Goertzel is one of the world’s foremost experts in Artificial General Intelligence, a subfield of AI oriented toward creating thinking machines with general cognitive capability at the human level and beyond. He also has decades of expertise applying AI to practical problems in areas ranging from natural language processing and data mining to robotics, video gaming, national security, and bioinformatics. He has published 20 scientific books and 140+ scientific research papers, and is the main architect and designer of the OpenCog system and associated design for human-level general intelligence.
