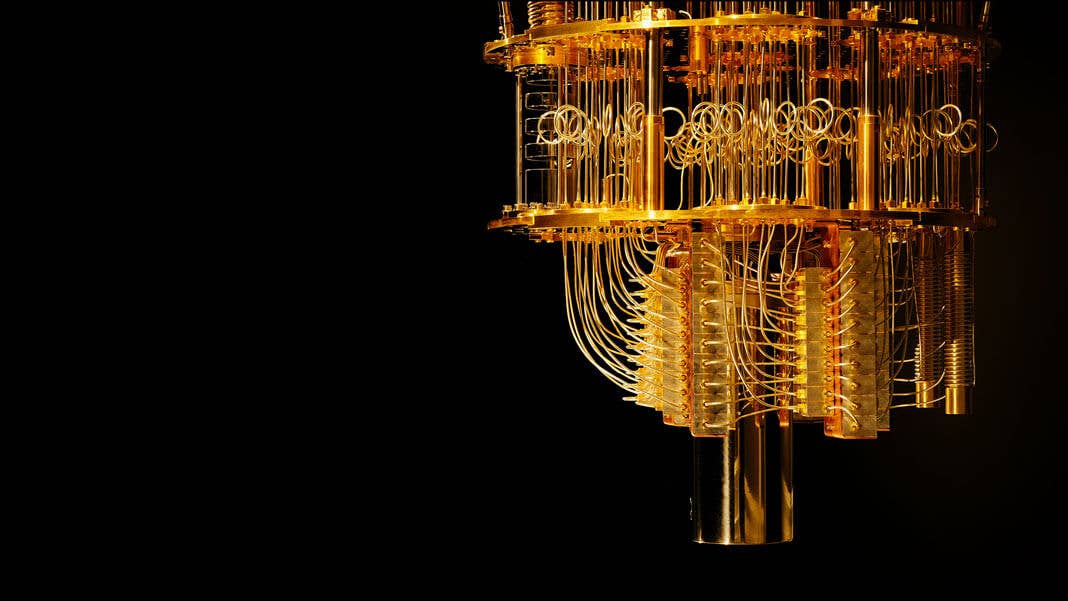

IBM Introduces ‘Quantum Volume’ to Track Progress Towards the Quantum Age

Quantum computing companies are racing to squeeze ever more qubits into their devices, but is this really a solid sign of progress? IBM is proposing a more holistic measure it calls “quantum volume” (QV) that it says gives a better indication of how close we are to practical devices.

Creating quantum computers that can solve real-world problems will require devices many times larger than those we have today, so it’s no surprise that announcements of processors with ever more qubits generate headlines. Google is currently leading the pack with its 72-qubit Bristlecone processor, but IBM and Intel aren’t far behind with 50 and 49 qubits, respectively.

Unsurprisingly, building a useful quantum computer is more complicated than simply adding more qubits, the building blocks used to encode information in a quantum computer (here’s an excellent refresher on how quantum computers work). How long a machine can maintain fragile quantum states, how often errors occur, and how the device is laid out can all dramatically affect how useful those extra qubits will be.

That’s why IBM is pushing a measure first introduced in 2017 called quantum volume (QV), which it says does a much better job of capturing how these factors combine to impact real-world performance. The rationale for QV is that the most important question for a quantum computer is how complex an algorithm it can implement.

That’s governed by two key characteristics: how many qubits it has and how many operations it can perform before errors or the collapse of quantum states distort the result. In the language of quantum circuits, the computational model favored by most industry leaders, these characteristics can be described as width and depth (more specifically, achievable circuit depth).

Width is important because more complex quantum algorithms able to exceed classical computing’s capabilities require lots of qubits to encode all the relevant information. A two-qubit system that can run indefinitely still won’t be able to solve many useful problems. Greater depth is important because it allows the circuit to carry out more steps with the qubits at its disposal, and thus run more complex algorithms than a shallower circuit could.

The IBM researchers have therefore decided that rather than just counting qubits, they are going to treat width and depth as equally important, and so QV essentially measures the largest square-shaped circuit—i.e. one with equal width and depth—that a quantum computer can successfully implement.
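As a rough illustration of the "largest square circuit" idea, the sketch below turns a set of pass/fail results into a single QV number. It assumes the convention implied by the 4, 8, 16 figures IBM reports, namely that QV is 2 raised to the width of the largest square circuit that runs successfully. The function name, the input format, and the rule of stopping at the first failure are all illustrative assumptions, not IBM's published protocol.

```python
def quantum_volume(square_pass):
    """Return a QV-style score from square-circuit test results.

    square_pass maps a width n to True if an n-wide, n-deep circuit
    ran successfully on the device (hypothetical input format).
    Assumes QV = 2 ** n for the largest passing n.
    """
    largest = 0
    for n in sorted(square_pass):
        if not square_pass[n]:
            break  # assumption: stop at the first size that fails
        largest = n
    return 2 ** largest


# A made-up device that passes up to 4x4 circuits but fails at 5x5.
results = {2: True, 3: True, 4: True, 5: False}
print(quantum_volume(results))  # 16
```

Note that adding qubits does nothing for the score here unless the device can also run correspondingly deeper circuits.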

What makes the approach so neat is that working out the depth requires you to consider a host of other metrics researchers already use to assess the performance of a quantum computer. It then boils all that information down to a single numerical value that can be compared across devices.

Those measures include coherence time—how long qubits can maintain their quantum states before interactions with the environment cause them to collapse. It also takes account of the rate at which the quantum gates used to carry out operations on qubits (analogous to logic gates in classical computers) introduce errors. The frequency of errors determines how many operations can be carried out before the results become junk.
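To see how error rates cap depth, here is a minimal sketch under a deliberately crude assumption that the article does not state: a circuit on n qubits with an effective per-step error rate eps can run for roughly 1 / (n * eps) layers before an error is expected. The function names and the sample error rates are hypothetical.

```python
def achievable_depth(n_qubits, error_rate):
    """Rough depth estimate: about one error is expected after
    1 / (n * eps) layers. This is an assumed rule of thumb."""
    return 1.0 / (n_qubits * error_rate)


def largest_square(error_rate, max_qubits):
    """Largest width n (up to max_qubits) whose estimated
    achievable depth is at least n, i.e. the largest square circuit."""
    best = 0
    for n in range(1, max_qubits + 1):
        if achievable_depth(n, error_rate) >= n:
            best = n
    return best


# With a 2% effective error rate, 20 available qubits only
# support a 7x7 square circuit under this model.
print(largest_square(0.02, 20))   # 7
# Cutting the error rate to 0.5% doubles that to 14x14.
print(largest_square(0.005, 20))  # 14
```

Under this toy model, quartering the error rate buys a square circuit twice as wide, whereas extra qubits beyond the error-limited size add nothing.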

It even encompasses the architecture of the device. When qubits aren’t directly connected to each other, getting them to interact requires extra gates, which can introduce error and therefore impact the depth. That means the greater the connectivity the better, with the ideal device being one where every qubit is connected to every other one.
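The cost of limited connectivity can be sketched with a simple count. The example below assumes a hypothetical device whose qubits sit in a line and can only interact with their immediate neighbors, and it uses the standard decomposition of a SWAP into three CNOT gates. The function names are illustrative only.

```python
def extra_swaps_linear(i, j):
    """SWAPs needed to bring qubits i and j next to each other
    on a linear chain with nearest-neighbor coupling only."""
    return max(abs(i - j) - 1, 0)


def extra_swaps_all_to_all(i, j):
    """With every qubit connected to every other, no routing is needed."""
    return 0


CNOTS_PER_SWAP = 3  # standard decomposition of one SWAP gate

# Interacting the two end qubits of a 5-qubit chain:
swaps = extra_swaps_linear(0, 4)
print(swaps)                   # 3
print(swaps * CNOTS_PER_SWAP)  # 9 extra two-qubit gates
print(extra_swaps_all_to_all(0, 4))  # 0
```

Each of those extra gates is another chance for an error, which is why sparser connectivity eats into achievable depth.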

To validate the approach, the company tested it on three of its machines: the 5-qubit system it made publicly available over the cloud in 2017, the 20-qubit system it released last year, and the Q System 1 it released earlier this year. The result was a doubling in QV every year, from 4 to 8 to 16, a pattern the company takes pains to stress mirrors Moore’s Law, which has governed the exponential improvement in classical computer performance over the last 50 years.

The relevance of that factoid will depend on whether other companies adopt the measure; progress in quantum computing isn’t an internal IBM process. But the company is actively calling for others to get on board, pointing out that condensing all this information into a single number should make it easier to draw comparisons across the highly varied devices being explored.

But while QV is undoubtedly elegant, it is important to remember the potential value of being the one to set the benchmark against which progress in quantum computing is measured. It’s not yet clear how other companies’ devices would fare on the measure, but there’s a natural incentive for IBM to promote metrics that favor its own technology.

That doesn’t mean you should automatically discount it, though. Veteran high-performance computing analyst Addison Snell described the metric as “compelling” to HPCwire, which also noted that rival quantum computing firm Rigetti has reportedly implemented QV as a measure. Ars Technica’s Chris Lee also thinks it could achieve widespread adoption.

Whether QV becomes the quantum equivalent of the LINPACK benchmark used to speed-test the world’s most powerful supercomputers remains to be seen. But hopefully it will start a conversation about how companies can compare notes and start to peel away some of the opacity surrounding the race for quantum supremacy.

Photo by Graham Carlow, IBM Research / CC BY ND-2.0
