Moving Beyond Mind-Controlled Limbs to Prosthetics That Can Actually ‘Feel’

Brain-machine interface enthusiasts often gush about “closing the loop,” and for good reason. At the implant level, it means engineering smarter probes that activate only when they detect faulty electrical signals in brain circuits. Elon Musk’s Neuralink, among other players, is actively pursuing these bi-directional implants that both measure and zap the brain.

But to scientists laboring to restore functionality to paralyzed patients or amputees, “closing the loop” has broader connotations. Building smart mind-controlled robotic limbs isn’t enough; the next frontier is restoring sensation in offline body parts. To truly meld biology with machine, the robotic appendage has to “feel one” with the body.

This month, two studies in Science Robotics describe complementary ways forward. In one, scientists from the University of Utah paired a state-of-the-art robotic arm, the DEKA LUKE, with electrical stimulation of the remaining nerves above the attachment point. Using artificial zaps to mimic the skin’s natural response patterns to touch, the team dramatically increased the patient’s ability to identify objects. Without much training, he could easily tell small objects from large ones and soft objects from hard ones while blindfolded and wearing headphones.

In another, a team based at the National University of Singapore took inspiration from our largest organ, the skin. Mimicking the neural architecture of biological skin, the engineered “electronic skin” not only senses temperature, pressure, and humidity, but continues to function even when scraped or otherwise damaged. Thanks to its artificial nerves, the flexible e-skin transmits electrical data roughly 1,000 times faster than human nerves do.

Together, the studies marry neuroscience and robotics. Representing the latest push towards closing the loop, they show that integrating biological sensibilities with robotic efficiency isn’t impossible (super-human touch, anyone?). But more immediately, and more importantly, they’re beacons of hope for patients who want to regain their sense of touch.

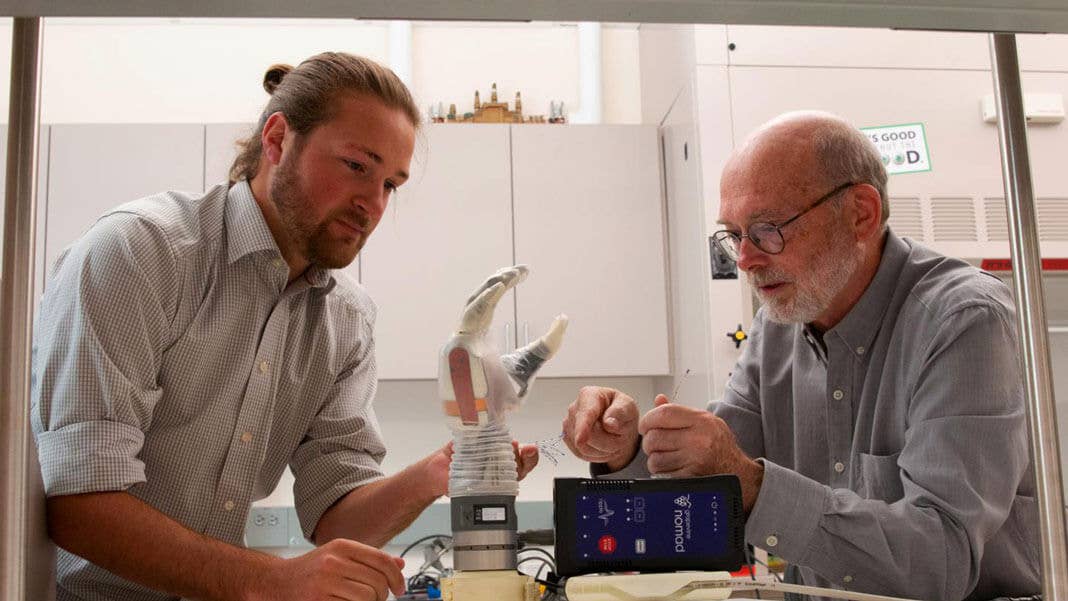

For one of the participants, a late middle-aged man with speckled white hair who lost his forearm 13 years ago, superpowers, cyborgs, and razzle-dazzle brain implants are the last things on his mind. After a barrage of emotionally neutral scientific tests, he grasped his wife’s hand and felt her warmth for the first time in over a decade. His face lit up in a blinding smile.

That’s what scientists are working towards.

Biomimetic Feedback

The human skin is a marvelous thing. Not only does it rapidly detect a multitude of sensations—pressure, temperature, itch, pain, humidity—its wiring “binds” disparate signals together into a sensory fingerprint that helps the brain identify what it’s feeling at any moment. Thanks to over 45 miles of nerves that connect the skin, muscles, and brain, you can pick up a half-full coffee cup, knowing that it’s hot and sloshing, while staring at your computer screen. Unfortunately, this complexity is also why restoring sensation is so hard.

The sensory electrode array implanted in the participant’s arm. Image Credit: George et al., Sci. Robot. 4, eaax2352 (2019).

However, complex neural patterns can also be a source of inspiration. Previous cyborg arms have often been paired with so-called “standard” sensory algorithms to induce a basic sense of touch in the missing limb. In these schemes, electrodes zap residual nerves with intensities proportional to the contact force: the harder the grip, the stronger the electrical feedback. Although seemingly logical, that’s not how our skin works. Every time the skin touches or leaves an object, its nerves shoot strong bursts of activity to the brain; while in full contact, the signal is much lower. Plotted over the course of a grasp, the resulting signal strength resembles a “U”: high at first contact, low during the hold, and high again at release.
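The difference between the two schemes can be sketched in a few lines of Python. This is only a toy illustration, not the Utah team's algorithm: the function names, the gain values, and the idea of modeling the "burst" as the absolute change in force between samples are all assumptions made for the example.

```python
def proportional_feedback(force, gain=1.0):
    """'Standard' scheme: stimulation intensity simply tracks contact force."""
    return [gain * f for f in force]


def biomimetic_feedback(force, sustain_gain=0.2, transient_gain=1.0):
    """Toy skin-like scheme (hypothetical gains).

    Responds strongly whenever the force changes (contact or release)
    and only weakly while the force is held steady.
    """
    intensities = []
    previous = 0.0
    for f in force:
        change = abs(f - previous)  # large at contact and at release
        intensities.append(sustain_gain * f + transient_gain * change)
        previous = f
    return intensities


if __name__ == "__main__":
    # A simple grasp in arbitrary units: no contact, hold, release.
    grasp = [0, 0, 5, 5, 5, 5, 0, 0]
    print("proportional:", proportional_feedback(grasp))
    print("biomimetic:  ", biomimetic_feedback(grasp))
```

With this made-up grasp, the proportional output is a flat plateau for as long as the object is held, while the biomimetic output spikes at contact, drops to a low level during the hold, and spikes again at release, which is the "U" shape described above.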

The LUKE hand. Image Credit: George et al., Sci. Robot. 4, eaax2352 (2019).

The team decided to directly compare standard algorithms with one that better mimics the skin’s natural response. They fitted a volunteer with a robotic LUKE arm and implanted an array of electrodes into his forearm—right above the amputation—to stimulate the remaining nerves. When the team activated different combinations of electrodes, the man reported sensations of vibration, pressure, tapping, or a sort of “tightening” in his missing hand. Some combinations of zaps also made him feel as if he were moving the robotic arm’s joints.

In all, the team was able to carefully map nearly 120 sensations to different locations on the phantom hand, which they then overlapped with contact sensors embedded in the LUKE arm. For example, when the patient touched something with his robotic index finger, the relevant electrodes sent signals that made him feel as if he were brushing something with his own missing index fingertip.

Standard sensory feedback already helped: even with simple electrical stimulation, the man could tell apart size (golf versus lacrosse ball) and texture (foam versus plastic) while blindfolded and wearing noise-canceling headphones. But when the team implemented two types of neuromimetic feedback (electrical zaps that resembled the skin’s natural response), his performance dramatically improved. He was able to identify objects much faster and more accurately with the neuromimetic patterns than with standard feedback. Outside the lab, he also found it easier to cook, eat, and dress himself. He could even text on his phone and complete routine chores that were previously too difficult, such as stuffing an insert into a pillowcase, hammering a nail, or eating hard-to-grab foods like eggs and grapes.

The study shows that the brain more readily accepts biologically inspired electrical patterns, which makes neuromimetic feedback a relatively easy but enormously powerful upgrade that integrates the robotic arm more seamlessly with its host. “The functional and emotional benefits…are likely to be further enhanced with long-term use, and efforts are underway to develop a portable take-home system,” the team said.

E-Skin Revolution: Asynchronous Coded Electronic Skin (ACES)

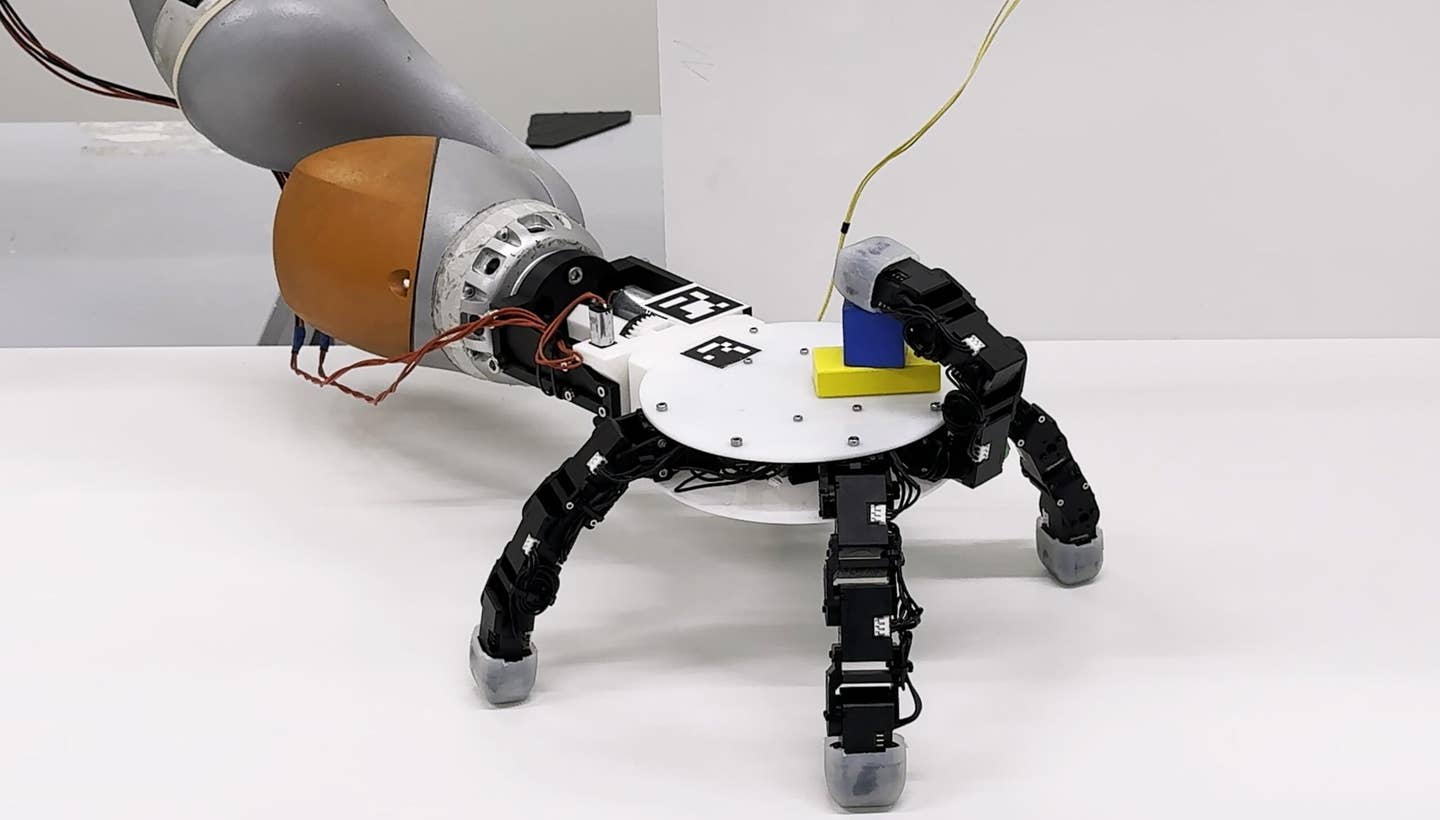

Flexible electronic skins also aren’t new, but the second team presented an upgrade in both speed and durability while retaining multiplexed sensory capabilities.

Starting from a combination of rubber, plastic, and silicon, the team embedded over 200 sensors onto the e-skin, each capable of discerning contact, pressure, temperature, and humidity. They then looked to the skin’s nervous system for inspiration. Our skin is embedded with a dense array of nerve endings that individually transmit different types of sensations, which are integrated inside hubs called ganglia. Compared to having every single nerve ending directly ping data to the brain, this “gather, process, and transmit” architecture rapidly speeds things up.

The team tapped into this biological architecture. Rather than pairing each sensor with a dedicated receiver, ACES sends all sensory data to a single receiver—an artificial ganglion. This setup lets the e-skin’s wiring work as a whole system, as opposed to individual electrodes. Every sensor transmits its data using a characteristic pulse, which allows it to be uniquely identified by the receiver.
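To make the "many sensors, one receiver" idea concrete, here is a minimal Python sketch. It is not the ACES implementation: the article does not describe the actual pulse signatures, so this example swaps in orthogonal Walsh codes (a standard code-division technique) as stand-in signatures, and it assumes all sensors transmit in lockstep, whereas ACES is asynchronous. The function names are hypothetical.

```python
def walsh_codes(n):
    """Return n mutually orthogonal +/-1 codes (n should be a power of two)."""
    codes = [[1]]
    while len(codes) < n:
        # Sylvester construction: each pass doubles the number and length of codes.
        codes = ([row + row for row in codes]
                 + [row + [-x for x in row] for row in codes])
    return codes


def transmit(codes, active):
    """Superimpose the signatures of all active sensors on one shared line."""
    length = len(codes[0])
    return [sum(codes[i][t] for i in active) for t in range(length)]


def decode(codes, line):
    """Single receiver: correlate the shared signal with every known signature.

    Because the codes are orthogonal, the correlation equals the code length
    for a sensor that fired and zero for one that did not.
    """
    length = len(line)
    return [i for i, code in enumerate(codes)
            if sum(c * s for c, s in zip(code, line)) == length]


if __name__ == "__main__":
    codes = walsh_codes(8)                 # eight sensors, eight signatures
    line = transmit(codes, [1, 4, 6])      # three sensors fire at once
    print("sensors detected:", decode(codes, line))
    # If sensor 1 is damaged and stays silent, the others still decode.
    print("after damage:   ", decode(codes, transmit(codes, [4, 6])))
```

The point of the design is that the receiver needs no per-sensor wire or polling schedule: every sensor is identified purely by its signature, so a silent (damaged) sensor simply drops out without disturbing the rest.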

The gains were immediate. First was speed. Normally, sensory data from multiple individual electrodes need to be periodically combined into a map of pressure points. Here, data from thousands of distributed sensors can independently go to a single receiver for further processing, massively increasing efficiency—the new e-skin’s transmission rate is roughly 1,000 times faster than that of human skin.

Second was redundancy. Because data from individual sensors are aggregated at a single receiver, the system still functions even when individual sensors are damaged, making it far more resilient than previous attempts. Finally, the setup could easily scale up. Although the team only tested the idea with 240 sensors, theoretically the system should work with up to 10,000.

The team is now exploring ways to combine their invention with other material layers to make it water-resistant and self-repairable. As you might’ve guessed, an immediate application is to give robots something similar to complex touch. A sensory upgrade not only lets robots more easily manipulate tools, doorknobs, and other objects in hectic real-world environments, it could also make it easier for machines to work collaboratively with humans in the future (hey Wall-E, care to pass the salt?).

Dexterous robots aside, the team also envisions engineering better prosthetics. When coated onto cyborg limbs, for example, ACES may give them a better sense of touch that begins to rival the human skin—or perhaps even exceed it.

Regardless, efforts that adapt the functionality of the human nervous system to machines are finally paying off, and more are sure to come. Neuromimetic ideas may very well be the link that finally closes the loop.

Image Credit: Dan Hixson/University of Utah College of Engineering.

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
