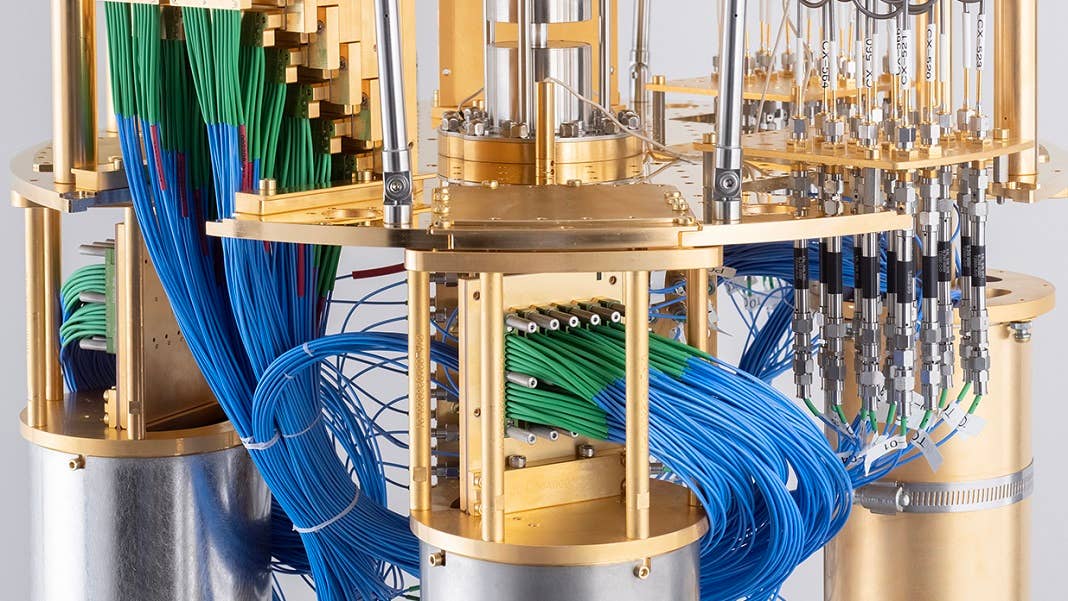

An IBM Quantum Computer Beat a Supercomputer in a Benchmark Test

Quantum computers may soon tackle problems that stump today’s powerful supercomputers—even when riddled with errors.

Computation and accuracy go hand in hand. But a new collaboration between IBM and UC Berkeley showed that perfection isn’t necessarily required for solving challenging problems, from understanding the behavior of magnetic materials to modeling how neural networks behave or how information spreads across social networks.

The teams pitted IBM’s 127-qubit Eagle chip against supercomputers at Lawrence Berkeley National Lab and Purdue University on increasingly complex tasks. With easier calculations, Eagle matched the supercomputers' results every time, suggesting that even with noise, the quantum computer could generate accurate responses. But where it shone was in its ability to tolerate scale, returning results that are, in theory, far more accurate than what’s possible today with state-of-the-art silicon computer chips.

At the heart is a post-processing technique that decreases noise. Like a viewer taking in a large painting, the method ignores individual brush strokes. Instead, it focuses on small portions of the painting and captures the general “gist” of the artwork.

The study, published in Nature, isn’t chasing quantum advantage, the theory that quantum computers can solve problems faster than conventional computers. Rather, it shows that today’s quantum computers, even when imperfect, may become part of scientific research—and perhaps our lives—sooner than expected. In other words, we’ve now entered the realm of quantum utility.

“The crux of the work is that we can now use all 127 of Eagle’s qubits to run a pretty sizable and deep circuit, and the numbers come out correct,” said Dr. Kristan Temme, principal research staff member and manager for the Theory of Quantum Algorithms group at IBM Quantum.

The Error Terror

The Achilles heel of quantum computers is their errors.

Like classical silicon-based computer chips, the kind running in your phone or laptop, quantum computers use basic units of data called bits to calculate. The difference is that in classical computers, a bit represents either 1 or 0. Thanks to quantum quirks, the quantum equivalent of bits, qubits, exist in a state of flux, with a chance of landing in either position.

This weirdness, along with other attributes, makes it possible for quantum computers to simultaneously compute multiple complex calculations—essentially, everything, everywhere, all at once (wink)—making them, in theory, far more efficient than today’s silicon chips.

Proving the idea is harder.

“The race to show that these processors can outperform their classical counterparts is a difficult one,” said Drs. Göran Wendin and Jonas Bylander at the Chalmers University of Technology in Sweden, who were not involved in the study.

The main trip-up? Errors.

Qubits are finicky things, as are the ways in which they interact with each other. Even minor changes in their state or environment can throw a calculation off track. “Developing the full potential of quantum computers requires devices that can correct their own errors,” said Wendin and Bylander.

The fairy tale ending is a fault-tolerant quantum computer. Such a machine would have thousands of high-quality qubits, similar to the “perfect” ones used today in simulated models, all controlled by a self-correcting system.

That fantasy may be decades off. In the meantime, scientists have settled on an interim solution: error mitigation. The idea is simple: if we can’t eliminate noise, why not accept it? The approach measures and tolerates errors, then compensates for quantum hiccups using post-processing software.

It's a tough problem. One previous method, dubbed “noisy intermediate-scale quantum computation,” can track errors as they build up and correct them before they corrupt the computational task at hand. But the idea only worked for quantum computers running a few qubits—a solution that doesn’t work for solving useful problems, because they’ll likely require thousands of qubits.

IBM Quantum had another idea. Back in 2017, they published a guiding theory: if we can understand the source of noise in the quantum computing system, then we can eliminate its effects.

The overall idea is a bit unorthodox. Rather than limiting noise, the team deliberately amplified noise in a quantum computer using a technique similar to the one that controls qubits. This makes it possible to measure results from multiple experiments injected with varying levels of noise, and to develop ways to counteract its negative effects.

Back to Zero

In this study, the team generated a model of how noise behaves in the system. With this “noise atlas,” they could better manipulate, amplify, and eliminate the unwanted signals in a predictable way.

Using post-processing software called Zero Noise Extrapolation (ZNE), they extrapolated the measured “noise atlas” to a system without noise—like digitally erasing background hums from a recorded soundtrack.
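The extrapolation step can be illustrated with a toy calculation. The sketch below is not the study's software or noise model: it assumes a made-up noise model in which a measured value decays exponentially as noise is amplified, and every name in it (`noisy_expectation`, `zero_noise_extrapolate`, the `decay` parameter) is hypothetical. It only shows the general shape of the idea: measure at several amplified noise levels, fit a curve, and read off the value at zero noise.

```python
import math

def noisy_expectation(scale, ideal=1.0, decay=0.2):
    """Toy stand-in for a hardware measurement (an assumption, not real data):
    the ideal value shrinks exponentially as the noise is scaled up."""
    return ideal * math.exp(-decay * scale)

def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def zero_noise_extrapolate(scales, values):
    """Fit log(value) against the noise scale, then evaluate the fit at scale 0.
    Assumes every measured value is positive."""
    _, intercept = linear_fit(scales, [math.log(v) for v in values])
    return math.exp(intercept)

# Scale 1.0 is the hardware's natural noise level; larger values are amplified noise.
scales = [1.0, 1.5, 2.0, 3.0]
measured = [noisy_expectation(s) for s in scales]
mitigated = zero_noise_extrapolate(scales, measured)

print("measured at natural noise:", round(measured[0], 4))
print("extrapolated to zero noise:", round(mitigated, 4))
```

Because the toy data follow the assumed exponential exactly, the fit recovers the ideal value. Real measurements carry statistical scatter, so the extrapolated answer is an estimate whose quality depends on how well the chosen curve matches the actual noise.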

As a proof of concept, the team turned to a classic mathematical model used to capture complex systems in physics, neuroscience, and social dynamics. Called the 2D Ising model, it was originally developed nearly a century ago to study magnetic materials.

Magnetic objects are a bit like qubits. Imagine a compass. Its needle has a propensity to point north, but it can land in any position depending on where you are, and that determines its ultimate state.

The Ising model mimics a lattice of compasses, in which each one’s spin influences its neighbor’s. Each spin has two states: up or down. Although originally used to describe magnetic properties, the Ising model is now widely used for simulating the behavior of complex systems, such as biological neural networks and social dynamics. It also helps with cleaning up noise in image analysis and bolsters computer vision.
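To make the lattice picture concrete, here is a minimal sketch of the textbook classical Ising energy on a small 2D grid. It is an illustration only, not the quantum circuit run in the study; the function name `ising_energy`, the `coupling` parameter, and the choice of open (non-wrapping) edges are assumptions made for this example.

```python
def ising_energy(spins, coupling=1.0):
    """Energy of a 2D grid of +1 (up) / -1 (down) spins.
    Each pair of horizontal or vertical neighbors contributes
    -coupling * s_i * s_j, so aligned neighbors lower the energy.
    Edges are open: spins on the border have fewer neighbors."""
    rows, cols = len(spins), len(spins[0])
    energy = 0.0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:  # neighbor to the right
                energy -= coupling * spins[r][c] * spins[r][c + 1]
            if r + 1 < rows:  # neighbor below
                energy -= coupling * spins[r][c] * spins[r + 1][c]
    return energy

aligned = [[1, 1], [1, 1]]         # every compass points the same way
checkerboard = [[1, -1], [-1, 1]]  # every neighbor pair disagrees

print(ising_energy(aligned))       # -4.0
print(ising_energy(checkerboard))  # 4.0
```

A 2 by 2 grid has four neighbor pairs, so the fully aligned grid reaches the lowest possible energy and the checkerboard the highest.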

The model is perfect for challenging quantum computers because of its scale. As the number of “compasses” increases, the system’s complexity rises exponentially and quickly outgrows the capability of today’s supercomputers. This makes it a perfect test for pitting quantum and classical computers mano a mano.
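A rough back-of-the-envelope calculation shows why the scale bites. The sketch below assumes a brute-force simulation that stores one complex number (16 bytes) for each of the 2^n possible configurations of n qubits; the function name `statevector_bytes` is hypothetical. Classical methods in practice rely on approximations rather than this brute-force approach, so treat the numbers as an upper-bound illustration.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store every amplitude of an n-qubit state,
    assuming one 16-byte complex number per configuration."""
    return (2 ** n_qubits) * bytes_per_amplitude

for n in (10, 30, 68, 127):
    gib = statevector_bytes(n) / 2 ** 30
    print(f"{n:>3} qubits: {gib:.3e} GiB")
```

Under these assumptions, 30 qubits already need 16 GiB, and each added qubit doubles the requirement.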

An initial test first focused on a small group of spins well within the supercomputers’ capabilities. The results were on the mark for both, providing a benchmark of the Eagle quantum processor’s performance with the error mitigation software. That is, even with errors, the quantum processor provided accurate results similar to those from state-of-the-art supercomputers.

For the next tests, the team stepped up the complexity of the calculations, eventually employing all of Eagle’s 127 qubits and over 60 different steps. At first, the supercomputers, armed with tricks to calculate exact answers, kept up with the quantum computer, pumping out surprisingly similar results.

“The level of agreement between the quantum and classical computations on such large problems was pretty surprising to me personally,” said study author Dr. Andrew Eddins at IBM Quantum.

As the complexity increased, however, classical approximation methods began to falter. The breaking point came when the team dialed the problem up to 68 qubits. From there, Eagle was able to scale up to its entire 127 qubits, generating answers beyond the capability of the supercomputers.

It’s impossible to certify that the results are completely accurate. However, because Eagle’s performance matched results from the supercomputers—up to the point the latter could no longer hold up—the previous trials suggest the new answers are likely correct.

What’s Next?

The study is still a proof of concept.

Although it shows that the post-processing software, ZNE, can mitigate errors in a 127-qubit system, it’s still unclear if the solution can scale up. With IBM’s 1,121-qubit Condor chip set to release this year—and “utility-scale processors” with up to 4,158 qubits in the pipeline—the error-mitigating strategy may need further testing.

Overall, the method’s strength is in its scale, not its speed. The quantum computer was only about two to three times faster than the classical computers. The strategy is also pragmatic and short-term: it minimizes errors rather than correcting them altogether, as an interim way to begin using these strange but powerful machines.

These techniques “will drive the development of device technology, control systems, and software by providing applications that could offer useful quantum advantage beyond quantum-computing research—and pave the way for truly fault-tolerant quantum computing,” said Wendin and Bylander. Although still in their early days, they “herald further opportunities for quantum processors to emulate physical systems that are far beyond the reach of conventional computers.”

Image Credit: IBM

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.

Related Articles

How Scientists Are Growing Computers From Human Brain Cells—and Why They Want to Keep Doing It

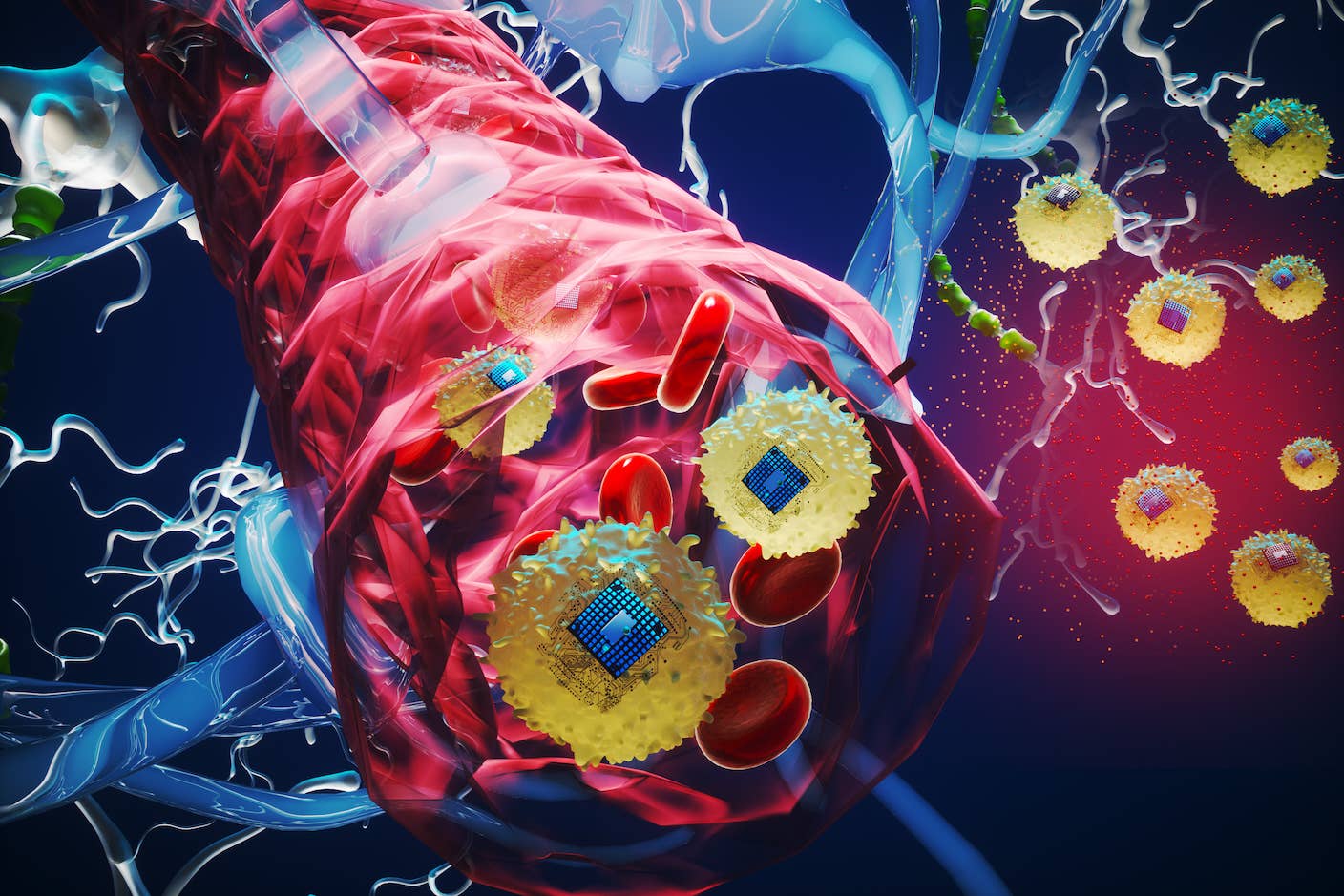

These Brain Implants Are Smaller Than Cells and Can Be Injected Into Veins

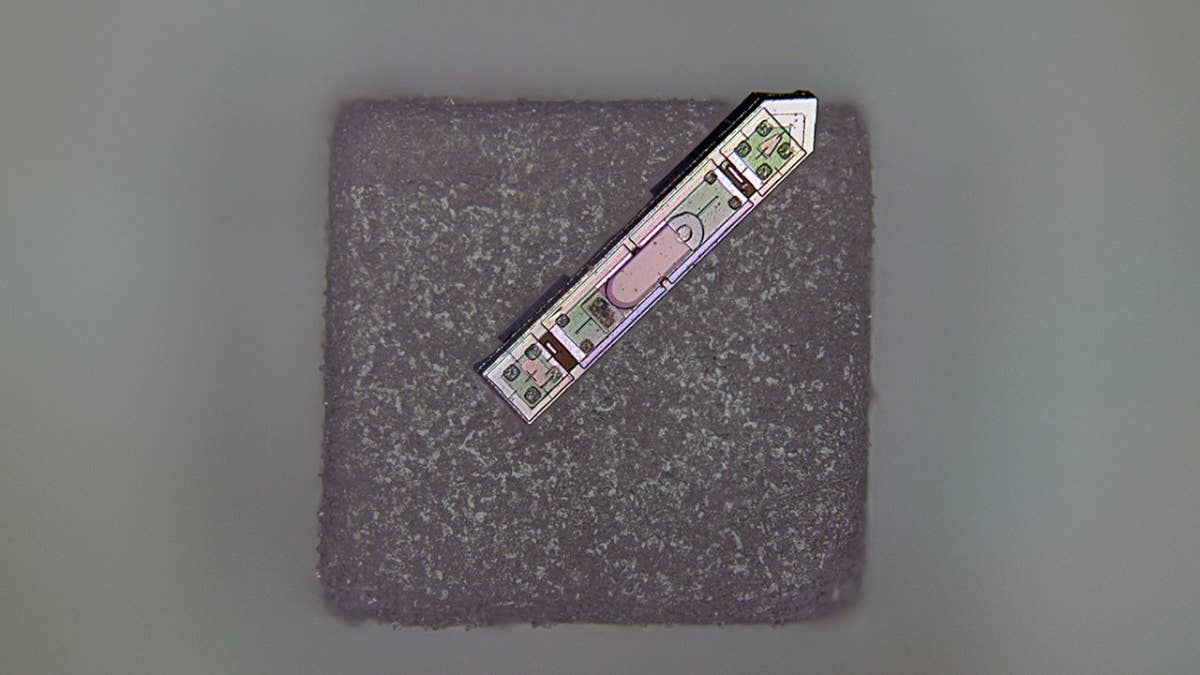

This Wireless Brain Implant Is Smaller Than a Grain of Salt

What we’re reading