DeepMind’s ChatGPT-Like Brain for Robots Lets Them Learn From the Internet

Share

Ever since ChatGPT exploded onto the tech scene in November of last year, it’s been helping people write all kinds of material, generate code, and find information. It and other large language models (LLMs) have facilitated tasks from fielding customer service calls to taking fast food orders. Given how useful LLMs have been for humans in the short time they’ve been around, how might a ChatGPT for robots impact their ability to learn and do new things? Researchers at Google DeepMind decided to find out and published their findings in a blog post and paper released last week.

They call their system RT-2. It’s short for robotics transformer 2, and it’s the successor to robotics transformer 1, which the company released at the end of last year. RT-1 was based on a small language and vision program and specifically trained to do many tasks. The software was used in Alphabet X’s Everyday Robots, enabling them to do over 700 different tasks with a 97 percent success rate. But when prompted to do new tasks they weren’t trained for, robots using RT-1 were only successful 32 percent of the time.

RT-2 almost doubles this rate, successfully performing new tasks 62 percent of the time it’s asked to. The researchers call RT-2 a vision-language-action (VLA) model. It uses text and images it sees online to learn new skills. That’s not as simple as it sounds; it requires the software to first “understand” a concept, then apply that understanding to a command or set of instructions, then carry out actions that satisfy those instructions.

One example the paper’s authors give is disposing of trash. In previous models, the robot’s software would have to first be trained to identify trash. For example, if there’s a peeled banana on a table with the peel next to it, the bot would be shown that the peel is trash while the banana isn’t. It would then be taught how to pick up the peel, move it to a trash can, and deposit it there.

RT-2 works a little differently, though. Since the model has trained on loads of information and data from the internet, it has a general understanding of what trash is, and though it’s not trained to throw trash away, it can piece together the steps to complete this task.

The LLMs the researchers used to train RT-2 are PaLI-X (a vision and language model with 55 billion parameters), and PaLM-E (what Google calls an embodied multimodal language model, developed specifically for robots, with 12 billion parameters). “Parameter” refers to an attribute a machine learning model defines based on its training data. In the case of LLMs, they model the relationships between words in a sentence and weigh how likely it is that a given word will be preceded or followed by another word.

Through finding the relationships and patterns between words in a giant dataset, the models learn from their own inferences. They can eventually figure out how different concepts relate to each other and discern context. In RT-2’s case, it translates that knowledge into generalized instructions for robotic actions.

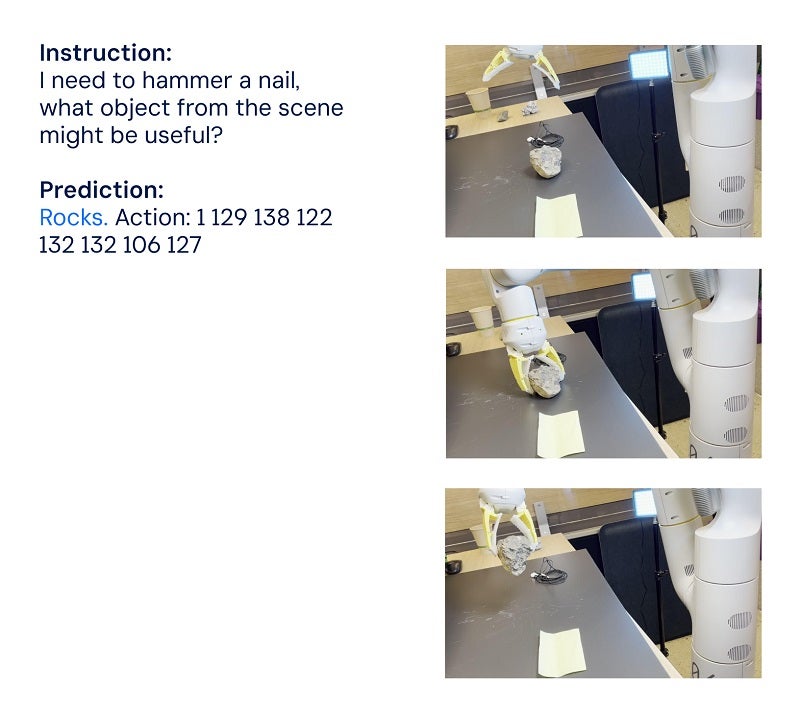

Those actions are represented for the robot as tokens, which are usually used to represent natural language text in the form of word fragments. In this case, the tokens are parts of an action, and the software strings multiple tokens together to perform an action. This structure also enables the software to perform chain-of-thought reasoning, meaning it can respond to questions or prompts that require some degree of reasoning.

Be Part of the Future

Sign up to receive top stories about groundbreaking technologies and visionary thinkers from SingularityHub.

Examples the team gives include choosing an object to use as a hammer when there’s no hammer available (the robot chooses a rock) and picking the best drink for a tired person (the robot chooses an energy drink).

Image Credit: Google DeepMind

“RT-2 shows improved generalization capabilities and semantic and visual understanding beyond the robotic data it was exposed to,” the researchers wrote in a Google blog post. “This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions.”

The dream of general-purpose robots that can help humans with whatever may come up—whether in a home, a commercial setting, or an industrial setting—won’t be achievable until robots can learn on the go. What seems like the most basic instinct to us is, for robots, a complex combination of understanding context, being able to reason through it, and taking actions to solve problems that weren’t anticipated to pop up. Programming them to react appropriately to a variety of unplanned scenarios is impossible, so they need to be able to generalize and learn from experience, just like humans do.

RT-2 is a step in this direction. The researchers do acknowledge, though, that while RT-2 can generalize semantic and visual concepts, it’s not yet able to learn new actions on its own. Rather, it applies the actions it already knows to new scenarios. Perhaps RT-3 or 4 will be able to take these skills to the next level. In the meantime, as the team concludes in their blog post, “While there is still a tremendous amount of work to be done to enable helpful robots in human-centered environments, RT-2 shows us an exciting future for robotics just within grasp.”

Image Credit: Google DeepMind

Vanessa has been writing about science and technology for eight years and was senior editor at SingularityHub. She's interested in biotechnology and genetic engineering, the nitty-gritty of the renewable energy transition, the roles technology and science play in geopolitics and international development, and countless other topics.

Related Articles

Sparks of Genius to Flashes of Idiocy: How to Solve AI’s ‘Jagged Intelligence’ Problem

Researchers Break Open AI’s Black Box—and Use What They Find Inside to Control It

What the Rise of AI Scientists May Mean for Human Research

What we’re reading