Google DeepMind’s AI Rat Brains Could Make Robots Scurry Like the Real Thing

Rats are incredibly nimble creatures. They can climb up curtains, jump down tall ledges, and scurry across complex terrain—say, your basement stacked with odd-shaped stuff—at mind-blowing speed.

Robots, in contrast, are anything but nimble. Despite recent advances in AI to guide their movements, robots remain stiff and clumsy, especially when navigating new environments.

To make robots more agile, why not control them with algorithms distilled from biological brains? Our movements are rooted in the physical world and based on experience—two components that let us easily explore different surroundings.

There’s one major obstacle. Despite decades of research, neuroscientists haven’t yet pinpointed how brain circuits control and coordinate movement. Most studies have correlated neural activity with measurable motor responses—say, a twitch of a hand or the speed of lifting a leg. In other words, we know brain activation patterns that can describe a movement. But which neural circuits cause those movements in the first place?

We may find the answer by trying to recreate them in digital form. As the famous physicist Richard Feynman once said, “What I cannot create, I do not understand.”

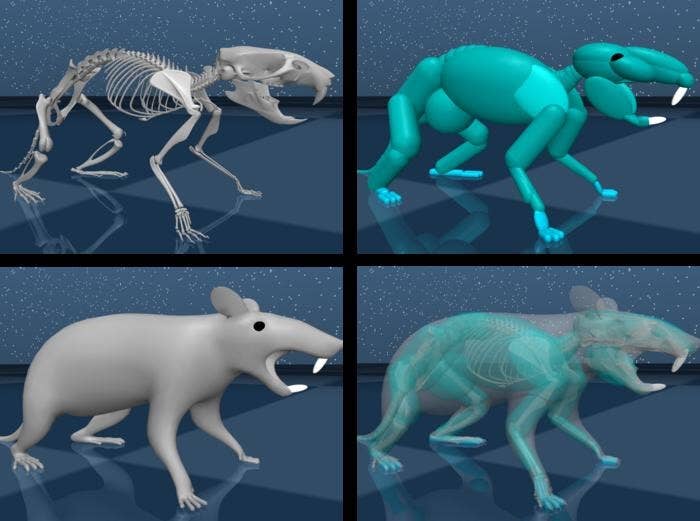

This month, Google DeepMind and Harvard University built a realistic virtual rat to home in on the neural circuits that control complex movement. The rat’s digital brain, composed of artificial neural networks, was trained on hundreds of hours of recordings from actual rats running around in an open arena.

Comparing activation patterns of the artificial brain to signals from living, breathing animals, the team found the digital brain could predict the neural activation patterns of real rats and produce the same behavior—for example, running or rearing up on hind legs.

The collaboration was “fantastic,” said study author Dr. Bence Ölveczky at Harvard in a press release. “DeepMind had developed a pipeline to train biomechanical agents to move around complex environments. We simply didn’t have the resources to run simulations like those, to train these networks.”

The virtual rat’s brain recapitulated two regions especially important for movement. Tweaking connections in those areas changed motor responses across a variety of behaviors, suggesting these neural signals are involved in walking, running, climbing, and other movements.

“Virtual animals trained to behave like their real counterparts could provide a platform for virtual neuroscience…that would otherwise be difficult or impossible to experimentally deduce,” the team wrote in their article.

A Dense Dataset

Artificial intelligence “lives” in the digital world. To power robots, it needs to understand the physical world.

One way to teach it about the world is to record neural signals from rodents and use the recordings to engineer algorithms that can control biomechanically realistic models replicating natural behaviors. The goal is to distill the brain’s computations into algorithms that can pilot robots and also give neuroscientists a deeper understanding of the brain’s workings.

So far, the strategy has been successfully used to decipher the brain’s computations for vision, smell, navigation, and recognizing faces, the authors explained in their paper. However, modeling movement has been a challenge. Individuals move differently, and noise from brain recordings can easily mess up the resulting AI’s precision.

This study tackled the challenges head on with a cornucopia of data.

The team first placed multiple rats into a six-camera arena to capture their movement—running around, rearing up, or spinning in circles. Rats can be lazy bums. To encourage them to move, the team dangled Cheerios across the arena.

As the rats explored the arena, the team recorded 607 hours of video and also neural activity with a 128-channel array of electrodes implanted in their brains.

They used this data to train an artificial neural network—a virtual rat’s “brain”—to control body movement. To do this, they first tracked how 23 joints moved in the videos and transferred them to a simulation of the rats’ skeletal movements. Our joints only bend in certain ways, and this step filters out what’s physically impossible (say, bending legs in the opposite direction).
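The idea of filtering out physically impossible poses can be illustrated with a minimal Python sketch. It is an illustration only, not the team's pipeline: the joint names, the angle limits, and the `clamp_pose` helper are all hypothetical, and a real skeletal model would be fitted to the tracked points rather than simply clamped.

```python
# Hypothetical joint limits in degrees (illustrative values, not from the study).
JOINT_LIMITS_DEG = {
    "knee": (0.0, 150.0),
    "elbow": (0.0, 145.0),
}


def clamp_pose(pose):
    """Return a copy of the pose with every joint angle forced into its allowed range."""
    clamped = {}
    for joint, angle in pose.items():
        low, high = JOINT_LIMITS_DEG[joint]
        # An angle outside [low, high] is treated as tracking noise and pulled back.
        clamped[joint] = min(max(angle, low), high)
    return clamped


# A noisy tracked pose in which the knee appears to bend backward.
noisy_pose = {"knee": -20.0, "elbow": 90.0}
plausible_pose = clamp_pose(noisy_pose)
```

Here the backward-bending knee is pulled back to the nearest allowed angle, while the elbow, which is already within range, is left unchanged.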

The core of the virtual rat’s brain is a type of AI algorithm called an inverse dynamics model. Basically, it knows where “body” positions are in space at any given time and, from there, predicts the movements needed to reach a goal, such as grabbing a coffee cup without dropping it.
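To make the inverse dynamics idea concrete, here is a toy Python sketch. It assumes a single point mass moving along a line, with a hand-derived formula standing in for the learned neural network described in the study; the `State` class, the function names, and the mass and time-step values are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class State:
    position: float  # metres
    velocity: float  # metres per second


def inverse_dynamics(current, target_position, mass=0.3, dt=0.01):
    """Force that moves a point mass to target_position in one time step."""
    # The simulator below updates velocity first, then position:
    #   v_next = v + (F / m) * dt,   x_next = x + v_next * dt
    # Solving those two equations for F gives the expression returned here.
    required_velocity = (target_position - current.position) / dt
    return mass * (required_velocity - current.velocity) / dt


def step(current, force, mass=0.3, dt=0.01):
    """Advance the point mass by one time step under the given force."""
    velocity = current.velocity + (force / mass) * dt
    return State(current.position + velocity * dt, velocity)


def track(reference, start, mass=0.3, dt=0.01):
    """Follow a reference trajectory by asking for the needed force at each step."""
    state = start
    visited = []
    for target in reference:
        force = inverse_dynamics(state, target, mass, dt)
        state = step(state, force, mass, dt)
        visited.append(state.position)
    return visited


reference = [0.01, 0.03, 0.06]  # desired positions, one per time step
visited = track(reference, State(0.0, 0.0))
```

The forward model asks "given this force, where do I end up?", while the inverse model asks "given where I want to be, what force do I need?". In the virtual rat, that second question is answered by a trained network controlling a full simulated body rather than by a closed-form formula.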

Through trial and error, the AI eventually came close to matching the movements of its biological counterparts. Surprisingly, the virtual rat could also easily generalize motor skills to unfamiliar places and scenarios, in part by learning the forces needed to navigate the new environments.

These similarities allowed the team to compare real rats to their digital doppelgangers as they performed the same behavior.

In one test, the team analyzed activity in two brain regions known to guide motor skills. Compared to an older computational model used to decode brain networks, the AI could better simulate neural signals in the virtual rat across multiple physical tasks.

Because of this, the virtual rat offers a way to study movement digitally.

One long-standing question is how the brain and nerves command muscle movement depending on the task. Grabbing a cup of coffee in the morning, for example, requires a steady hand without any jerking action but enough strength to keep hold of the cup.

The team tweaked the “neural connections” in the virtual rodent to see how changes in brain networks alter the final behavior—getting that cup of coffee. They found one network measure that could identify a behavior at any given time and guide it through.

Unlike results from lab studies, these insights “can only be directly accessed through simulation,” wrote the team.

The virtual rat bridges AI and neuroscience. The AI models here recreate the physicality and neural signals of living creatures, making them invaluable for probing brain functions. In this study, one aspect of the virtual rat’s motor skills relied on two brain regions, pinpointing them as potentially key to guiding complex, adaptable movement.

A similar strategy could provide more insight into the computations underlying vision, sensation, or perhaps even higher cognitive functions such as reasoning. However, the virtual rat brain isn’t a complete replication of a real one; it captures only snapshots of part of the brain. Still, it lets neuroscientists “zoom in” on their favorite brain region and test hypotheses quickly and easily compared to traditional lab experiments, which often take weeks to months.

On the robotics side, the method adds a physicality to AI.

“We’ve learned a huge amount from the challenge of building embodied agents: AI systems that not only have to think intelligently, but also have to translate that thinking into physical action in a complex environment,” said study author Dr. Matthew Botvinick at DeepMind in a press release. “It seemed plausible that taking this same approach in a neuroscience context might be useful for providing insights in both behavior and brain function.”

The team is next planning to test the virtual rat with more complex tasks, alongside its biological counterparts, to further peek inside the inner workings of the digital brain.

“From our experiments, we have a lot of ideas about how such tasks are solved,” said Ölveczky to The Harvard Gazette. “We want to start using the virtual rats to test these ideas and help advance our understanding of how real brains generate complex behavior.”

Image Credit: Google DeepMind

Dr. Shelly Xuelai Fan is a neuroscientist-turned-science-writer. She's fascinated with research about the brain, AI, longevity, biotech, and especially their intersection. As a digital nomad, she enjoys exploring new cultures, local foods, and the great outdoors.
