What's New Today

Scientists Send Secure Quantum Keys Over 62 Miles of Fiber—Without Trusted Devices

Edd Gent

Latest Stories

This Week’s Awesome Tech Stories From Around the Web (Through February 7)

SingularityHub Staff

Artificial Intelligence

Scientists Want to Give ChatGPT an Inner Monologue to Improve Its ‘Thinking’

Ricky J. Sethi

Robotics

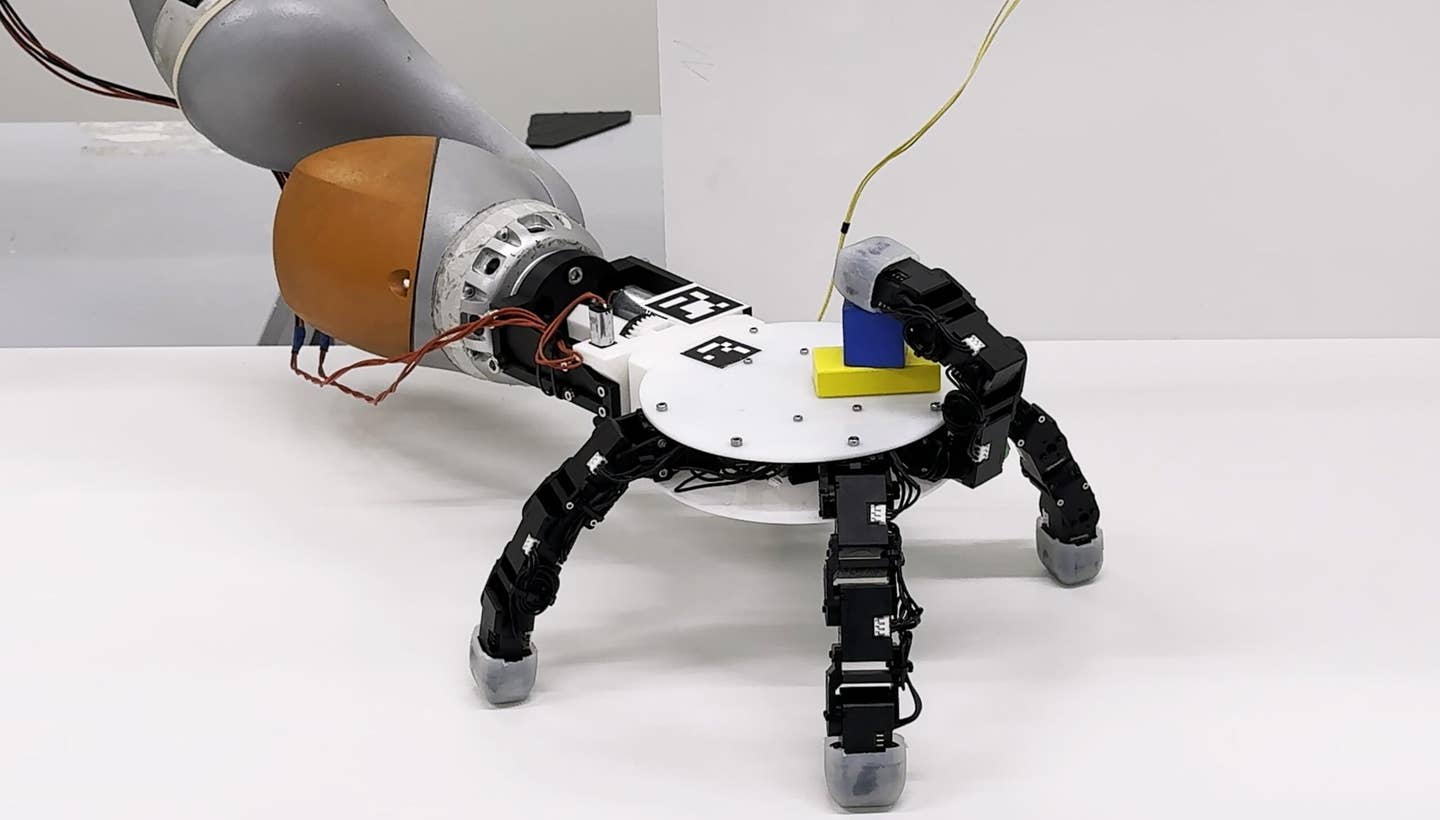

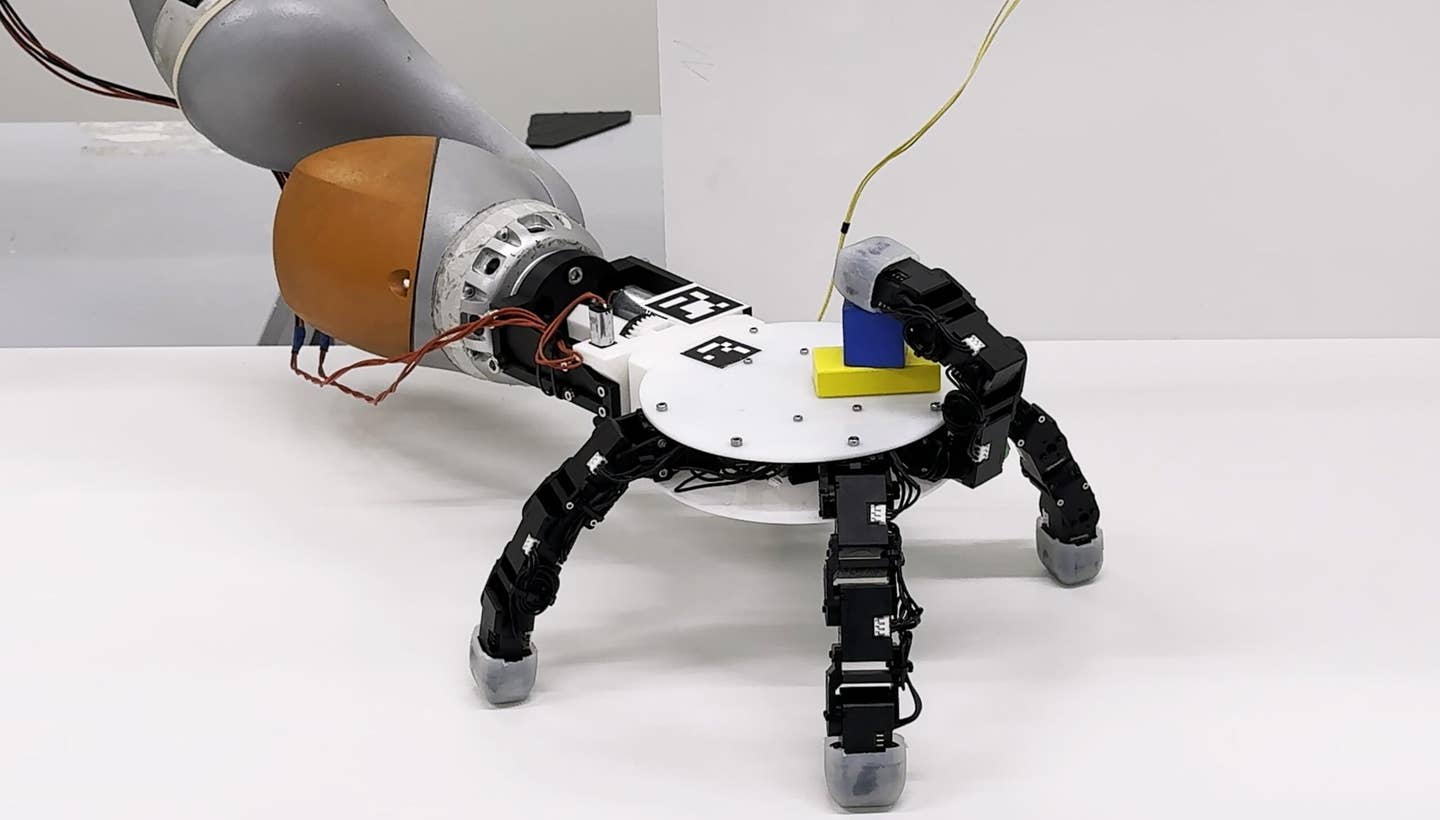

This Robotic Hand Detaches and Skitters About Like Thing From ‘The Addams Family’

Shelly Fan

Artificial Intelligence

Humanity’s Last Exam Stumps Top AI Models—and That’s a Good Thing

Shelly Fan

Robotics

Waymo Closes in on Uber and Lyft Prices, as More Riders Say They Trust Robotaxis

Edd Gent

This Week’s Awesome Tech Stories From Around the Web (Through January 31)

SingularityHub Staff

What we’re reading

DON'T MISS A TREND

Sign up to receive top stories about groundbreaking technologies and visionary thinkers from SingularityHub.

100% Free. No Spam. Unsubscribe any time.

Join 50,000+ researchers, entrepreneurs, science enthusiasts, technophiles, and the insatiably curious

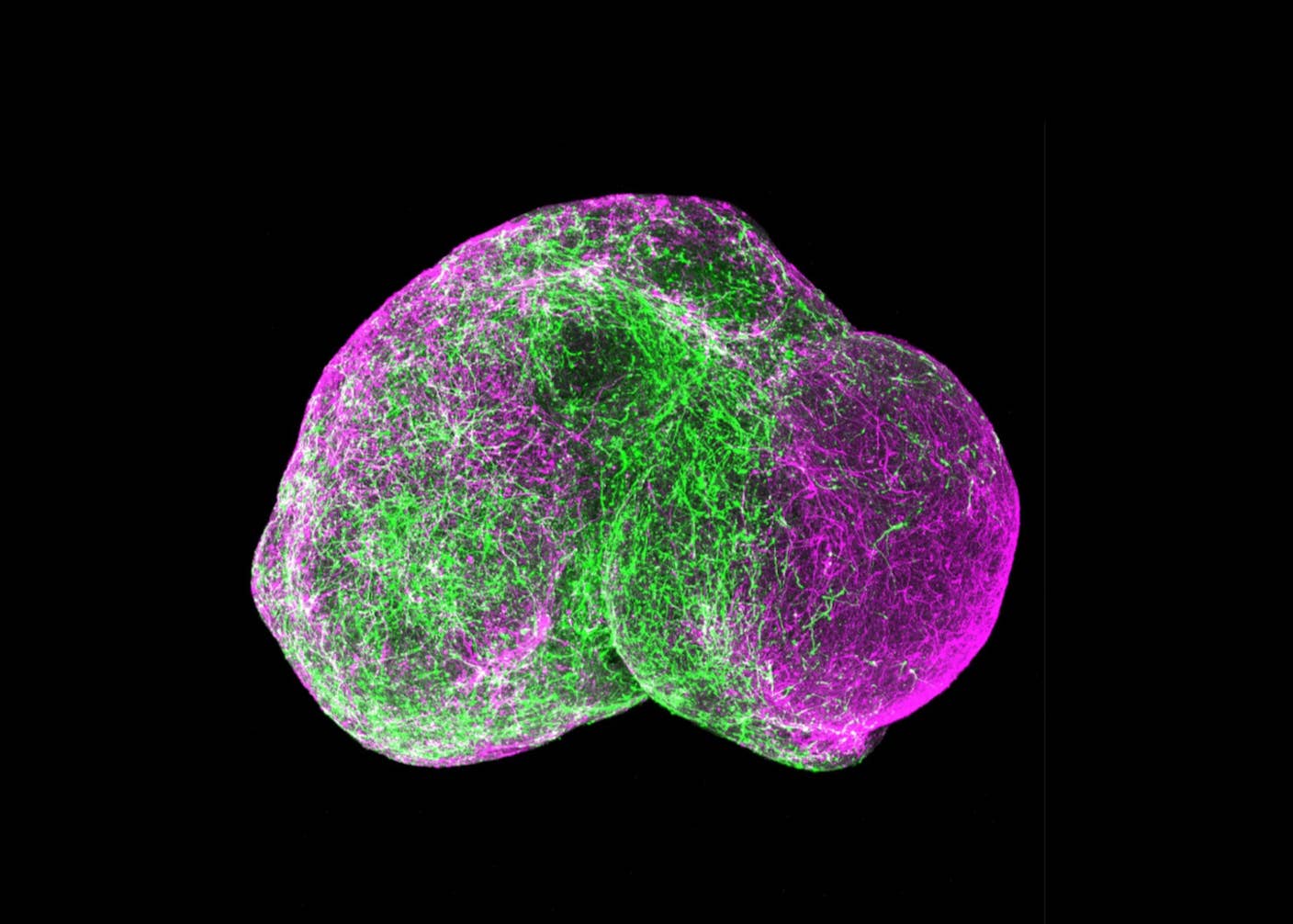

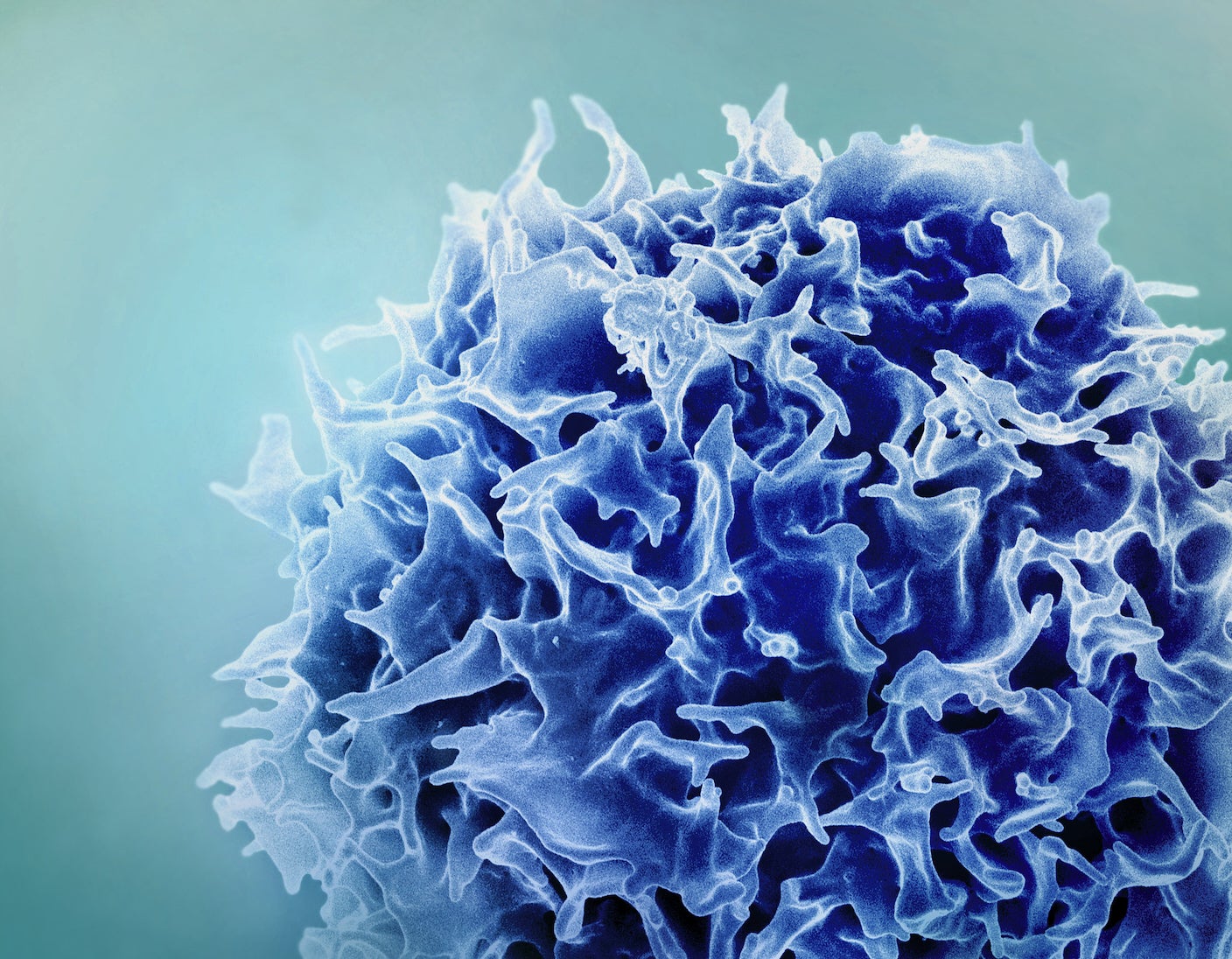

Featured

Biotechnology

Single Injection Transforms the Immune System Into a Cancer-Killing Machine

Shelly Fan

Editor's Picks

AI Now Beats the Average Human in Tests of Creativity

Edd Gent

Meta Will Buy Startup’s Nuclear Fuel in Unusual Deal to Power AI Data Centers

Edd Gent

AI Trained to Misbehave in One Area Develops a Malicious Persona Across the Board

Shelly Fan

How I Used AI to Transform Myself From a Female Dance Artist to an All-Male Post-Punk Band

Priscilla Angelique-Page