MIT’s ‘visual microphone’ is the kind of tool you’d expect Q to develop for James Bond, or nefarious government snoops to use when listening in on Jason Bourne. The crucial difference: this one is real.

Describing their work in a paper, researchers led by MIT engineering graduate student Abe Davis say they’ve learned to recover entire conversations and music simply by filming and analyzing the vibrations of a bag of chips or a plant’s leaves.

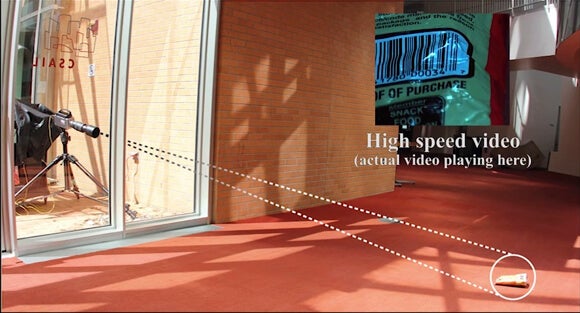

The researchers use a high-speed camera to record items—a candy wrapper, a chip bag, or a plant—as they almost invisibly vibrate to voices in conversation or music or any other sound. Then, using an algorithm based on prior research, they analyze the motions of each item to reconstruct the sounds behind each vibration.

The result? Whatever you say next to that random bag of chips lying on the kitchen table can and will be held against you in a court of law. (Hypothetically.)

The technique detects motion as small as a tiny fraction of a pixel, reconstructing sound from the subtle color changes that vibration causes in pixels along an object’s edges. It works equally well in the same room or at a distance through soundproof glass.
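To get a feel for how a shift of a fraction of a pixel can carry sound, here is a toy sketch in Python. It is not the MIT team’s algorithm. It assumes a made-up model in which one pixel sits on a soft edge and its brightness changes linearly with the edge’s tiny displacement, and every name and number in it (`edge_pixel_intensity`, the 0.01-pixel amplitude, the 440 Hz tone) is hypothetical. The point is only that a barely visible flicker in brightness, tracked frame by frame, has the same rhythm as the sound that caused it.

```python
import math

def edge_pixel_intensity(shift_px, slope=0.5):
    """Brightness of one pixel on a soft edge (assumed linear toy model)."""
    return 0.5 + slope * shift_px

def record(tone_hz, fps, n_frames, amplitude_px=0.01):
    """Simulate the pixel's brightness in each frame while the edge vibrates."""
    return [
        edge_pixel_intensity(amplitude_px * math.sin(2 * math.pi * tone_hz * k / fps))
        for k in range(n_frames)
    ]

def dominant_frequency(samples, fps):
    """Find the strongest frequency (Hz) with a naive discrete Fourier transform."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant brightness
    best_bin, best_power = 0, 0.0
    for b in range(1, n // 2):
        re = sum(c * math.cos(2 * math.pi * b * k / n) for k, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * b * k / n) for k, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_bin, best_power = b, power
    return best_bin * fps / n

# A 440 Hz tone nudging the edge by a hundredth of a pixel, filmed at 2,000 fps.
frames = record(tone_hz=440, fps=2000, n_frames=200)
print(dominant_frequency(frames, fps=2000))
```

In this sketch the brightness varies by only about one percent of the full range, yet the tone’s frequency can still be read out of it. Real footage is far noisier, which is presumably why the actual method draws on many pixels at once rather than one.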

The results are impressive (check out the video below). The researchers use their algorithm to digitally reassemble the notes and words of “Mary Had a Little Lamb” with surprising fidelity, and later, the Queen song “Under Pressure” with enough detail to identify it using the mobile music recognition app Shazam.

While the visual microphone is cool, it has limitations.

The group was able to make it work at a distance of about 15 feet, but they haven’t tested longer distances. And not all materials are created equal. Plastic bags, foam cups, and foil were best. Water and plants came next. The worst materials, bricks for example, were heavy and only poorly conveyed local vibrations.

Also, the camera matters. The best results were obtained from high-speed cameras capable of recording 2,000 to 6,000 frames per second (fps)—not the highest frame rate out there, but orders of magnitude higher than your typical smartphone.
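Why does frame rate matter so much? A standard result in signal processing, the Nyquist limit, says a sampler can only capture frequencies up to half its sampling rate, and anything higher gets "folded" down to a false lower frequency. The short Python sketch below works through that arithmetic. The 60 fps figure for a phone is an assumption for illustration, and the function names are hypothetical.

```python
def nyquist_limit(fps):
    """Highest frequency (Hz) that can be captured when sampling at `fps`."""
    return fps / 2

def apparent_frequency(tone_hz, fps):
    """Frequency (Hz) a tone appears to have after being sampled at `fps`.

    Tones above the Nyquist limit alias (fold) to a lower frequency.
    """
    return abs(tone_hz - fps * round(tone_hz / fps))

for fps in (60, 2000, 6000):  # 60 fps is an assumed smartphone frame rate
    print(fps, "fps: limit", nyquist_limit(fps), "Hz,",
          "a 440 Hz tone appears as", apparent_frequency(440, fps), "Hz")
```

Under these assumptions, a 2,000 fps camera can follow tones up to 1,000 Hz and a 6,000 fps camera up to 3,000 Hz, while an ordinary 60 fps video tops out at 30 Hz, well below the pitch of a typical voice.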

Even so, the researchers were also able to reproduce intelligible sound using a special technique that exploits the way many standard cameras record video.

Your smartphone, for example, uses a rolling shutter. Instead of recording a frame all at once, it records it line by line, one row of pixels after another. This isn’t ideal for image quality, but the distortions it produces encode motion that the MIT team’s algorithm can read.
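The reason a rolling shutter helps is timing: because each row is captured a moment after the one before it, a single frame contains many slightly different instants rather than one. The Python sketch below illustrates that idea under simplified assumptions. The 1,080-row sensor, the 60 fps rate, and the `readout_fraction` parameter (the share of each frame period spent reading rows) are all hypothetical, and this is not a description of how the MIT team processes the video.

```python
def row_timestamps(frame_index, fps, n_rows, readout_fraction=0.8):
    """Capture time (seconds) of each row in one rolling-shutter frame.

    readout_fraction is a hypothetical parameter: the share of the frame
    period spent reading rows; the rest is an idle gap between frames.
    """
    frame_period = 1.0 / fps
    line_delay = frame_period * readout_fraction / n_rows
    start = frame_index * frame_period
    return [start + r * line_delay for r in range(n_rows)]

def row_sample_rate(fps, n_rows, readout_fraction=0.8):
    """Rows captured per second while the sensor is reading out."""
    return fps * n_rows / readout_fraction

times = row_timestamps(frame_index=0, fps=60, n_rows=1080)
print(len(times), "rows captured between", times[0], "and", times[-1], "seconds")
print("rows per second during readout:", row_sample_rate(60, 1080))
```

In this toy setup, each 60 fps frame holds over a thousand separate time samples, so the rows together sample motion tens of thousands of times per second during readout. The idle gaps between frames leave holes in the record, which is one plausible reason the recovered sound is rougher.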

The result is noisier than the sound reconstructed using a high-speed camera. But in theory, it lays the groundwork for reconstructing audio information, from a conversation to a song, using no more than a smartphone camera.

Primed by the news cycle, the mind is almost magnetically drawn to surveillance and privacy issues. And of course the technology could be used for both good and evil by law enforcement, intelligence agencies, or criminal organizations.

Though the MIT method is passive, the result isn’t necessarily so different from current techniques. Surveillance organizations can already point a laser at an item in a room and infer sounds based on how the light scatters or how its phase changes.

Beyond surveillance and intelligence, Davis thinks it will prove useful as a way to visually analyze the composition of materials or the acoustics of a concert hall. And the most amazing applications may be the ones we can’t yet imagine.

None of this would be remotely possible without modern computing. The world is full of information encoded in the unseen. We’ve extended our vision across the spectrum, from atoms to remote galaxies. Now, technology is enabling us to see sound.

What other hidden information will we one day mine with a few clever algorithms?

Image Credit: MIT Computer Science and Artificial Intelligence Laboratory (CSAIL)/YouTube