The list of things computers can do better than humans is already long, and it’s getting longer every year. Now, you can add making environmental inferences.

We pride ourselves on the ability to read between the lines, find patterns and information where they aren’t immediately obvious. A hundred subtle (or not so subtle) details in a given scene may add up to the fight-or-flight judgement this is a safe place—or, run!

Turns out, computers can do the same thing, and they do it better than us.

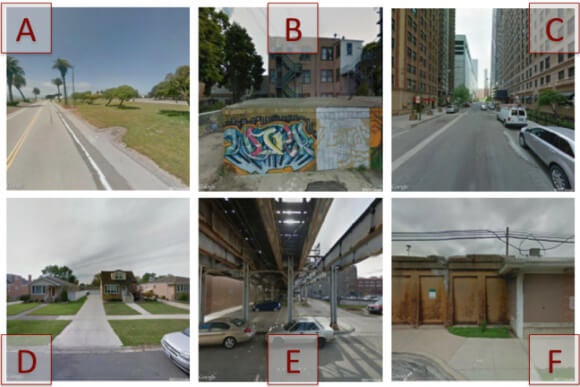

Researchers at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) showed human volunteers two pictures from different locations and asked them the questions: Which scene is closer to a McDonald’s, and which has the higher crime rate?

The humans were outperformed by a computer program.

The algorithm—developed by PhD students Aditya Khosla, Byoungkwon An, Joseph Lim and CSAIL principal investigator Antonio Torralba—was fed eight million Google images from eight urban areas across the US. Each image was tagged with key GPS data related to crime rates and McDonald’s locations.

The MIT program uses an algorithmic approach called deep learning where a computer is fed a huge amount of data and asked to independently sort individual elements. For example, on its own, the program noted you tend to find taxis, police vans, and prisons near McDonald’s—but you don’t find cliffs, suspension bridges, or sandbars.

“These sorts of algorithms have been applied to all sorts of content, like inferring the memorability of faces from headshots,” said Khosla. “But before this, there hadn’t really been research that’s taken such a large set of photos and used it to predict qualities of the specific locations the photos represent.”

Khosla thinks the algorithm could be used in an app that helps users avoid high-crime areas or aid McDonald’s in deciding where to build their next restaurant.

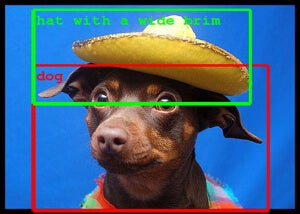

While the MIT algorithm uses contextual clues to make a specific inference, other computer vision work is allowing software to more accurately identify exactly what those clues are—like that’s a dog wearing a wide-brimmed hat.

While the MIT algorithm uses contextual clues to make a specific inference, other computer vision work is allowing software to more accurately identify exactly what those clues are—like that’s a dog wearing a wide-brimmed hat.

In this year’s ImageNet large-scale visual recognition challenge, the largest academic computer vision contest in the world, the winning team doubled the quality of computer vision classification and detection over last year’s outcome.

The goal of all this? Certainly better image search or maybe smarter apps. But also paired with a robot and camera system—improved computer vision and the ability to make simple inferences about what’s being seen make for more autonomous robots.

Such robots are already making their way onto the factory floor, and eventually, they may prove useful in other professional settings, or even in at home.