As part of Singularity Hub’s Future of Work series, I had a chance to sit down with Neil Jacobstein, the co-chair of the Artificial Intelligence and Robotics track. Neil has worked on AI applications for Fortune 100 and government customers for over 25 years. He also has deep interdisciplinary roots ranging from environmental sciences to nanotechnology and molecular manufacturing, and he has spent significant time researching the ethical implications of exponential technologies.

Machine learning and robots are going to create a lot of wealth, but they will also replace a lot of human labor. We saw many types of jobs disappear in the Industrial Revolution, but we also saw jobs created that had never existed before. In this new wave of technological disruption, do you think the rate of job displacement is going to be equal to the rate of new job creation?

Although many of my colleagues are more positive on the subject of new job creation, I think it’s likely that the pace of job automation will outstrip job creation in the short term and cause a lot of unemployment and underemployment.

In the Industrial Revolution, you could leave almost any unskilled labor job, take some courses, learn some new skills, and find a new, likely higher-paying job. What’s different this time is that we now have AIs and machine learning algorithms that can replicate many of the cognitive skills these individuals would be pursuing, and they can do it much faster. In many of the winning cases, we will see people partnering with AI and robotic systems to deliver productivity not possible with AIs or humans alone.

I think this transition will ultimately be a good thing. We will generate more wealth than we ever have before, the economy will grow, and businesses will be able to do new things they’ve never done before. In the long term, this will create a society that potentially improves the quality of life for everyone. However, in the short term, we’ll likely see displacement because we don’t have the social mechanisms in place to keep up with these changes.

Most large organizations, whether corporations or governments, are famously (or infamously) reactive rather than proactive. Is there any chance our global societies will prepare sufficiently to make this upcoming transition a smooth one, or are we potentially facing some rough seas ahead? What are the major hurdles we’ll need to get over if we want to minimize disruption?

Society has a long history of not being particularly good at proactive change. We rarely, if ever, institute policy before a train wreck. I don’t assume we’ll see a change in human nature in the next decade, and as a result, there will likely be a large number of displaced and underutilized people, and they could well be angry about it. I would rather see us be much more proactive, but it is unlikely that we will do enough in time.

The biggest hurdle is psychological: social attitudes need to change. Most people currently believe that people need to work for a living and that there is something shameful or disgraceful about not working. As a society, we tend to view people who use social welfare and safety net programs negatively. One of the great things about moving towards a world of abundance is that society will easily be able to afford to provide education, health care, shelter, and social services to everyone.

A lot of discussion has rekindled around the topic of a universal basic income. Do you think these ideas will come to fruition?

Absolutely. Eventually we’ll see a basic income as something fundamental and important to society, and it doesn’t have to be a handout. We can pay people to go back to school, to take care of the environment, and to take care of young or old people that need help. These are things that society considered too expensive to do in the past. Ideally, we’ll be able to take care of a lot of the problems that have slipped through the social cracks, leading to a higher quality of life.

There has been some smaller-scale experimentation and research, dating back to the 1970s. How long will it be before we see a basic income implemented on a large scale?

In more progressive countries, it may take 5 to 10 years for a basic income to become mainstream and reach large scale. The US will not be the first, but at least the basic minimum income has gone from being an inadmissible conversation to being admissible in both the Democratic and Republican parties. It is now entering the mainstream conversation, and that in itself is a big step forward.

At one time Social Security was controversial, and so were child labor laws; people used to think it was essential that we employ child labor. The 40-hour work week was also a big change, as were occupational safety and health and environmental protection. Further back, some people in the US and elsewhere thought we couldn’t get along without slavery. These changes in attitudes and practices were often hard-fought social changes. They were reactions to social pressure to create a more humane, safe, and sane work environment and quality of life.

It seems clear that various forms of artificial intelligences and robots will become more common in the workplace. Do you think we are capable as a society of adjusting to these changes and retooling our skill sets?

Yes. AIs and robots entered the workplace decades ago. If people are proactive and educate themselves, we can expect to see humans continuing to work with and alongside AIs and robots, ultimately achieving greater productivity than either robots or humans working separately.

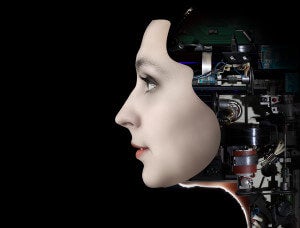

However, while we can leverage work patterns that take the best of both for quite a while, at some point the biological human component will lag behind the capabilities of the machines, and we will have to further co-evolve our cognition with machines, or be eclipsed. That process has already begun, and we can expect the interfaces to become deeper and more intimate. I am optimistic about our prospects in the long run.

It’s been said the most important skill to have in the 21st century is the ability to learn and relearn everything you’ve been taught. What are the particular skills that someone could start working on today that would help them succeed or best prepare them for the future?

I think the most important skill is the ability to ask the right questions — critical and creative thinking skills in general will not become obsolete any time soon. They are the foundation for people to improve businesses, manufacturing, their organizations, and themselves.

It is also important to be able to think statistically, model the world mathematically, and know what ideas violate the second law of thermodynamics, or are really not economically viable. It’s critical to not waste time trying to create a perpetual motion machine. On the other hand, we want to encourage people to be technically savvy, informed, optimistic and creative enough to come up with potentially breakthrough ideas that some people today may currently think are impossible.

Echoing Carl Sagan’s concerns, we are living in a society increasingly dependent on science and technology, yet the majority of people are ignorant of how any of it works on a physical level. Do you think more people should work on better understanding the technology they use every day?

Yes. I see a lot of people attempting to apply technology with no real mechanistic understanding or underlying conceptual model of how it really works. If people use their technology only in a routine, black-box way, problem solving becomes very difficult. When the routine breaks, they have difficulty being resilient and creative in their approach to fixing it.

There are, of course, many layers of abstraction — you don’t have to understand everything on the quantum mechanical scale to obtain a pretty good working model of what most macro-scale machines are doing. The goal should be to understand at least one level of abstraction deeper than your everyday interaction with the technology. However, it does help to understand the entire stack of technology levels that you want to improve. For example, understanding the extraordinary potential of atomically precise manufacturing requires modeling materials at many levels of abstraction.

Our economy and society are structured to prefer specialization. Individuals are encouraged to build up more and more knowledge in a single area. Do you think this will be the case going forward?

I think that specialization is a very brittle model for problem solving and creativity. In the beginning of my lectures, I often address the importance of being interdisciplinary. The world is a seamless whole. We don’t want to become victims of single specialty perspectives. It’s best to build a solid interdisciplinary foundation of problem solving skills — you can still specialize later on, depending on the problems you are most interested in solving.

At Singularity University we tend to be quite optimistic about the future. Do you think exponential technologies will truly lead to abundance?

Yes. The convergence of AI, robotics, synthetic biology, nanotechnology, and molecular manufacturing really will lead to an explosion of wealth and resource availability. The question is when we get there, how do we ensure a reasonable distribution for everyone, and create a society that is workable and healthy for all of its citizens?

There has recently been a lot of public exposure to concerns surrounding Artificial General Intelligence thanks to comments from Stephen Hawking, Bill Gates, and others. Elon Musk even donated $10 million to the Future of Life Institute for research into protective measures. Do you think these concerns are justified?

The AI community has begun to take the downside risk of AI very seriously. I attended a Future of AI workshop in January of 2015 in Puerto Rico sponsored by the Future of Life Institute. The ethical consequences of AI were front and center. There are four key thrusts the AI community is focusing research on to get better outcomes with future AIs:

Verification – Research into methods of guaranteeing that the systems we build actually meet the specifications we set.

Validation – Research into ensuring that the specifications, even if met, do not result in unwanted behaviors and consequences.

Security – Research on building systems that are increasingly difficult to tamper with – internally or externally.

Control – Research to ensure that we can interrupt AI systems (even with other AIs) if and when something goes wrong, and get them back on track.

These aren’t just philosophical or ethical considerations; they are system design issues. I think we’ll see a greater focus on these kinds of issues not just in AI, but in software generally, as we develop systems with more power and complexity.

Will AIs ever be completely risk free? I don’t think so. Humans are not risk free! There is a predator/prey aspect to this in terms of malicious groups who choose to develop these technologies in harmful ways. However, the vast majority of people, including researchers and developers in AI, are not malicious. Most of the world’s intellect and energy will be spent on building society up, not tearing it down. In spite of this, we need to do a better job anticipating the potential consequences of our technologies, and being proactive about creating the outcomes that improve human health and the environment. That is a particular challenge with AI technology that can improve itself. Meeting this challenge will make it much more likely that we can succeed in reaching for the stars.