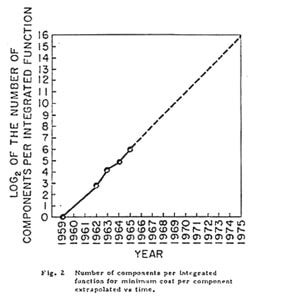

On April 19, 1965, Intel cofounder Gordon Moore (then of Fairchild Semiconductor) published a paper on the fledgling technology of integrated circuits. In the paper, Moore noted that the number of components (diodes, capacitors, resistors, and transistors) that could fit on a chip had been doubling every year.

Despite what amounted to a relatively sparse set of historical data, Moore made a bold forecast.

The doubling trend, he wrote, would continue for the next decade, and the number of components would increase from 64 in 1965 to 65,000 by 1975. Moore was off by a factor of two, and he later revised the doubling period to two years. But otherwise, the prediction proved eerily prescient. His colleague, Caltech professor Carver Mead, dubbed it Moore’s Law, and the name stuck.
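The 65,000 figure is ten doublings of 64: 64 × 2^10 = 65,536. A minimal Python sketch of that compounding, assuming a clean, fixed doubling period (the function name `project_components` is a hypothetical illustration, not anything from Moore’s paper), also shows how much slower the same decade looks under the later two-year period:

```python
def project_components(n0: float, years: float, doubling_period: float) -> float:
    """Project a component count forward, assuming it doubles every `doubling_period` years.

    Implements N(t) = N0 * 2 ** (t / T).
    """
    return n0 * 2 ** (years / doubling_period)


# Moore's 1965 forecast: start at 64 components and double every year for a decade.
print(project_components(64, 10, 1))  # 65536.0
# The same decade under the revised two-year doubling period.
print(project_components(64, 10, 2))  # 2048.0
```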

In an awe-inspiring demonstration of exponential growth, transistors on modern chips now number in the billions. In 2014, the semiconductor industry manufactured 250 billion billion transistors, an average of 8 trillion every second. That’s roughly 25 transistors for every star in the Milky Way, every second.
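The per-second and per-star figures follow from simple division. A rough Python check, assuming a 365-day year and about 300 billion stars in the Milky Way (an assumed round value; published estimates span roughly 100 to 400 billion):

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds; ignores leap years
TRANSISTORS_2014 = 250e18              # "250 billion billion" transistors made in 2014
STARS_MILKY_WAY = 300e9                # assumption: ~300 billion stars

per_second = TRANSISTORS_2014 / SECONDS_PER_YEAR
per_star_per_second = per_second / STARS_MILKY_WAY

print(f"{per_second:.2e} transistors per second")         # 7.93e+12
print(f"{per_star_per_second:.0f} per star, every second")  # 26
```

The result, about 8 trillion per second and roughly 26 per star, matches the rounded figures in the text; a different star count shifts the per-star number proportionally.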

This massive explosion of microchips has made more than just personal computers possible. In a recent blog post, economist Timothy Taylor wrote, “Many other technological changes—like the smartphone, medical imaging technologies, decoding the human gene, or various developments in nanotechnology—are only possible based on a high volume of cheap computing power.”

Today, miniaturization of key components, in particular transistors, continues to push into the nanoscale.

The latest chips boast 14-nanometer features (a nanometer is a billionth of a meter), and upcoming chips may push to 10 nanometers and beyond. However, while transistor density (the number of transistors that fit on a single chip) continues to double, other properties, like clock speed, began slowing years ago.

Meanwhile, the added benefit of shrinking transistors further is getting smaller relative to the cost.

“For planning horizons, I pick 2020 as the earliest date we could call [Moore’s Law] dead,” Intel’s former chief architect Bob Colwell said in a presentation back in 2013. “You could talk me into 2022, but whether it will come at 7 or 5nm, it’s a big deal.” Colwell and others are skeptical that new technology will pick up the slack. That is, once Moore’s Law ends, we won’t see anything like it in computing any time soon.

But not everyone shares this view. In his book The Singularity Is Near, Ray Kurzweil plots the price-performance (processing power per dollar) of five computing paradigms: electromechanical, relay, vacuum tube, discrete transistor, and (finally) integrated circuits.

Together, they follow a smooth exponential curve. How? Each new technology advances along an S-curve: exponential growth at first, then a flattening out as the technology reaches its limits. But as one technology flattens, the next paradigm takes over. The result is a series of overlapping S-curves that combine into a broader exponential curve with no significant flattening (yet).
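The overlapping S-curve argument can be illustrated with a toy model in Python. Every parameter here is a hypothetical assumption chosen for illustration, not Kurzweil’s data: each of five paradigms follows a logistic curve, each successor saturates 10 times higher than its predecessor, and the curves’ midpoints are spaced 5 arbitrary time units apart.

```python
import math


def logistic(t: float, ceiling: float, midpoint: float, rate: float = 1.0) -> float:
    """One paradigm's S-curve: slow start, rapid middle, saturation at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def stacked_performance(t: float, paradigms: int = 5) -> float:
    """Sum of overlapping S-curves (toy model).

    Assumptions: paradigm k saturates at 10**k and reaches half its
    ceiling at t = 5*k, so each successor arrives later and tops out higher.
    """
    return sum(
        logistic(t, ceiling=10.0 ** k, midpoint=5.0 * k) for k in range(paradigms)
    )


# Sample the stacked total at each paradigm's midpoint.
for k in range(5):
    t = 5.0 * k
    print(f"t={t:4.1f}  stacked performance={stacked_performance(t):10.2f}")
```

No single logistic term keeps growing, but sampled at each midpoint the sum rises roughly tenfold every 5 time units, with ripples where one paradigm hands off to the next.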

From this point of view, as Moore’s Law turns 50, the question is: What comes next?

Candidates include quantum computing (for specific applications), 3D chips (extending flat components into the third dimension), and neuromorphic chips (mimicking the brain). Materials may shift from silicon to gallium arsenide, carbon nanotubes, or graphene. And chips may swap electricity for light (photonics).

None of these is certain to succeed. Colwell, for example, thinks that although they represent advances, they will yield only incremental improvements. Time may prove him right. On the other hand, Kurzweil’s observations imply that a new technology will arise to continue the trend.

“My experience has been whenever you think you’re out of gas on a learning curve, there’s a breakthrough,” Carver Mead recently said. “But the breakthrough never comes from where you’re thinking. It’s never clear until it’s already happened what’s going to be the next really exciting thing. But there always is one.”

To our readers: What is the best candidate for the next great shift in computing technology? Do you believe it can fully assume Moore’s mantle? And if so, when?

Image Credit: Shutterstock.com; Intel