This is the first in a four-part series looking at the big ideas in Ray Kurzweil’s book The Singularity Is Near. Be sure to read the other articles:

- Technology Feels Like It’s Accelerating—Because It Actually Is

- How to Think Exponentially and Better Predict the Future

- Ray Kurzweil Predicts Three Technologies Will Define Our Future

“A common challenge to the ideas presented in this book is that these exponential trends must reach a limit, as exponential trends commonly do.” –Ray Kurzweil, The Singularity Is Near

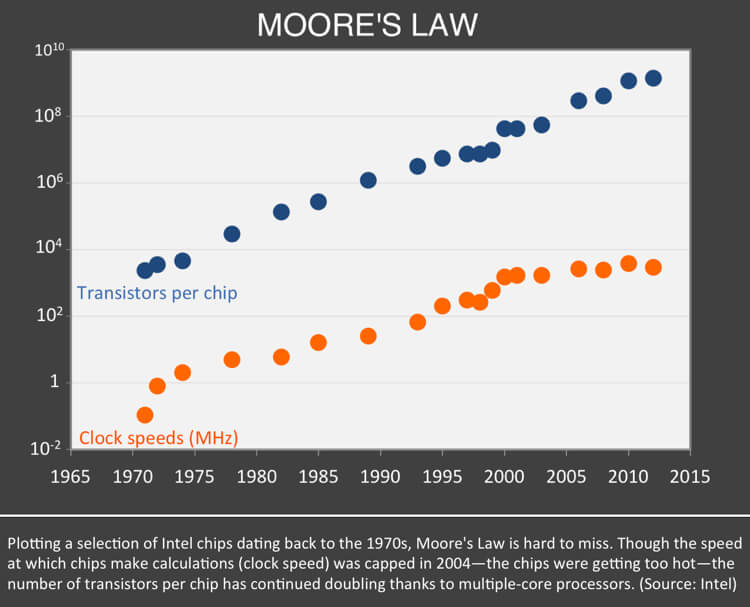

Much of the future we envision today depends on the exponential progress of information technology, most popularly illustrated by Moore’s Law. Thanks to shrinking processors, computers have gone from plodding, room-sized monoliths to the quick devices in our pockets or on our wrists. Looking back, this accelerating progress is hard to miss—it’s been amazingly consistent for over five decades.

But how long will it continue?

This post will explore Moore’s Law, the five paradigms of computing (as described by Ray Kurzweil), and the reason many are convinced that exponential trends in computing will not end anytime soon.

What Is Moore’s Law?

“In brief, Moore’s Law predicts that computing chips will shrink by half in size and cost every 18 to 24 months. For the past 50 years it has been astoundingly correct.” –Kevin Kelly, What Technology Wants

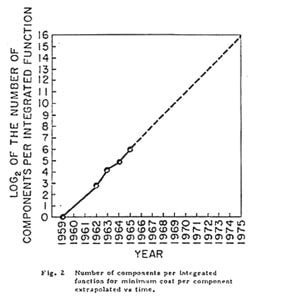

In 1965, Fairchild Semiconductor’s Gordon Moore (later a cofounder of Intel) had been closely watching early integrated circuits. He realized that as components got smaller, the number that could be crammed onto a chip was rising regularly, and processing power was rising along with it.

Based on just five data points dating back to 1959, Moore estimated the time it took to double the number of computing elements per chip was 12 months (a number he later revised to 24 months), and that this steady exponential trend would result in far more power for less cost.
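The arithmetic behind a doubling period is simple but easy to underestimate. The minimal Python sketch below assumes clean, uninterrupted exponential growth (real chip data is noisier) and compares Moore’s original 12-month estimate with his 24-month revision over a single decade. The function names are hypothetical, chosen only for illustration.

```python
import math


def growth_factor(years: float, doubling_period_years: float) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def doublings_needed(start: float, target: float) -> float:
    """Number of doublings required to grow from `start` to `target`."""
    return math.log2(target / start)


# Compare a 12-month doubling period with a 24-month one over ten years.
for period in (1.0, 2.0):
    print(f"doubling every {period:g} yr -> x{growth_factor(10, period):,.0f} in 10 years")

# Roughly how many doublings separate one component from a billion transistors?
print(f"{doublings_needed(1, 1e9):.1f} doublings")
```

A 12-month doubling yields about a thousandfold gain in ten years, while a 24-month doubling yields a 32-fold gain. Roughly 30 doublings separate a single component from a billion transistors, which at one doubling every two years works out to something on the order of 60 years.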

Soon it became clear Moore was right. Amazingly, though, the doubling didn’t taper off in the mid-1970s, where his 1965 projection ended; chip manufacturing has largely kept pace ever since. Today, affordable computer chips pack a billion or more transistors spaced nanometers apart.

Moore’s Law has been solid as a rock for decades, but the core technology’s ascent won’t last forever. Many believe the trend is losing steam, and it’s unclear what comes next.

Experts, including Gordon Moore, have noted that Moore’s Law is less a law and more a self-fulfilling prophecy, driven by businesses spending billions to match the expected exponential pace. Since 1991, the semiconductor industry has regularly produced a technology roadmap to coordinate its efforts and spot problems early.

In recent years, the chipmaking process has become increasingly complex and costly. After processor clock speeds leveled off around 2004 because chips were overheating, multicore processors took the baton. But now, as feature sizes approach near-atomic scales, quantum effects such as electron tunneling are expected to make transistors too unreliable.

This year, for the first time, the semiconductor industry roadmap will no longer use Moore’s Law as a benchmark, focusing instead on other attributes, like efficiency and connectivity, demanded by smartphones, wearables, and beyond.

As the industry shifts focus, and Moore’s Law appears to be approaching a limit, is this the end of exponential progress in computing—or might it continue awhile longer?

Moore’s Law Is the Latest Example of a Larger Trend

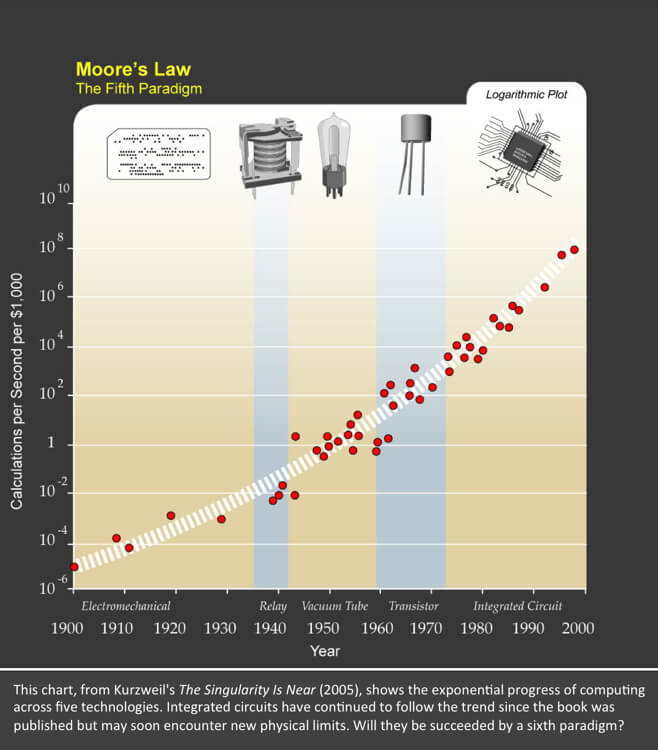

“Moore’s Law is actually not the first paradigm in computational systems. You can see this if you plot the price-performance—measured by instructions per second per thousand constant dollars—of forty-nine famous computational systems and computers spanning the twentieth century.” –Ray Kurzweil, The Singularity Is Near

While the exponential growth of recent decades has come from integrated circuits, it is part of a larger trend, one identified by Ray Kurzweil in his book The Singularity Is Near. Because the chief outcome of Moore’s Law is more powerful computers at lower cost, Kurzweil tracked computational speed per $1,000 over time, measured in instructions per second per thousand constant (inflation-adjusted) dollars.
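Because the metric grows exponentially, plotting it on a logarithmic axis turns the trend into a straight line, and the slope of that line gives a doubling time. The sketch below assumes a simple least-squares fit to log-transformed values (one common approach, not necessarily how Kurzweil fit his chart). The function name `doubling_time` is hypothetical, and the numbers are synthetic, generated to double exactly every 1.5 years; they are not Kurzweil’s data.

```python
import math


def doubling_time(years, values):
    """Estimate the doubling time (in years) of an exponential trend.

    Fits a straight line to log2(value) versus year by ordinary least squares.
    The slope is doublings per year, so its reciprocal is years per doubling.
    """
    if len(years) != len(values) or len(years) < 2:
        raise ValueError("need at least two (year, value) pairs")
    logs = [math.log2(v) for v in values]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    var = sum((x - mean_x) ** 2 for x in years)
    slope = cov / var  # doublings per year
    return 1 / slope


# Hypothetical, synthetic price-performance figures (instructions per second
# per $1,000 in constant dollars) that double exactly every 1.5 years.
years = [1960, 1970, 1980, 1990, 2000]
ips_per_1000_dollars = [2 ** ((y - 1960) / 1.5) for y in years]
print(f"estimated doubling time: {doubling_time(years, ips_per_1000_dollars):.2f} years")
```

With real historical data the points scatter around the line, and the fitted slope summarizes the overall trend rather than any single machine.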

This measure accounts for all the “levels of ‘cleverness’” baked into every chip, such as different industrial processes, materials, and designs, and lets us compare computing technologies from across history. The result is surprising.

The exponential trend in computing began well before Moore noticed it in integrated circuits or the industry began collaborating on a roadmap. According to Kurzweil, Moore’s Law is the fifth computing paradigm. The first four were computers built from electromechanical, relay, vacuum tube, and discrete transistor computing elements.

There May Be ‘Moore’ to Come

“When Moore’s Law reaches the end of its S-curve, now expected before 2020, the exponential growth will continue with three-dimensional molecular computing, which will constitute the sixth paradigm.” –Ray Kurzweil, The Singularity Is Near

While the death of Moore’s Law has often been predicted, today’s integrated circuits do appear to be nearing physical limits that will be challenging to overcome, and many believe progress in silicon chips will level off in the next decade. So, will exponential progress in computing end too? Not necessarily, according to Kurzweil.

The integrated circuits described by Moore’s Law, he says, are just the latest technology in a larger, longer exponential trend in computing—one he thinks will continue. Kurzweil suggests integrated circuits will be followed by a new 3D molecular computing paradigm (the sixth) whose technologies are now being developed. (We’ll explore candidates for potential successor technologies to Moore’s Law in future posts.)

It’s worth noting, too, that Kurzweil isn’t predicting that exponential growth in computing will continue forever; it will inevitably hit a ceiling. Perhaps his most audacious idea is that the ceiling is much farther away than we realize.

How Does This Affect Our Lives?

Computing is already a driving force in modern life, and its influence will only increase. Artificial intelligence, automation, robotics, virtual reality, unraveling the human genome: these are just a few of the world-shaking advances that computing enables.

If we’re better able to anticipate this powerful trend, we can plan for its promise and peril, and instead of being taken by surprise, we can make the most of the future.

Kevin Kelly puts it best in his book What Technology Wants:

“Imagine it is 1965. You’ve seen the curves Gordon Moore discovered. What if you believed the story they were trying to tell us…You would have needed no other prophecies, no other predictions, no other details to optimize the coming benefits. As a society, if we just believed that single trajectory of Moore’s, and none other, we would have educated differently, invested differently, prepared more wisely to grasp the amazing powers it would sprout.”

To learn more about the exponential pace of technology and Ray Kurzweil’s predictions, read his 2001 essay “The Law of Accelerating Returns” and his book, The Singularity Is Near.

Image Credit: Shutterstock, Intel (Gordon Moore’s 1965 integrated circuit chart), Ray Kurzweil and Kurzweil Technologies, Inc/Wikimedia Commons/CC BY