Wireless Device Reads Brain Signals, Turns Them into Speech (Video)

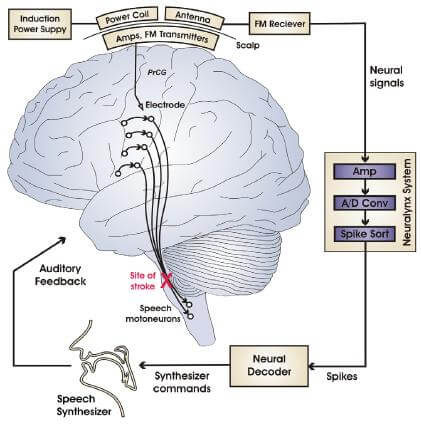

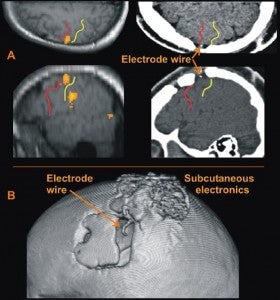

Remarkable news keeps coming for those who are trapped in their own bodies. People with locked-in syndrome, a condition in which a healthy mind is unable to express itself due to brain damage, are slowly being opened up through electrodes that make direct contact with the motor neurons in their brains. Frank Guenther at Boston University's Speech Lab has teamed up with Phillip Kennedy at Neural Signals to measure activity in the speech centers of the brain through implanted electrodes. These electrodes relay the information to a sub-dermal amplifier, which passes it to a computer via wireless FM transmission. The result: a patient has demonstrated the ability to form rudimentary vowel sounds on a synthesizer using just his thoughts. It's a small step, but research like this may one day allow someone to simply think of the words he wants to say and have a computer do the talking for him. We have some videos of the wireless brain-signal-to-speech test results after the break.

Brain-machine interfaces (BMIs), also known as brain-computer interfaces (BCIs), are in development by several different teams across the globe. The BrainGate project uses similar wireless transmission technology to connect electrodes in the brain to cursors on a computer, or even the controls of an electric wheelchair. Like many such projects, BrainGate uses motor neurons to control movement. We've seen other teams work with robotic arms and prosthetic limbs. The Speech Lab/Neural Signals BMI is somewhat rarer because it takes the signals that would normally drive mouth, tongue, and vocal cord movement and interprets them directly as sounds. This layer of interpretation is difficult to perfect, but its pursuit gives us hope that one day we could see devices that actually "read" our thoughts and translate them into images, sounds, and other sensations. Once we achieve that level of "mind-reading", there could be a direct conduit between our mental and digital worlds. Totally immersive virtual reality, surrogate bodies... the possibilities really expand at that point.

For now, research into turning thoughts into sounds is still at a rudimentary level. Neural Signals designed the hardware (electrodes, amp, receiver) and implanted it, but the Speech Lab had to develop the software routines that interpret the information as sounds. As described in the paper published in PLoS ONE, the joint team of scientists was able to produce a system that gave the patient the means to make vowel sounds. A synthesized voice produced on a computer gave the patient auditory feedback so that he could hear how his "thoughts" were being translated and could focus on correcting them as needed. That feedback was remarkably fast, about 50 ms, on par with the normal speed of talking. After practice, the patient's accuracy at listening to vowel sounds and then repeating them improved from 45% to 70% (and beyond). In the following video from New Scientist, you can see how the patient is given audio prompts and then repeats the vowel sounds using his thoughts and the BMI. The second video, from Wired, gives you a better idea of what appears on the screen. You may want to turn down the volume before watching, as the synthesized voice is fairly loud and atonal.

When I first heard about this project, I sort of expected to see a video with, you know, a little more pizzazz. Maybe someone with a Darth Vader voice, or a Stephen Hawking look-alike. The Speech Lab/Neural Signals BMI, however, is at once less impressive and more important. No, the patient isn't making clear, intelligible speech yet. No, the voice doesn't carry human emotion. But he's talking with wires in his brain, people! Wires in the brain and a little FM transmitter are all that connect this guy's speech centers to the outside world. It's not Darth Vader, but it still gives me chills.

Guenther and Kennedy plan to make some major improvements to the BMI in the next few rounds of experimentation. First, the number of implanted electrodes will increase from 2 or 3 up to 32. This will allow for the interpretation of neural signals that describe more complex mouth movements. Patients will be able to manipulate a "virtual tongue" with their brain activity and thus be able to form consonants (and hopefully full words). Also, the BMI currently requires lab computers to work, but the research team is hoping to move this functionality to a laptop.

As always, research like this doesn't happen in a vacuum. The data gathered in this experiment will inform others and vice versa, hopefully accelerating the development process. Already we've seen how research into Broca's area in the brain is providing insight into how we speak. The Speech Lab/Neural Signals BMI, which also deals with Broca's area, could benefit from that research. This sort of basic neural research, however, is likely to have a long lead-up time before we see tangible effects outside of the lab. In the short term, noninvasive devices like Audeo or the artificial larynx are more likely to make measurable improvements in a larger number of people's lives. For those with locked-in syndrome, however, BMIs are really the only non-biological solution. As we learn how to release their minds into the outside world, we will find the key to unlock our own as well.

[photo credits: Speech Lab]

[video credits: New Scientist, Wired]
