Imagine if all the sounds in your world translated into visual experiences. Sound psychedelic? This cross-blending of sensory input – called synesthesia – is normally limited to individuals with a rare neurological condition, and maybe a few Burners. But a new vision technology, called the vOICe system, aims to treat blindness by translating images from a camera into audio signals. There is evidence that this technological sensory substitution rewires the brains of blind users, restoring a form of vision and drastically improving their lives.

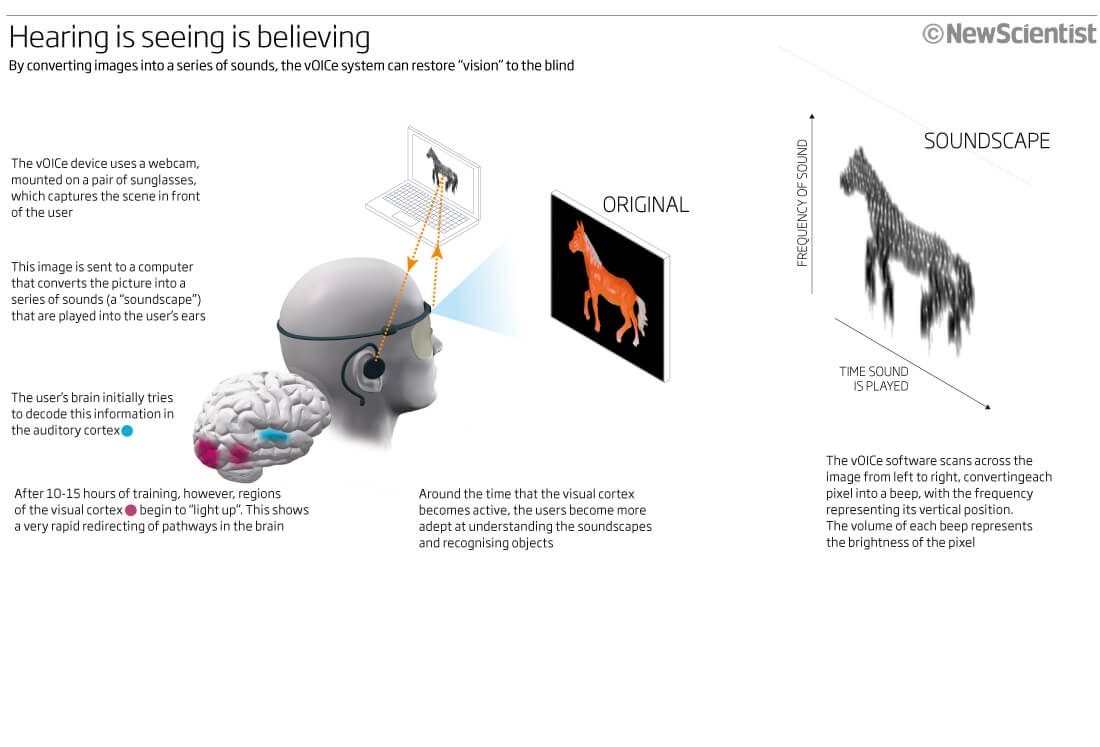

The vOICe system (OIC = “Oh, I See”) involves three components: sunglasses with a miniature camera installed, software that translates images into different audio stimuli, and headphones for the user. Using volume, frequency, stereo, and other audio variables, the local environment is represented to the blind individual as a “soundscape” through the headphones. Research suggests the system takes advantage of adult neural plasticity to redirect audio stimuli to visual areas of the brain; blind users report a partial return of their visual experience. Beyond its clinical applications, the system might also shed some light on theories of how the brain works.

Here’s how the vOICe works. The camera scans the environment every second from left to right, picking up the relative distance of objects; this information is passed on to software in a netbook. Data about the user’s left side enters their left ear, and data about the right side enters their right ear. The timing of each sound represents information along the horizontal axis (objects to the left are represented first, followed by those to the right), and the frequency of the sound is determined by information along the vertical axis. Brighter objects produce louder audio. The resulting sound is pretty weird – a noisy, electronic blend of grating audio input (maybe a bad trip?).
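For the programmers in the audience, the mapping described above can be sketched in a few lines of Python. To be clear, this is a toy illustration and not Meijer's actual software: the function name `image_to_soundscape`, the frequency range, the sample rate, and the choice to give higher rows a higher pitch are all assumptions made for the example. It takes a small grayscale image, sweeps across it column by column over one second, gives each row its own pitch, sets loudness by brightness, and pans each column between the left and right ear.

```python
import math


def image_to_soundscape(image, duration=1.0, sample_rate=16000,
                        f_low=500.0, f_high=5000.0):
    """Toy image-to-sound mapping (hypothetical helper, not the vOICe code).

    image: list of rows (top row first), each a list of brightness
           values between 0.0 (dark) and 1.0 (bright).
    Returns (left, right): two lists of audio samples.
    """
    n_rows = len(image)
    n_cols = len(image[0])
    # Each column gets an equal slice of the one-second sweep.
    samples_per_col = int(duration * sample_rate / n_cols)

    # Vertical axis -> frequency. Assumption: the top row is the
    # highest pitch, spaced evenly on a logarithmic scale.
    freqs = []
    for r in range(n_rows):
        frac = 1.0 - r / (n_rows - 1) if n_rows > 1 else 0.5
        freqs.append(f_low * (f_high / f_low) ** frac)

    left, right = [], []
    for c in range(n_cols):
        # Horizontal axis -> time and stereo position (0 = left, 1 = right).
        pan = c / (n_cols - 1) if n_cols > 1 else 0.5
        for i in range(samples_per_col):
            t = (c * samples_per_col + i) / sample_rate
            # Brightness -> loudness: brighter pixels add a louder tone.
            value = sum(image[r][c] * math.sin(2 * math.pi * freqs[r] * t)
                        for r in range(n_rows)) / n_rows
            left.append((1.0 - pan) * value)
            right.append(pan * value)
    return left, right


# A single bright pixel in the top-left corner: a high tone, heard
# only in the left ear, during the first half of the sweep.
left, right = image_to_soundscape([[1.0, 0.0],
                                   [0.0, 0.0]])
```

The two sample lists could then be written out as a stereo WAV file (for instance with Python's built-in `wave` module) to hear the result. A real image with dozens of rows stacks that many tones on top of each other, which goes some way toward explaining why the output sounds so noisy.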

Check out this video from TechTV:

vOICe is the brainchild of Dutch inventor Peter Meijer, who conceived of the device in 1982. It wasn’t until 1991 that he built his first prototype, and the device didn’t reach its first portable (but bulky) version until 1998. Today, it is highly portable and a viable option for everyday use. The system can even be used with a smartphone, which runs the software and takes input from the phone’s internal camera. It’s a nice testament to how accelerating technology brought the size and price of components down until the system became a practical possibility.

What’s amazing about the system – and what makes it a hot item for neuroscience research – is that it appears to restore the actual subjective experience of vision (visual qualia) to blind users, rather than just teaching them to correlate objects and sounds. Users have reported the return of experiences like depth and the sense of empty space in their environment. The restored vision is not the same as normal visual experience – one user described it as being comparable to an old black and white film, while others report vague impressions of objects as shades of grey. Research is now underway to understand how the vOICe system might be rewiring the brain to achieve this effect.

In 2002, neuroscientist Amir Amedi and colleagues found a part of the occipital (i.e., visual) cortex that responded to touch input as well as visual information. Their research suggested that this area – the lateral occipital tactile-visual area (LOtv) – was not involved in visual processes per se, but rather in 3D object recognition (a function tied to both visual and haptic experience). More recently, they studied the LOtv while people used the vOICe system. Regular users of the system showed LOtv activity under fMRI, while it remained inactive for newbies – further supporting the idea that this region handles features of object recognition, regardless of the sensory source.

Some users of the system even report that after training with the vOICe, they experience vision analogues when they hear everyday sounds. This more permanent reorganization of brain networks is a strong testament to the potential of adult neural plasticity. Research with the vOICe also challenges some of our widely held assumptions about how the brain is organized, specifically the extent to which brain regions are modular. If incoming sonic stimuli obey the same laws as visual input, they seem capable of generating visual experience; what does that say about the “function” of the brain regions involved? Is function plastic, too?

Good questions, and exciting ones. The vOICe also makes you wonder what other types of artificial synesthesia could be technologically induced, and how. I’ll leave that question to the comment section.

[Source: New Scientist]

[images: vOICe; New Scientist]

[video: TechTV]