Robot Hand Copies Your Movements, Mimics Your Gestures (video)

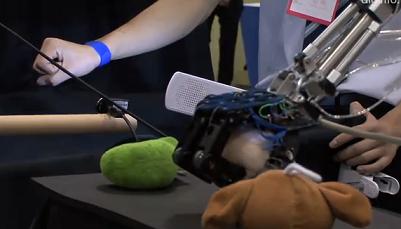

2010 may go down in history as the year of gesture recognition. We've seen it in TVs, we have it in our video games (thanks, Kinect!), and now we have it in our robots. The Biological Cybernetics Lab at Tsukuba University, headed by Kiyoshi Hoshino, recently demonstrated a robotic arm that can mimic the position and movements of your own. Using two cameras, the system tracks your hand and arm more than 100 times per second and relays that information to the robot so it can repeat what you do. The system is fast enough that there is relatively little lag between your gesture and the robot's copied motion. Hoshino and his students have pushed the system further and taught it to recognize 100 distinct hand shapes. This allows the robot arm not only to track the location and movement of your arm but also to reliably perform the same actions, like picking up an object or waving hello. The robot arm was demonstrated at the recent 3DExpo in Yokohama, and you can see it in action in the video below. This sort of intuitive interface could let almost anyone control and program a robot. Hooray for the democratization of technology!

The Hoshino Lab should be commended for its cool approach to robotic controls, but I'm not sure how impressed we should be with its camera setup. Recent work with the Xbox Kinect sensor shows that you can get impressive 3D capture for just $150, and one or two of those sensors would probably give you a much better picture of hand shapes. Considering how much open-source code is being written for the Kinect, it seems like it should be easy to get such a system up and running fairly quickly.

Also, while I'm totally in favor of gesture-controlled robots, the video above demonstrates that the technology has some serious limitations. First, the lag time, while pretty small, isn't negligible. The human controller clearly has to wait and watch before making his next move in order to pick up the stuffed animals properly. Second, the system gives the user no tactile feedback, and that's a problem for me. Without some form of haptics, anyone controlling a robot through a gesture system will have to be absurdly vigilant or they will end up crushing everything they grab. I think there's a reason why Hoshino uses soft stuffed animals in the demonstration, and it's not because cuteness sells.

Even with these limitations, I think the Hoshino Lab shows us that non-traditional controls for robotics could arrive much sooner than we think. They're going to be needed. Automation is creeping into all levels of manufacturing, and if small businesses want to keep up with larger corporations, they are going to need their own kind of industrial robots. The owners of these low-level industrial bots won't be engineers or experts in computer code. They will need a simple interface that lets them teach a robot how to perform a complex task. The gesture-guided robot arm from Tsukuba University is a good example of that kind of simplified, intuitive programming technology. I hear that Rodney Brooks and his new startup Heartland Robotics are pursuing a somewhat similar system. With the work of these teams, and many others around the world, we may develop a personal robot that learns by watching humans. Anything you can do, it will be able to do.

Only better.

[screen capture and video credit: DigInfo News]

[sources: DigInfo News video, Biological Cybernetics Lab]
