So you love your touchscreen tablet, smartphone, and dashboard. But you still have those moments when your fingers miss that tactile feel, that haptic feedback. Wouldn’t it be awesome if our tablets and smartphones could give us a keyboard on demand? With the push of a button it would rise up – almost magically – right from the glass surface. And when we want to go back to playing Angry Birds, the buttons would simply recede.

It sounds like magic, but now it is reality. Created by Tactus Technology, a Fremont, California-based start-up, the Tactile Layer is a deformable layer that sits on top of a touchscreen sensor and display. Its deformations can create buttons, such as the keys of a QWERTY keyboard or the number keys of a phone, or just about any shape, making your touchscreen tactile whenever you want it to be. The company is demonstrating the Tactile Layer publicly for the first time today at the Society for Information Display’s ‘Display Week’ in Boston. I had a chance to speak with Tactus’ CEO, Dr. Craig Ciesla, and ask him how Tactus might ‘reshape’ the way we use touchscreens.

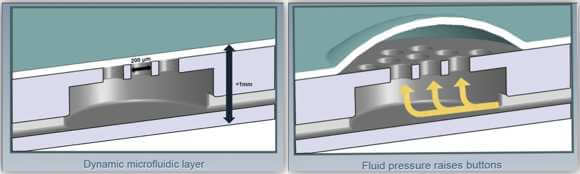

The Tactile Layer replaces the glass or plastic that normally sits on top of the touchscreen sensor and display. The layer is about 0.75mm to 1mm thick, and at its top sits a deformable, clear layer 200 nm thick. Beneath the clear layer, a fluid travels through micro-channels and is pushed up through tiny holes, deforming the clear layer to create buttons or shapes. The buttons or patterns remain for as long as they are needed, whether for a few seconds or for hours while you use your iPad to write that novel. And because the fluid is trapped inside the buttons, they stay put without consuming additional power. They come and go quickly, taking only about a second to form or disappear. When they’re no longer needed, the buttons recede and you’re left with a touchscreen indistinguishable from any other Tactus-less touchscreen.

Of course, if you’re not using the buttons, you don’t want to notice that they’re there. The company had to make sure that its technology, when not in use, was truly invisible to the user. Ciesla explained, “Two important factors for our tactile product: it has to be flat in the down state and it has to be invisible. We have been able to fine tune the invisibility of the micro-channels through our proprietary fluid formulation. This allows us to have a network of channels running throughout the panel that can’t be seen. Additionally, we have developed unique fabrication processes that allow us to create buttons that go completely flat without any residual optical effects.”

Here’s a video to give you an idea of what the system looks like – and it looks pretty slick:

I suggested to the Tactus people that their system seemed to make sense for tablets, given the space available for an entire keyboard, but I wasn’t so sure about smartphones. They told me that, in informal conversations, people seem split on whether tactile feedback would benefit tablets or smartphones more. It would certainly make typing for long stretches on a tablet more bearable, but a lot of people would also enjoy feeling the bumps of a tiny keyboard while texting. It would give your iPhone a BlackBerry feel.

By allowing us to type better, Ciesla thinks Tactus could be a game-changer for how we use tablets. “Tablets are content consumption devices that are being thrust into content creation roles. People either suffer the inadequacies or attach keyboard accessories to make the experience more tolerable. By integrating a keyboard directly into the device interface, it shifts the usage model and transforms the device to enable content creation.”

Tactus isn’t only looking to give our tablets and smartphones an intuitive feel; the company also envisions the deformable surface enhancing touchscreens in cars, medical devices, ATMs, or any other device where touchscreens are found. They pointed out to me that using a car’s touchscreen to, say, change the radio station takes at least a second or two because you have to actually look at the buttons. A tactile system that lets you keep your eyes on the road would be much safer.

For now, the button patterns will be configured by device manufacturers, but Tactus says that users will eventually be able to configure them themselves. The company expects the first Tactus products to be out sometime in 2013.

Exactly where and in what form Tactus will appear is still being worked out. The company is currently running studies to test which features work best for users and which need to be improved. Ciesla explained, “What we provide is really a tool to enable device designers to differentiate their products and specifically the user interface of their devices, by leveraging our tactile surface technology. As part of that, we want to provide design guidelines that will help ensure the optimal use of our technology. So one aspect of the usability study will focus on design optimization.”

Just last week, the Consumer Electronics Association (CEA) selected Tactus as one of ten finalist companies in its Eureka Park Challenge, which seeks to recognize “game-changing” entrepreneurs and start-ups. Will Tactus really be a “game-changer,” as the CEA and Ciesla believe?

One has to wonder whether this is really a case of necessity being the mother of invention, or the other way around. We’ll have to see how people react to Tactus at today’s demonstration. Tactus is also taking a scientific approach, working with usability firms and user groups to quantify the benefits of adding touch to the touchscreen.

Touchscreen or keyboard? The perfect solution probably lies somewhere in between, and Tactus may very well just get us there.

[image credits: Tactus Technology]

video: Tactus Technology