In five years, IBM thinks computers will touch, taste, smell, hear, and see. Sensing devices will aid online shoppers (touching products), parents (interpreting the sound of baby cries), chefs (cooking a perfectly tasty and healthy meal), and doctors (smelling disease). No word on a sixth sense, as yet the sole domain of humans.

This vision of sensory machines is courtesy of IBM’s seventh annual “5 in 5,” a list of technologies forecast to hit the mainstream in five years or less.

The list, first published in 2006, has included gems like the oft-touted (and downplayed) 2007 prediction “your cell phone will be your wallet, ticket broker, concierge, bank, shopping buddy, and more.” Of course, that prediction may have been aided (or perhaps inspired) by the first-generation iPhone.

Other predictions reflect tech trends of the day that have yet to really catch on, like 2006’s prediction that there would soon be a 3D Internet. IBM’s “5 in 5” home page justifies the forecast by noting the popularity of online 3D games and specific IBM projects using the “emerging 3D Internet.” But let’s face it: the Internet ain’t 3D yet.

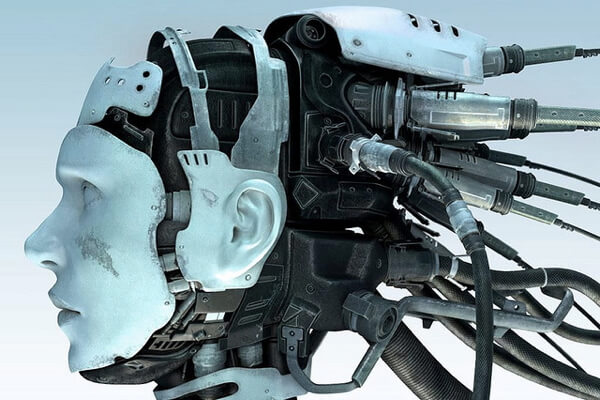

2012’s list is all about the senses. Computers can already see and hear by way of camera and microphone. And plenty of apps use these senses both for fun, like recording music, and for more serious purposes, like monitoring moles for skin cancer.

But why not go further? The “5 in 5” visionaries predict we’ll have touch screens that not only sense our fingers but give back subtle vibrations to simulate how certain items feel—from a silk shirt to a cotton pillow. Or devices that will smell our breath to see if we have diabetes, cancer, or a cold.

These are certainly novel ideas, but the “life changing” trends IBM’s list alludes to lie not in how machines sense (they already sense in some ways as humans do and in many ways humans don’t) but in how machines make use of that sensory data.

Your smartphone “tasting” the wind to predict a storm is all well and good. But it needs to understand what that information means, how it is useful for humans, and how to communicate it to us. In that sense, the list seems right on. The next five years are likely to bring smarter technology, thanks to more widely distributed sensory networks and clever software to interpret all that data.

In fact, big data analysis is an IBM specialty right now. So no surprises there.

As for touching silk or burlap through our iPhones? The idea of machines taking in human-like sensory input on one end and delivering it to users on the other evokes tech fads of yore. Take the dot-bomb-era iSmell device from DigiScents, for example, since named one of the 50 worst tech fails in history. Or 1960’s Smell-O-Vision.

Weird Al Yankovic predicted in 2007 that we’ll soon be smelling Richard Simmons “sweating to the oldies.” His point, of course, is that no one wants to smell Richard Simmons no matter what he’s doing.

Computers sensing just like humans sounds cool. But the usefulness of such technology will drive its adoption. More likely—and perhaps more useful—will be machines that move beyond our rather limited sensory suite to do precisely the things we can’t do for ourselves already.