We often hear of robots forcing humans to learn new skills, but Willow Garage spinoff Industrial Perception, Inc. (IPI) aims to do just the opposite. IPI is in the business of training robots for the real world. Step one: Give them eyes. Step two: Teach them to understand what they’re seeing. Step three: Do something about it.

Combining environmental awareness with decision-making and, ultimately, action is something we take for granted. But in robotics, it is an incredibly difficult problem, and solutions are only now beginning to show up in machines, which is why watching a robot look, analyze, and then choose a course of action is a touch unnerving.

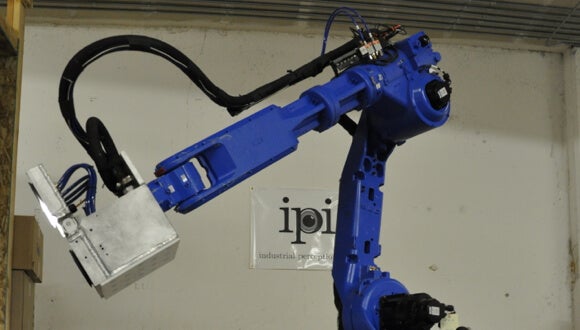

When I recently visited IPI, I couldn’t help but notice their industrial robotic arm resembled a mechanical cobra…thinking. I found myself wondering, did it just cock its head? And oh man, I think it’s looking at me. Luckily, in practice, IPI’s bots are the playful sort. Less cobra, more puppy.

During our demo, the robots tossed boxes and sorted through colorful balls in search of stuffed animals. Such activities may seem trivial, but the engineering behind them is serious stuff. And IPI CEO Troy Straszheim assured me their creations can also load and unload boxes, as advertised. (It’s just more fun to throw them around!)

How does it work? IPI’s robots use a visual system akin to Kinect.

Straszheim says, “They use multiple 3D sensors, engineered algorithms, and plenty of IPI Special Sauce.” Each robot creates a real-time digital 3D model of the scene in front of it. The robots have been taught to search for specific shapes amid the clutter, be it irregularly stacked boxes or a jumble of colored balls, and to perform actions on those objects.
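IPI doesn’t disclose its special sauce, but the first step in any Kinect-style pipeline is standard: converting each depth pixel into a 3D point with the pinhole camera model. The sketch below assumes a single calibrated sensor with hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`); the names `Intrinsics` and `depth_to_points` are illustrative, not IPI’s code.

```python
from dataclasses import dataclass

@dataclass
class Intrinsics:
    fx: float  # focal length in pixels (x), hypothetical calibration value
    fy: float  # focal length in pixels (y)
    cx: float  # principal point, column
    cy: float  # principal point, row

def depth_to_points(depth, k):
    """Back-project a depth image (list of rows, metres; 0 means no reading) into camera-frame 3D points."""
    points = []
    for v, row in enumerate(depth):        # v = pixel row
        for u, z in enumerate(row):        # u = pixel column
            if z <= 0:
                continue                   # skip pixels with no depth return
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy
            points.append((x, y, z))
    return points

# Tiny 2x2 depth image with one missing reading.
k = Intrinsics(fx=1.0, fy=1.0, cx=0.0, cy=0.0)
cloud = depth_to_points([[1.0, 0.0], [2.0, 2.0]], k)
```

Fusing several sensors, as IPI does, would additionally require transforming each sensor’s points into a shared frame using that sensor’s calibrated pose.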

In the video below (forgive the amateur videography!), you see what the robot sees. In this case, the robot is searching for a toy dog. It fits a 3D mesh of the desired object over the shapes in its field of vision. When the bot finds a match, it projects the appropriate mesh over the object to confirm. In the second half of the video, you can see the robot turn visual information into action by picking up the targeted item (a toy bear, in this case).
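To make “fitting a mesh over the shapes” a little more concrete, here is a deliberately crude stand-in, not IPI’s method: treat the object model as a set of 3D points, slide it to each candidate position, and score the fit by the average distance from each model point to its nearest scene point. The helper names, the translation-only search, and the 0.05 m acceptance threshold are all assumptions for illustration.

```python
import math

def mean_nn_distance(model, scene):
    """Average distance from each model point to its nearest scene point (lower is a tighter fit)."""
    total = 0.0
    for p in model:
        total += min(math.dist(p, q) for q in scene)
    return total / len(model)

def best_translation(model, scene, candidates):
    """Shift the model by each candidate offset and return (offset, score) for the best fit."""
    best = None
    for dx, dy, dz in candidates:
        moved = [(x + dx, y + dy, z + dz) for x, y, z in model]
        score = mean_nn_distance(moved, scene)
        if best is None or score < best[1]:
            best = ((dx, dy, dz), score)
    return best

# Toy example: an L-shaped "object" sitting in the scene at x = 5, plus one clutter point.
model = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
scene = [(5.0, 0.0, 0.0), (6.0, 0.0, 0.0), (5.0, 1.0, 0.0), (10.0, 10.0, 10.0)]
candidates = [(0.0, 0.0, 0.0), (2.0, 2.0, 0.0), (5.0, 0.0, 0.0)]

offset, score = best_translation(model, scene, candidates)
MATCH_THRESHOLD = 0.05  # hypothetical acceptance threshold, in metres
matched = score < MATCH_THRESHOLD
```

A production system would also search over rotations, replace the brute-force nearest-neighbor loop with a spatial index such as a k-d tree, and refine the best candidate with an algorithm like iterative closest point (ICP).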

Though IPI’s toy-picking robot is perhaps the more complex of the two demos, going after irregularly shaped items amid a field of confusing shapes and colors, the box-picking robot is more practical. This robotic arm is fitted with a hinged hand lined with vacuum-powered suction cups. Confronted with a stack of boxes in a simulated truck bed, the arm surveys the scene, picks the best box to move, decides where to grab it, and then tosses the box to IPI product manager Erin Rapacki.
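The article doesn’t say how the arm ranks boxes, so the following is only a plausible heuristic under stated assumptions, not IPI’s logic: among boxes whose visible top face is wide enough for the suction hand, take the highest (working from the top of the stack down), break ties by horizontal distance to the arm, and grasp at the center of the top face. The `Box` fields, the 0.1 m minimum face size, and the function name `choose_box` are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Box:
    name: str
    top_center: tuple  # (x, y, z) of the visible top face, metres, robot base frame (assumed)
    width: float       # top-face dimensions, metres
    length: float

def choose_box(boxes, arm_xy=(0.0, 0.0), min_face=0.1):
    """Pick the highest box whose top face can seat the suction cups.

    Ties in height go to the box horizontally closest to the arm.
    Returns (box, grasp_point), or None if nothing is graspable.
    """
    graspable = [b for b in boxes if min(b.width, b.length) >= min_face]
    if not graspable:
        return None
    best = min(graspable, key=lambda b: (-b.top_center[2], math.dist(b.top_center[:2], arm_xy)))
    return best, best.top_center  # grasp at the centre of the top face

boxes = [
    Box("low", (1.0, 0.0, 0.5), 0.4, 0.3),
    Box("high_far", (1.5, 0.5, 0.9), 0.4, 0.3),
    Box("high_near", (0.8, 0.0, 0.9), 0.4, 0.3),
    Box("sliver", (0.5, 0.0, 1.2), 0.05, 0.3),  # tallest, but too narrow to grasp
]
choice, grasp = choose_box(boxes)
```

A real system would also weigh reachability, whether neighboring boxes block the pick, and whether the suction seal is likely to hold.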

The final video shows the robot working through a nonstandard situation: a poorly stacked pile of boxes. You can see it imaging, thinking, and readjusting its view (at one point it turns and stares at me) before finally choosing the top box to pick up and toss.

How does this box-picking robot compare to a human worker? Straszheim says, “That depends on what you mean by picking skills. They can lift more than humans, and do it for longer, but they’re generally not as flexible. The industry has end effector designs that do well for certain classes of objects, like boxes. Currently, the systems are slower than humans when measured over tens of minutes, but they’ll outlast humans, especially if the loads are heavy. They are getting faster, fast.”

As for mobility, that’s already happening. Straszheim again: “Wynright Corporation has a Truck Unloader system for sale which uses IPI software in its two perception-intensive operations: finding boxes and navigating inside a truck. It is about the size of a Miata, with a big blue Motoman robot on the left shoulder, and a telescoping conveyor belt on the right shoulder. You point it at a trailer full of boxes and it will unload it, unsupervised.”

Robots are in nearly every factory and industrial process these days. They can be programmed to hammer hot steel, stamp out industrial forms, or weld parts. But the vast majority of them are inflexible, capable of only highly repetitive tasks in controlled environments. As robots like IPI’s gain more sense and sensibility, and as their cost comes down, they’ll be able to move around a factory floor and take on varied, less scripted tasks largely independent of human direction.