How Google’s AI Beat a Human at ‘Go’ a Decade Earlier Than Expected


Last week, news broke that the holy grail of game-playing AI—the ancient and complex Chinese game Go—was cracked by AI system AlphaGo.

AlphaGo was created by Google’s DeepMind, a UK group led by David Silver and Demis Hassabis. Last October the group invited three-time European Go champion Fan Hui to their office in London. Behind closed doors, AlphaGo defeated Hui 5 games to 0—the first time a computer program has beaten a professional Go player.

Google announced the achievement in a blog post, calling it one of the “grand challenges of AI” and noting it happened a decade earlier than experts predicted.

A brief history of AI vs. human game duels

AI battling humans in games has been a long-standing method for testing the intelligence of a computer system.

In 1952, a computer first mastered the classic game tic-tac-toe (or noughts and crosses), and checkers followed in 1994. In 1997, IBM’s supercomputer Deep Blue cracked the game of chess when it beat world chess champion Garry Kasparov. A decade and a half later, in 2011, IBM’s supercomputer Watson used advanced natural language processing to defeat its human opponents at Jeopardy!

In 2014, a DeepMind algorithm taught itself to play dozens of Atari games. The system combined deep neural networks and reinforcement learning to turn raw pixel inputs into real-time actions—and pretty solid gaming skills.

But humans still reigned at Go and were expected to for a while yet.

Why we thought Go required human intellect

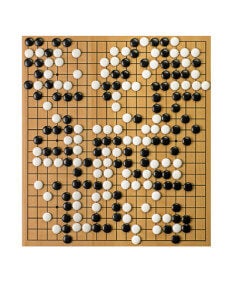

With more potential board configurations than there are atoms in the universe, Go is in a league of its own in terms of game complexity. Because of that vast range of possibilities, it is a game that requires human players to use logic, yes, but also intuition.
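The comparison with atoms can be checked with a little arithmetic. The sketch below is only a rough bound: it counts every way to mark each of the 361 points as empty, black, or white, which includes arrangements that are not legal in play, and it assumes the commonly cited order-of-magnitude estimate of 10^80 atoms in the observable universe (a figure not given in this article).

```python
# Rough upper bound on Go board configurations versus atoms in the universe.
BOARD_POINTS = 19 * 19               # 361 intersections on a 19-by-19 grid
STATES_PER_POINT = 3                 # each point is empty, black, or white

# Upper bound only: many of these arrangements are not legal positions.
upper_bound = STATES_PER_POINT ** BOARD_POINTS

# Assumption: a commonly cited order-of-magnitude estimate, not from the article.
ATOMS_IN_UNIVERSE = 10 ** 80

digits = len(str(upper_bound))
ratio_digits = len(str(upper_bound // ATOMS_IN_UNIVERSE))

print(f"Upper bound has {digits} digits")
print(f"That exceeds the atom estimate by a factor with {ratio_digits} digits")
```

Even after illegal arrangements are discarded, the count stays far beyond anything a computer could enumerate, which is why the rest of the story is about searching selectively.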

The rules of Go are relatively simple: two players take turns placing black or white stones on a 19-by-19 grid. A stone is captured when the opponent surrounds it completely, and the player whose color controls more of the board at the end of the game wins.

The twist is, there are too many possible moves for a player to comprehend, which is why experts often make their moves based on intuition.

Though intuition was thought to be a uniquely human element needed to master Go, DeepMind’s AlphaGo shows this isn’t necessarily the case.

How AlphaGo’s deep learning works

The search tree in Go, meaning every possible sequence of moves in a game, is far too large even for computational brute force. So, DeepMind designed AlphaGo’s search algorithm to be more human-like than its precursors.

DeepMind’s David Silver says “[the algorithm is] more akin to imagination.”

Prior Go algorithms used a powerful search technique called Monte-Carlo tree search (MCTS), where a random sample of a search tree is analyzed to determine the best next moves. AlphaGo combines MCTS with two deep neural networks, a machine learning method that has recently taken AI by storm. Each network is made up of millions of neuron-mimicking connections that help analyze possible moves.

AlphaGo simulates the remainder of the game, but uses the data from the two neural networks to narrow and guide its search. After simulation, AlphaGo selects its move.
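The tree-search half of that description can be sketched on a toy game. The example below is plain Monte-Carlo tree search with random playouts, not AlphaGo's method: it has no neural networks, and the game (take one to three stones from a pile, whoever takes the last stone wins) is a stand-in chosen for brevity. All names here are illustrative. In AlphaGo, the two networks would take over the jobs done below by the uniform choice of moves to try and by the random playout.

```python
import math
import random

MAX_TAKE = 3  # toy game: remove 1-3 stones; taking the last stone wins


class Node:
    def __init__(self, stones, parent=None, move=None):
        self.stones = stones          # stones left for the player about to move
        self.parent = parent
        self.move = move              # move that led to this node
        self.children = []
        self.untried = list(range(1, min(MAX_TAKE, stones) + 1))
        self.visits = 0
        self.wins = 0.0               # wins for the player who moved INTO this node


def rollout(stones, rng):
    """Play random moves to the end; True if the player to move first wins."""
    turn = 0
    while stones > 0:
        stones -= rng.randint(1, min(MAX_TAKE, stones))
        if stones == 0:
            return turn == 0
        turn ^= 1
    return False  # no stones left: the previous player already took the last one


def select_child(node, c=1.4):
    """UCT rule: balance a child's win rate against how rarely it was tried."""
    return max(
        node.children,
        key=lambda ch: ch.wins / ch.visits
        + c * math.sqrt(math.log(node.visits) / ch.visits),
    )


def mcts_best_move(stones, iterations=3000, seed=0):
    rng = random.Random(seed)
    root = Node(stones)
    for _ in range(iterations):
        node = root
        # 1. Selection: walk down fully expanded nodes.
        while not node.untried and node.children:
            node = select_child(node)
        # 2. Expansion: add one new child if any move is untried.
        if node.untried:
            move = node.untried.pop()
            child = Node(node.stones - move, parent=node, move=move)
            node.children.append(child)
            node = child
        # 3. Simulation: random playout from the new position.
        mover_wins = not rollout(node.stones, rng)
        # 4. Backpropagation: flip the winner's perspective at each level.
        while node is not None:
            node.visits += 1
            node.wins += 1.0 if mover_wins else 0.0
            mover_wins = not mover_wins
            node = node.parent
    # The most-visited move is the one the search trusts most.
    return max(root.children, key=lambda ch: ch.visits).move


print(mcts_best_move(5))
```

With five stones on the table, the winning idea is to leave the opponent a multiple of four, so the search should settle on taking one stone. Go's tree is vastly larger than this toy's, which is why random sampling alone was not enough and the search needed guidance.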

The head of DeepMind, Demis Hassabis, told Wired, “The most significant aspect of all this…is that AlphaGo isn’t just an expert system, built with handcrafted rules. Instead, it uses general machine-learning techniques to win at Go.”


Using two deep learning networks to train each other

The group at Google started by training AlphaGo on 30 million human moves, until the neural network could predict the next human move with 57 percent accuracy.

But for AlphaGo to go beyond simply mimicking human moves, the two neural networks played thousands of games against each other, learning new strategies and how to identify patterns on their own. This trial-and-error process is called reinforcement learning. (The same process was used to train DeepMind’s Atari AI.)
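The trial-and-error idea can be shown in miniature. The sketch below is an assumption-laden toy, not DeepMind's training setup: it replaces the neural networks with a small lookup table, uses a simple pile-of-stones game (take one to three stones, last stone wins) instead of Go, and has a single shared table play both sides. All function and parameter names are hypothetical.

```python
import random

MAX_TAKE = 3  # toy game: remove 1-3 stones; taking the last stone wins


def legal_moves(stones):
    return range(1, min(MAX_TAKE, stones) + 1)


def train_self_play(games=20000, start_max=10, epsilon=0.2, alpha=0.1, seed=0):
    """Learn win estimates for (stones, move) pairs purely from self-play."""
    rng = random.Random(seed)
    # Start with no knowledge: every move is rated as a coin flip.
    q = {(s, m): 0.5 for s in range(1, start_max + 1) for m in legal_moves(s)}
    for _ in range(games):
        stones = rng.randint(1, start_max)
        history = [[], []]            # (stones, move) pairs chosen by each side
        player = 0
        winner = 0
        while stones > 0:
            moves = list(legal_moves(stones))
            if rng.random() < epsilon:
                move = rng.choice(moves)                        # explore
            else:
                move = max(moves, key=lambda m: q[(stones, m)])  # exploit
            history[player].append((stones, move))
            stones -= move
            winner = player           # whoever moved last took the final stone
            player ^= 1
        # Nudge every move the winner made toward 1 and the loser's toward 0.
        for p in (0, 1):
            reward = 1.0 if p == winner else 0.0
            for key in history[p]:
                q[key] += alpha * (reward - q[key])
    return q


def greedy_move(q, stones):
    return max(legal_moves(stones), key=lambda m: q[(stones, m)])


q = train_self_play()
print(greedy_move(q, 5), greedy_move(q, 3))
```

Nobody tells the table which moves are good. It starts out rating everything equally and, after enough games against itself, ends up preferring the moves that leave the opponent in a losing position.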

Notably, it isn’t just the software. AlphaGo’s computational power is vast, amply drawing on Google’s cloud computing might. And even so, its ability is nowhere near that of the human mind. Hassabis emphasized that Go is a closed game with a defined goal, and therefore does not represent even a microcosm of the real world.

So, no, we do not need to have an existential crisis over AlphaGo.

https://www.youtube.com/embed/bHvf7Tagt18

A decade earlier than predictions

In May of 2014, Wired published a feature titled, “The Mystery of Go, the Ancient Game That Computers Still Can’t Win,” where computer scientist Rémi Coulom estimated we were a decade away from having a computer beat a professional Go player. (To his credit, he also said he didn’t like making predictions.)

Crazy Stone, Coulom’s computer program referenced in the article, used the Monte Carlo tree search technique, but unlike AlphaGo, it lacked the reinforcement learning of two separate neural networks training each other. That is, Crazy Stone was not able to teach itself to identify new patterns as it progressed in the game.

Added to quick and dramatic breakthroughs in other classically thorny AI problems like image recognition, AlphaGo is further testament to the power of deep learning.

“Deep learning is killing every problem in AI,” Coulom recently told Nature.

Next up for AlphaGo is a match this March in Seoul against legendary Go world champion, Lee Sedol, who in the 2014 Wired article was quoted saying, “There is chess in the western world, but Go is incomparably more subtle and intellectual.”

After AlphaGo’s recent defeat of Hui, the algorithm’s abilities are crystal clear. Beating Lee, however, would be comparable to IBM’s Deep Blue beating Garry Kasparov in 1997, except that AlphaGo would do so using the next generation of machine learning.

“We’re pretty confident,” Hassabis says.

Image source: Shutterstock

Alison tells the stories of purpose-driven leaders and is fascinated by various intersections of technology and society. When not keeping a finger on the pulse of all things Singularity University, you'll likely find Alison in the woods sipping coffee and reading philosophy (new book recommendations are welcome).
